There is a specific moment in large AI programs when you can tell something is about to come apart. It is rarely dramatic. Sometimes it is a pause. A breath. Someone scrolling through a document they supposedly wrote, trying to reconcile two sentences that should not contradict each other. The tension shows up in micro-movements before it shows up anywhere measurable.

And the strangest part? You check the system, and everything looks fine. Infrastructure looks fine, pipelines look fine, monitoring dashboards look uneventful. The models are still doing exactly what they were trained to do. The only thing misbehaving is the organisation itself, usually in ways people pretend not to notice, because acknowledging them would require rewriting half the assumptions holding the program together.

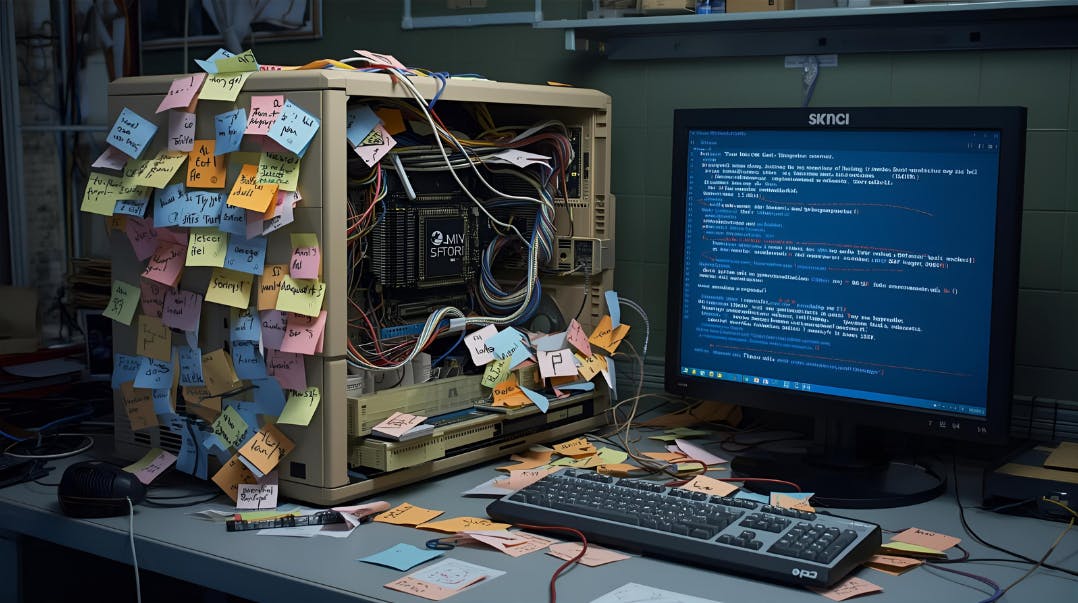

By November 2025, the gap between the system and the organisation running it is not philosophical. It is visible. AI systems have outpaced the people, processes, and decision pathways meant to run them. They move faster than governance, faster than agreements, faster than politics, faster than the illusions of alignment people rely on to get through meetings. Eventually you understand that the real failure mode is not technical. It is organisational. And it feels a lot like watching an outdated OS crash under a workload it was never built for.

The Human OS From 1998

If you stay in this world long enough, you begin to sense the age of the operating system running underneath everything. It feels old in a tactile way: not old as in “legacy code,” but old as in “built before anyone imagined systems would need to coordinate at this level.”

You see it in small things first: an approval cycle stretched over weeks because three people must agree on who should write the summary; a Slack thread where everyone speaks confidently about a decision made in a meeting you know for a fact ended with no decision; a document labelled “Final_3_REV2” that omits the one constraint half the architecture depends on.

Modern AI expects parallelism. Humans inside enterprises behave like a single-threaded process that believes it can multitask because it has many windows open. They pass context around like fragile packets no one tracks, and they assume other teams share definitions nobody has checked in years. The Human OS has no concept of concurrency; it was designed for a time when a decision could take a month and still be considered timely.

After multiple cycles across pre-sales, architecture reviews, delivery oversight, and the weird limbo where strategy becomes execution, you stop expecting anything else. You stop being surprised by how long context takes to propagate. The OS still boots, barely, but only because people have learned not to ask it to run anything built after 2015.

Misalignment Drift: The First Symptom

Misalignment does not arrive fully formed; it comes disguised as clarity. Someone rephrases a requirement, a team reinterprets a constraint, a diagram gets redrawn with slightly different boundaries. No one calls it drift, because the participants believe they are making things clearer. They are not. They are forking the system state.

Later, someone else builds on the new interpretation. A third team quietly assumes the old meaning. A fourth team writes a timeline based on an assumption one person made but never declared. Weeks pass. The drift becomes distributed. No one can pinpoint when it started, because each microscopic shift made sense at the time.

You see it in meetings where two groups discuss what sound like two different projects, even though the document title is identical. You see it when engineers look confused but say nothing, because the confusion feels too small to escalate. The confusion compounds. AI systems, especially those with continuous learning loops, cannot tolerate mismatched assumptions. A single misunderstood variable infects the entire chain.

Eventually, you realise misalignment spreads the way a memory leak does: not obvious, not catastrophic, just slowly eroding the system until the whole thing stutters. The architecture still looks correct. The understanding does not.

Decision Latency Collapse: When Time Starts Working Against You

There is a moment when everything slows down, even though no one admits it. Work gets done. Tasks move. People reply. But the program does not advance. It is as if time thickens around it. A decision that should take hours waits behind an approval path far longer than it has any business being. And because the delay is distributed, you cannot point to a single culprit.

If you trace any stalled decision backwards, you discover the same pattern: a queue inside a queue inside a queue. A governance lead is waiting on clarification. A director is waiting for a summary. The summary is waiting on an internal sync, and the sync is waiting on an answer from a team that has not been told it is the dependency.

Late-2025 AI workflows assume decision-making behaves like an event-driven architecture: fast, triggered by change, responsive. The Human OS, however, resolves decisions in batches. It handles one thing at a time, in order, in a world where that order no longer maps to reality.

You end up with organisational race conditions. Two decisions that need to be resolved at the same moment are resolved one after the other. By the time the second resolution lands, the system state has already shifted, and the decision is obsolete on arrival. This is not incompetence. It is latency. And once latency takes over, collapse becomes a matter of time.

Incentive Decoherence: The System Stops Sharing the Same Reality

If drift is quiet and latency is invisible, incentive decoherence is loud. It is the moment when the organisation stops sharing the same reality. You see it not through process failures but through language that means different things depending on who speaks.

Sales expands the scope to win the deal. Delivery compresses the scope to hit deadlines. Architecture reframes scope to maintain integrity. Governance adds scope to mitigate risk. Leadership asks for velocity and certainty simultaneously. Each group is acting rationally according to its incentives. The problem is that these incentives point in opposing directions.

One day, you hear three teams describe the “MVP” using three entirely incompatible definitions. None of them notices the contradiction, because each believes its definition is the obvious one. It is not malicious. It is structural. There is no shared optimisation function holding the system together.

No distributed system can maintain coherence when each node is incentivised to behave differently. An organisation is no different. Once incentive decoherence sets in, you are no longer aligning people; you are negotiating with physics. And physics wins.

The Panic: When AI Outruns the Human OS

The organisational kernel panic does not begin with a meltdown. It begins with a contradiction that no one can unwind. A design decision that invalidates a contract. A dependency that contradicts a governance rule. A performance requirement that negates a timeline. The system enters a state that cannot be resolved without breaking something people are culturally unwilling to break.

Agentic systems. Vertical AI stacks. Multi-model orchestration. The entire 2025 AI ecosystem assumes consistency as a default state. The Human OS does not provide it. So when drift, latency, and decoherence combine, the organisation hits a state with no valid forward path.

Meetings become circular. Ownership dissolves. Roadmaps fold into themselves. People start repeating sentences that sound like progress but describe nothing. Everyone senses the system is stuck, but avoids naming it because naming it means acknowledging the OS cannot scale to the demands placed on it.

And the truth becomes impossible to ignore: the AI did not fail. The humans running it did.

Debugging the Human OS (Without Pretending It’s Painless)

You cannot debug an organisation the way you debug code. You cannot replicate the issue. You cannot isolate the process. You cannot step through the failure using logs, because the logs are conversations, assumptions, and half-forgotten decisions scattered across channels no one audits.

But you can spot patterns that precede collapse. A requirement phrased differently across documents. A team describing architecture using metaphors instead of details. A decision everyone believes is final, even though no one remembers who made it. These are not minor issues. They are early signs of drift, latency, and decoherence.

Recovery begins when someone stops pretending everything is aligned. When someone maps the real dependency graph instead of the politically safe one. When someone acknowledges that governance running slower than model iteration guarantees failure. When alignment is treated as a volatile state that must be revalidated regularly, not a ceremony performed quarterly.

AI roadmaps recover only when the organisation finally admits the technical system is not the fragile part. The Human OS is.

The Quiet Crash We Keep Blaming on AI

AI almost never breaks by itself. It breaks because the organisation cannot keep up with what the system demands. The Human OS stalls. It contradicts itself. It splits into multiple inconsistent realities and refuses to merge back. And every time this happens, people point at the model. Easier to blame the thing without feelings.

The real challenge has never been scaling models. It is scaling the organisational logic needed to operate them. Until the Human OS evolves, the kernel panic will keep repeating: quietly, subtly, predictably, and always at the point where the system asks the humans to move faster than they are structurally capable of moving.