We have all heard the phrase "data is the new oil." Most of the time we think about that in terms of spreadsheets, clickstreams, or logs of user behavior. But there is a massive chunk of human communication that remains messy and unstructured. I am talking about your voice.

For decades, engineers have treated voice as a secondary problem: something to be transcribed into text so that we could actually do something with it. However, the transition from sound to data is anything but simple. A recent episode of the Stack Overflow podcast featured a fascinating conversation with Scott Stephenson, the CEO of Deepgram. His journey from particle physicist to voice AI pioneer tells us a lot about why your voice is such a massive data problem.

From Dark Matter To Deep Learning

It turns out that hunting for dark matter and understanding a noisy phone call have a lot in common. Scott spent years deep underground in China, building dark matter detectors that were buried beneath rock to shield them from cosmic radiation. These machines were looking for tiny signals in a sea of noise. They had to digitize waveforms at incredible speeds and with extremely low latency to catch particles that might interact with ordinary matter only once in a blue moon.

When he looked at the world of audio back in 2015, he saw a similar challenge. Industry leaders at the time told him that deep learning would never work for voice. They claimed that the only way to handle speech was through a complex series of steps, such as guessing phonemes and using statistical models to rank candidate words. To a physicist, this looked like a chain of lossy connections.

If you lose a little bit of signal at every step, you end up with a result that is barely usable. The vision for Deepgram was to skip all those intermediate steps and build a single end-to-end model that learns the mapping from audio to text directly from data, so that the data effectively writes the model itself. The incumbents considered this approach impossible, but it paved the way for the speech-to-text speeds we see today.
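To make the compounding argument concrete, here is a back-of-the-envelope sketch. The four stage names and their "signal retained" fractions are hypothetical numbers chosen for illustration, not measurements of any real system, and the sketch assumes each stage's losses are independent so they simply multiply.

```python
from math import prod

# Hypothetical fraction of useful signal each stage of a classic cascaded
# pipeline keeps. Illustrative assumptions only, not measured values.
CASCADE_STAGES = {
    "feature_extraction": 0.95,
    "phoneme_guessing": 0.90,
    "word_ranking": 0.92,
    "language_model_rescoring": 0.95,
}

def end_to_end_retention(stage_retention):
    """Signal left after all stages, assuming independent, multiplicative losses."""
    return prod(stage_retention.values())

print(f"{end_to_end_retention(CASCADE_STAGES):.3f}")  # prints 0.747
```

Four stages that each look acceptable on their own (90 to 95 percent) leave only about three quarters of the signal at the end, which is the intuition behind replacing the chain with one model trained end to end.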

The Problem With Dialects And Noise

If everyone spoke like a news anchor in a quiet studio, voice AI would be a solved problem. But humans do not speak that way. We use slang, we have thick accents, and we often talk over the sound of a blender or a busy street.

Traditional voice systems relied on human experts to define rules for how language works. But as soon as you move from a clean office to a drive-through window, those rules break. The modern solution is to treat voice as a raw signal. By training on massive datasets that include messy, real-world audio, models learn to ignore the noise and focus on the intent.
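One common way to expose a model to messy audio is additive noise augmentation: mix a background recording into clean speech at a controlled signal-to-noise ratio (SNR), measured in decibels as 10·log10(P_speech / P_noise). The article does not describe how Deepgram builds its training data, so treat this as a generic sketch of the technique. The function names are hypothetical, and synthetic signals (a sine tone for "speech", Gaussian noise for the "blender") stand in for real recordings.

```python
import math
import random

def mean_power(samples):
    """Average squared amplitude of a signal."""
    return sum(s * s for s in samples) / len(samples)

def mix_at_snr(clean, noise, snr_db):
    """Return clean + scaled noise so that 10*log10(P_clean / P_noise) == snr_db."""
    if len(noise) < len(clean):
        raise ValueError("noise clip must be at least as long as the clean clip")
    noise = noise[: len(clean)]
    noise_power = mean_power(noise)
    if noise_power == 0:
        raise ValueError("noise clip is silent")
    # Scale the noise (not the speech) to hit the requested ratio.
    target_noise_power = mean_power(clean) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / noise_power)
    return [c + scale * n for c, n in zip(clean, noise)]

# Stand-ins for real recordings: one second of a 440 Hz tone and of
# Gaussian noise, both at an assumed 16 kHz sample rate.
SAMPLE_RATE = 16_000
clean = [0.5 * math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(SAMPLE_RATE)]

# Sweep several SNRs so training covers both quiet rooms and loud kitchens.
augmented = {snr: mix_at_snr(clean, noise, snr) for snr in (20.0, 10.0, 0.0)}
```

Scaling the noise rather than the speech keeps the speech level fixed, so the only thing that changes across the augmented copies is how hard the model has to work to hear it.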

There is still a big gap, though. We have great models for English, but the long tail of global dialects remains a challenge. This is where synthetic data comes in: some companies want to use machines to generate audio to train other machines. Scott points out that this only works if the generator is an incredible compressor of the world. You need a world model that understands the context of a conversation, not just the sounds of the words.

Why Voice Cloning Is A Weapon

We cannot talk about voice AI without touching on the ethics of cloning. We have all seen stories of fraud in which a loved one calls for help, but the voice on the line is actually a machine. This is the dark side of the technology, and many companies are struggling to manage it.

Deepgram takes a firm stance here by not allowing unfettered voice cloning. The logic is simple: if a tool can be used to scam someone, it is a weapon. The goal for the future is to build responsible systems that include watermarks. Imagine a world where you also have a companion tool that tells you whether the voice on the other end of the line is a human or a synthetic generation. It is an arms race in which the defense must be as sophisticated as the attack.
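The article does not say how such watermarks would work. As an illustration only, the sketch below swaps in a textbook spread-spectrum idea: a secret key seeds a pseudorandom ±1 sequence that the generator adds to its audio at low amplitude, and the companion detector correlates incoming audio with the same sequence. The key, strength, and threshold values are hypothetical, and a production watermark would also have to be inaudible and survive compression, resampling, and phone-line filtering, none of which this toy attempts.

```python
import math
import random

def watermark_chips(key, length):
    """Pseudorandom +/-1 sequence derived from a secret key (hypothetical scheme)."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]

def embed_watermark(samples, key, strength=0.02):
    """Generator side: add the keyed sequence to synthetic audio at low amplitude."""
    chips = watermark_chips(key, len(samples))
    return [s + strength * c for s, c in zip(samples, chips)]

def detect_watermark(samples, key, threshold=0.01):
    """Detector side: mean correlation sits near `strength` if marked, near 0 if not."""
    chips = watermark_chips(key, len(samples))
    score = sum(s * c for s, c in zip(samples, chips)) / len(samples)
    return score > threshold, score

# Three seconds of a 220 Hz tone standing in for a voice, at an assumed 16 kHz.
SAMPLE_RATE = 16_000
voice = [0.3 * math.sin(2 * math.pi * 220 * t / SAMPLE_RATE) for t in range(3 * SAMPLE_RATE)]
synthetic = embed_watermark(voice, key="provider-secret")

marked_hit, marked_score = detect_watermark(synthetic, "provider-secret")
clean_hit, clean_score = detect_watermark(voice, "provider-secret")
```

Without the key, the mark looks like faint noise; with it, detection is a single dot product. That asymmetry is what would let a companion tool flag synthetic voices, but the scheme is only as trustworthy as the secrecy of the key, which is one reason defense and attack keep escalating.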

The Future Of Real Time AI

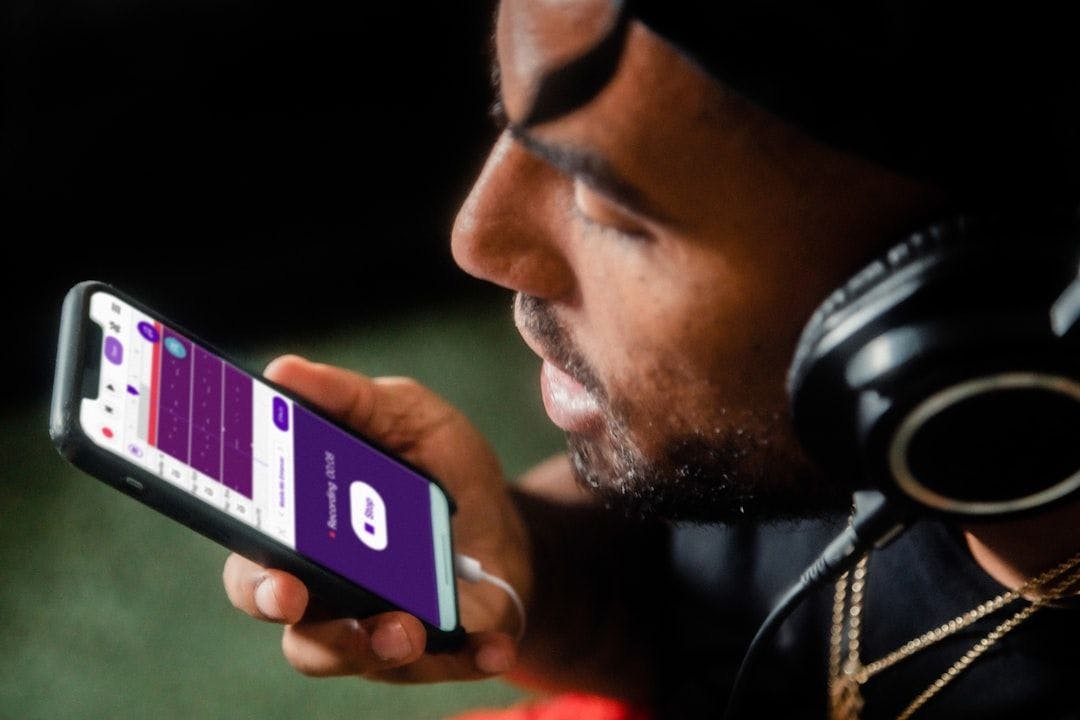

We are moving toward a world where humans talk to machines as naturally as they talk to friends. We might soon see a billion simultaneous connections between people and AI agents. That requires infrastructure that can handle bidirectional streaming with almost no delay.

Voice is effectively the first major test case for real-time AI. Unlike a text prompt, where you can wait a few seconds for a response, voice requires immediate feedback. If a voice agent hesitates for even a second, the illusion of a conversation is shattered.
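The difference between uploading a finished recording and holding a conversation is that audio and responses flow in both directions at once. The sketch below simulates that inside one process with two `asyncio` queues: the caller sends small audio frames while concurrently reading partial results. The `fake_agent` coroutine, the 16 kHz sample rate, the 20 ms frame size, and the reply-every-five-frames rule are all hypothetical stand-ins; this is not Deepgram's API, and a real system would carry the frames over a network connection such as a WebSocket.

```python
import asyncio

SAMPLE_RATE = 16_000                            # assumed samples per second
FRAME_MS = 20                                   # send audio in small frames, not one upload
FRAME_SAMPLES = SAMPLE_RATE * FRAME_MS // 1000  # 320 samples per frame

async def fake_agent(inbound, outbound):
    """Hypothetical agent: emits a partial result after every 5 frames it hears."""
    frames_heard = 0
    while True:
        frame = await inbound.get()
        if frame is None:                       # caller finished speaking
            await outbound.put(None)
            return
        frames_heard += 1
        if frames_heard % 5 == 0:
            await outbound.put(f"partial after {frames_heard * FRAME_MS} ms")

async def send_audio(samples, inbound):
    """Caller side, upstream: push frames one at a time."""
    for start in range(0, len(samples), FRAME_SAMPLES):
        await inbound.put(samples[start:start + FRAME_SAMPLES])
        await asyncio.sleep(0)                  # yield so replies can flow while we send
    await inbound.put(None)

async def receive_results(outbound):
    """Caller side, downstream: collect partial results as they arrive."""
    results = []
    while (message := await outbound.get()) is not None:
        results.append(message)
    return results

async def converse(samples):
    inbound, outbound = asyncio.Queue(), asyncio.Queue()
    agent = asyncio.create_task(fake_agent(inbound, outbound))
    # Sending and receiving run concurrently: this is the bidirectional part.
    _, results = await asyncio.gather(
        send_audio(samples, inbound), receive_results(outbound)
    )
    await agent
    return results

results = asyncio.run(converse([0.0] * SAMPLE_RATE))  # one second of silence
```

Smaller frames let the agent react sooner at the cost of more messages per second. Here the first partial result is available after 100 ms of audio instead of after the full second, which is the property a voice agent needs to avoid that conversation-breaking hesitation.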

The takeaway for developers is that voice data is finally becoming accessible. We are moving away from the days of expensive, inaccurate systems. As we build these new interfaces, we have to remember that a voice is more than just words. It carries emotion, intent, and identity. Handling that data with care is the next great challenge for the tech industry.