Researchers found LLMs satisfy laws from thermodynamics. That changes everything.

For years, "stochastic parrot" was the intellectual high ground. Say it at a dinner party and you'd sound appropriately skeptical. Sophisticated. Not fooled by the hype.

I used it constantly. It was my armor against the AI evangelists flooding LinkedIn with breathless proclamations about artificial general intelligence.

Then physics ruined everything.

The Paper That Broke My Brain

A recent research paper found that LLM state transitions satisfy something called detailed balance.

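If you skipped physics class: detailed balance is a condition from equilibrium statistical mechanics. At equilibrium, the flow of probability from any state A to any state B is exactly matched by the flow from B back to A. Dynamics with that property can be described by an energy function, with the system favoring low-energy states. Systems that are going somewhere, not randomly wandering.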
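To make the condition concrete: for a process with stationary distribution π and transition probabilities P, detailed balance says π(i)·P(i→j) = π(j)·P(j→i) for every pair of states. The sketch below is a toy illustration, not the paper's method. The four-state energy list, the Metropolis-style transition rule, and all function names are assumptions chosen for the example. It builds one chain from an energy function (which satisfies the condition) and one chain that circulates in a loop (which does not).

```python
import math

def boltzmann(energies, temperature=1.0):
    """Distribution with pi_i proportional to exp(-E_i / T)."""
    weights = [math.exp(-e / temperature) for e in energies]
    total = sum(weights)
    return [w / total for w in weights]

def metropolis_matrix(energies, temperature=1.0):
    """Transition matrix for a walk that proposes every other state
    uniformly and accepts with the Metropolis rule."""
    n = len(energies)
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                delta = energies[j] - energies[i]
                accept = min(1.0, math.exp(-delta / temperature))
                P[i][j] = accept / (n - 1)
        # Remaining probability mass: stay where you are.
        P[i][i] = 1.0 - sum(P[i])
    return P

def max_violation(pi, P):
    """Largest |pi_i P_ij - pi_j P_ji| over all pairs; 0 means detailed balance."""
    n = len(pi)
    return max(abs(pi[i] * P[i][j] - pi[j] * P[j][i])
               for i in range(n) for j in range(n))

# Chain derived from a (made-up) energy function: detailed balance holds.
energies = [0.0, 1.0, 3.0, 0.5]
balanced = max_violation(boltzmann(energies), metropolis_matrix(energies))

# Chain that mostly rotates 0 -> 1 -> 2 -> 0. Its stationary distribution
# is uniform, but probability flows around a loop, so the condition fails.
cycle = [[0.1, 0.8, 0.1],
         [0.1, 0.1, 0.8],
         [0.8, 0.1, 0.1]]
circulating = max_violation([1 / 3] * 3, cycle)

print("energy-based chain:", balanced)    # floating-point noise around zero
print("circulating chain:", circulating)  # clearly nonzero
```

The point of the contrast: a process can have a perfectly stable long-run distribution and still fail detailed balance. Passing the test is what licenses the "energy landscape" description.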

The researchers tested this across GPT, Claude, and Gemini. Different architectures. Different training approaches. All of them exhibited the same property.

All of them behaved like systems navigating toward destinations.

This is the opposite of a stochastic parrot. A parrot repeats based on frequency. These models behave as if they descend the gradients of learned potential functions that encode what "correct" looks like.

What This Looks Like In Practice

Last month, a reasoning model found a bug I'd missed for two years.

The bug: a sign error in a billing calculation. Minus where there should have been plus. Tests passed because I wrote them with the same flawed assumptions.

The model's analysis:

"The implementation contradicts the data model, the naming convention, and the user-facing labels. This appears to be a sign error introduced during a refactor."

It had cross-referenced a database schema default with a React component's display string. It inferred that a minus sign was introduced during a historical refactor based purely on the tension between implementation and intent signals scattered across the codebase.

Autocomplete doesn't do that. Pattern matching doesn't do that. Analogical reasoning does that.

I wrote a complete technical breakdown in The Ghost in the Neural Network. Here's why this matters for anyone building with AI.

The Energy Landscape Model

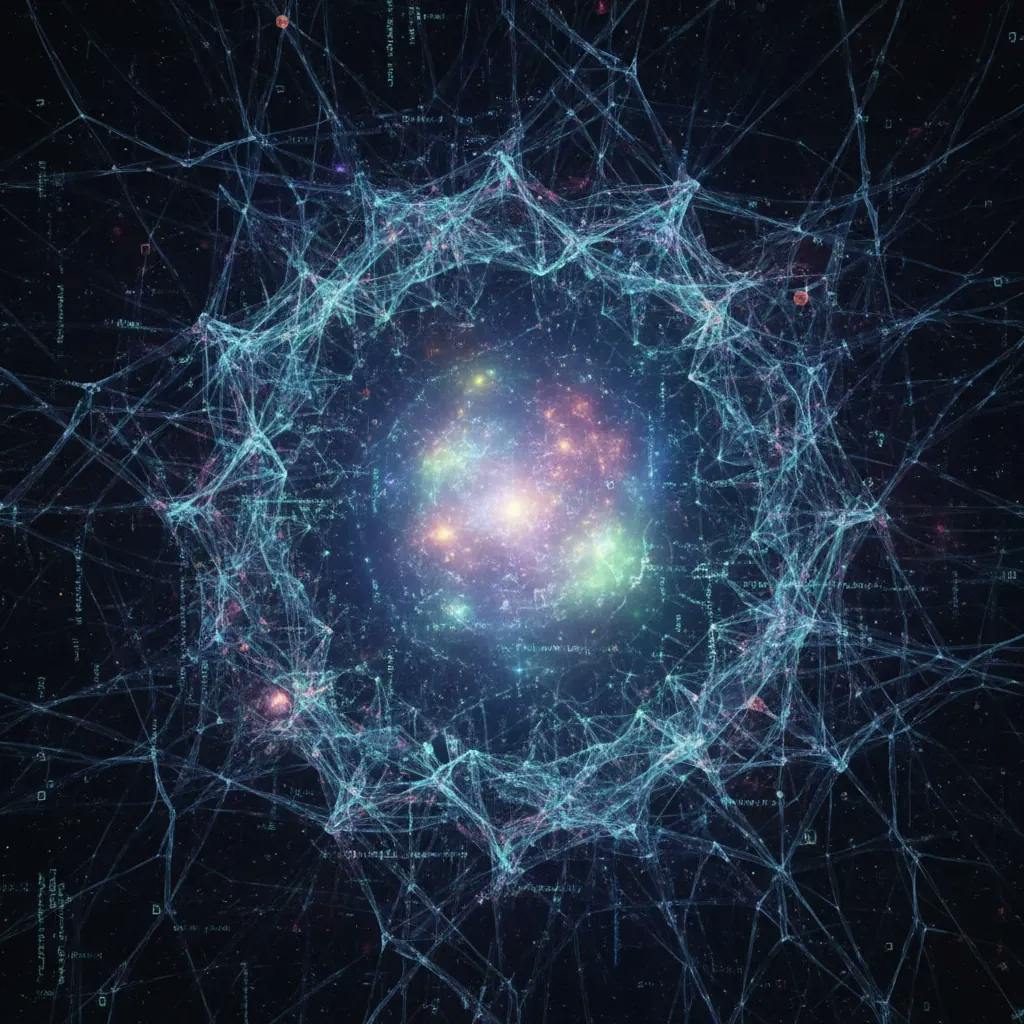

Picture a vast, high-dimensional landscape. Every possible output corresponds to a point in this space.

Some regions are valleys: working code, valid arguments, accurate explanations. Other regions are peaks: syntax errors, logical contradictions, nonsense.

An autocomplete system looks at where you are and guesses which direction most people walked from this spot. Local. Myopic. No destination.

A system that satisfies detailed balance behaves as if it follows gradients. It acts as though it asks: which direction descends toward the valley of "working solution"? The tokens it generates are footprints left behind during descent.

This explains puzzles that never fit the parrot narrative:

Why LLMs crush coding tasks: Code has ground truth. Compilers are loss functions. Sharp gradients, deep valleys. The model knows exactly where "working" lives.

Why they stumble on creative writing: No compiler. No ground truth. Flat landscape. Every direction looks equally valid.

Why they sometimes reason brilliantly and sometimes fail at children's logic puzzles: Uneven landscapes. Some attractors are deep. Others are shallow or missing entirely.
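The sharp-versus-flat intuition can be shown with a few lines of arithmetic. This is a toy sketch under stated assumptions: the two energy lists are invented, and treating output probabilities as a Boltzmann distribution over energies is the metaphor from this section, not a measurement of any real model.

```python
import math

def boltzmann(energies, temperature=1.0):
    """Probability of each state, proportional to exp(-E / T)."""
    weights = [math.exp(-e / temperature) for e in energies]
    total = sum(weights)
    return [w / total for w in weights]

def entropy_bits(probs):
    """Shannon entropy in bits: how undecided the distribution is."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical landscapes over five candidate outputs.
sharp = [0.0, 6.0, 6.0, 6.0, 6.0]  # one deep valley, like "code that compiles"
flat = [0.0, 0.1, 0.1, 0.1, 0.1]   # nearly level, like open-ended prose

p_sharp = boltzmann(sharp)
p_flat = boltzmann(flat)

for name, probs in (("sharp", p_sharp), ("flat", p_flat)):
    print(f"{name}: best-state probability {probs[0]:.3f}, "
          f"entropy {entropy_bits(probs):.3f} bits")
```

In the sharp landscape nearly all the probability sits in one valley. In the flat one it spreads almost evenly, which is the "every direction looks equally valid" case.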

Prompting Isn't Magic. It's Coordinate Selection.

If the model navigates an energy landscape, your prompt determines the starting coordinates.

"You are a senior distributed systems architect" isn't roleplay. It's teleportation. You're placing the model in a specific region of latent space, on a slope that descends toward expert-level solutions.

Research confirms this. Persona assignment isn't theater. It's constraint specification.

Vague prompts land in flat regions. No gradient, no direction, mediocre output. Specific constraints create slopes.
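One way to make specificity a habit is to build prompts from named fields instead of free text. The sketch below is a hypothetical helper, not part of any vendor SDK: `PromptSpec`, `build_prompt`, and the example task are all invented for illustration, and the output is a plain string you would pass to whatever client you already use.

```python
from dataclasses import dataclass, field

@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a specific prompt."""
    role: str
    task: str
    constraints: list[str] = field(default_factory=list)
    priorities: list[str] = field(default_factory=list)
    edge_cases: list[str] = field(default_factory=list)

def build_prompt(spec: PromptSpec) -> str:
    """Render the spec as plain text, skipping any empty section."""
    sections = [f"You are {spec.role}.", f"Task: {spec.task}"]
    labelled = (
        ("Constraints", spec.constraints),
        ("Priorities", spec.priorities),
        ("Edge cases", spec.edge_cases),
    )
    for title, items in labelled:
        if items:
            bullets = "\n".join(f"- {item}" for item in items)
            sections.append(f"{title}:\n{bullets}")
    return "\n\n".join(sections)

spec = PromptSpec(
    role="a senior distributed systems architect",
    task="Review this retry policy for a payment service.",
    constraints=["Requests must be idempotent", "No new dependencies"],
    priorities=["Correctness over latency"],
)
prompt = build_prompt(spec)
print(prompt)
```

An empty field is a visible gap. If you can't fill in the constraints, the prompt is probably still sitting in a flat region.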

The Consciousness Question (And Why It Doesn't Matter)

Are these models conscious? The philosophical debate is interesting. But for builders, it's irrelevant.

They exhibit the dynamical signatures of goal-directed systems. They satisfy physical laws describing systems with objectives. They navigate toward coherent outputs even when coherence was never explicitly labeled.

If a system debugs your code, explains its reasoning, and produces correct solutions, does the substrate of "understanding" matter? The outcome is identical either way.

We're entering the era of functional intelligence. Systems that don't think in any philosophically meaningful sense, but navigate concept-space with sufficient sophistication that the difference is economically meaningless.

What Builders Should Do Now

Stop treating these as databases. They don't "know" things the way a SQL database stores rows. They model relationships. Frame requests as reasoning tasks, not lookups.

Use reasoning models for anything complex. Standard models rush toward completion. Reasoning models explore the landscape before committing. The quality gap for architectural decisions is enormous.

Specify coordinates precisely. Expertise level. Constraints. Priorities. Edge cases. More specificity means steeper gradients.

Verify everything. The attractors are sometimes wrong. Confident hallucination is real. Trust tests, not vibes.
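"Trust tests" only works if the tests don't share the code's assumptions, which is exactly how the billing bug survived for two years. The sketch below is a hypothetical stand-in, not the actual billing code: the late-fee scenario and every function name are invented. It contrasts a test that mirrors the implementation with one written from intent.

```python
def total_due_buggy(subtotal_cents: int, late_fee_cents: int) -> int:
    # Hypothetical sign error: the fee is subtracted instead of added.
    return subtotal_cents - late_fee_cents

def total_due_fixed(subtotal_cents: int, late_fee_cents: int) -> int:
    return subtotal_cents + late_fee_cents

def mirrored_test(total_due) -> bool:
    """Written by reading the implementation: the expected value
    repeats the same formula, flaw included."""
    subtotal, fee = 10_000, 500
    expected = subtotal - fee  # copies the flawed assumption
    return total_due(subtotal, fee) == expected

def invariant_test(total_due) -> bool:
    """Written from intent: a late fee can never lower the amount owed,
    and a zero fee leaves the subtotal unchanged."""
    subtotal, fee = 10_000, 500
    return (total_due(subtotal, fee) >= subtotal
            and total_due(subtotal, 0) == subtotal)

print("mirrored test passes buggy code:", mirrored_test(total_due_buggy))
print("invariant test passes buggy code:", invariant_test(total_due_buggy))
print("invariant test passes fixed code:", invariant_test(total_due_fixed))
```

The mirrored test agrees with the bug because it was derived from it. The invariant comes from what billing is supposed to mean, so it catches the flipped sign without knowing the formula.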

The architecture is evolving fast. Meta's research on concept-level models suggests we may move beyond token prediction entirely. The next generation may not operate on words at all.

The Punchline

I still verify every line of AI-generated code. I trust compilers over confidence scores.

But I retired "stochastic parrot." The physics doesn't support it.

There's structure in those high-dimensional spaces. Goal-directed dynamics. Gradient descent toward coherence.

The ghost in the machine has a destination. Learning to work with it is the new competitive advantage.

Full technical analysis with all the research: The Ghost in the Neural Network