I remember watching a small startup scale to millions in ARR with fewer than 20 people and thinking: that’s impossible. I’d been building software the old way: headcount, sprints, standard dev cycles. Then I realized teams like that weren’t just faster; they were doing something fundamentally different. They were building systems that could think, plan, and act autonomously.

At first, I tried to force AI agents into my old mental model and treated them like fancy automation scripts. They failed spectacularly: tasks fell through the cracks, processes became inconsistent, and debugging was a nightmare. That’s when I realized that agents aren’t just tools; they demand a new kind of infrastructure. They need structure to act reliably, memory to retain context, and orchestration to coordinate their work.

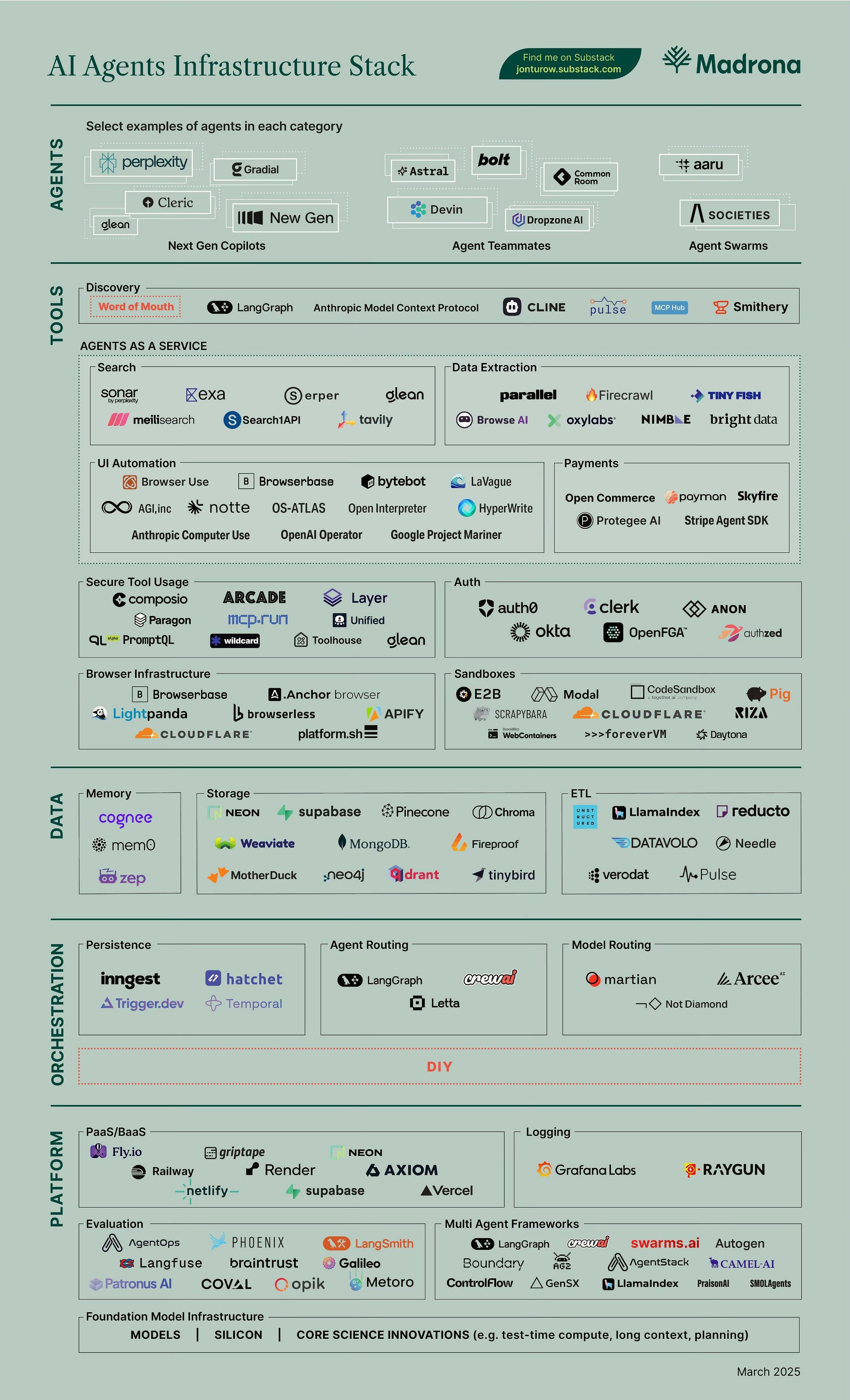

This article is about that infrastructure. I’ll walk through the three layers that make agentic systems work: tools, data, and orchestration. Not as abstract concepts, but as the foundation I’ve had to wrestle with to move from fragile automation to dependable AI workflows.

If you want to stay competitive and build AI that solves real problems instead of yet another brittle prototype, you need to understand this stack. The next few minutes could save you months of trial, error, and frustration.

1. The Tools Layer — Giving Agents the Ability to Act

The tools layer provides agents with the capabilities they need to interact with the digital world. Think of it as the “hands and senses” of an agent. Without this layer, an agent may understand what needs to be done, but it can’t execute tasks reliably.

Key functions of the tools layer include:

- Access to Applications and APIs: Agents need structured ways to communicate with software and services. This includes reading data, sending commands, and automating workflows. Standardized interfaces allow agents to use these tools consistently and predictably.

- UI Interaction: Some tasks can’t be done purely through APIs. Agents often need to navigate web pages, fill forms, or interact with visual interfaces. This requires a framework that interprets the page layout and controls, allowing the agent to act as a human would.

- Authentication and Security: When an agent acts on a user’s behalf, managing credentials and permissions becomes critical. The tools layer ensures that agents operate securely and only access what they are allowed to.

- Tool Discovery and Integration: Agents must find and select the right tools for a given task. Protocols like Anthropic’s Model Context Protocol (MCP) provide a standardized way for agents to understand what each tool can do and how to use it. Because this infrastructure is layered, developers can either build directly on such protocols or use managed services that handle the integration for them, which reduces errors and speeds up adoption.
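To make discovery, permissions, and predictable invocation more concrete, here is a minimal, hypothetical sketch of a tool registry. It does not use MCP or its SDKs (MCP itself is a JSON-RPC-based protocol); it only borrows the idea that each tool advertises a name, a description, and an input schema. `Tool`, `ToolRegistry`, `required_scope`, and the `send_email` tool are invented for illustration. The agent finds tools by description, and every call is checked against the agent's granted permission scopes and the declared schema before anything runs.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    # Hypothetical tool description, loosely modeled on how MCP servers
    # advertise a tool: a name, a description, and an input schema.
    name: str
    description: str
    input_schema: dict[str, type]  # parameter name -> expected Python type
    required_scope: str            # permission the calling agent must hold
    handler: Callable[..., Any]


@dataclass
class ToolRegistry:
    tools: dict[str, Tool] = field(default_factory=dict)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def discover(self, keyword: str) -> list[str]:
        """Names of tools whose description mentions the keyword."""
        kw = keyword.lower()
        return [t.name for t in self.tools.values() if kw in t.description.lower()]

    def call(self, name: str, granted_scopes: set[str], **kwargs: Any) -> Any:
        tool = self.tools[name]
        # Security: refuse any call outside the agent's granted permissions.
        if tool.required_scope not in granted_scopes:
            raise PermissionError(f"{name} requires scope {tool.required_scope!r}")
        # Predictability: arguments must match the declared schema exactly.
        if set(kwargs) != set(tool.input_schema):
            raise ValueError(f"{name} expects arguments {sorted(tool.input_schema)}")
        for arg, expected in tool.input_schema.items():
            if not isinstance(kwargs[arg], expected):
                raise TypeError(f"{arg} must be of type {expected.__name__}")
        return tool.handler(**kwargs)


registry = ToolRegistry()
registry.register(Tool(
    name="send_email",
    description="Send an email to a recipient",
    input_schema={"to": str, "body": str},
    required_scope="email:send",
    handler=lambda to, body: f"sent to {to}",  # stand-in for a real email API
))
print(registry.discover("email"))  # ['send_email']
print(registry.call("send_email", {"email:send"}, to="ana@example.com", body="Hi"))  # sent to ana@example.com
```

The design choice worth noting is that validation and authorization live in the registry rather than in each tool, so every tool an agent can reach gets the same checks.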

The tools layer defines what agents can do and ensures they act reliably, securely, and in context. Every agent-driven workflow is built on top of it.

2. The Data Layer — Giving Agents Memory and Context

The data layer is where AI agents get the ability to remember, reason, and act intelligently over time. Unlike traditional software, agents need context: what happened in previous interactions, what preferences users have, and what decisions have already been made. Without this, agents are short-sighted and often ineffective.

Key functions of the data layer include:

- Memory Systems: These allow agents to retain relevant information from past interactions, such as user instructions and preferences, previous outputs, or the state of an ongoing task. This is what lets an agent pick up where it left off and make decisions based on history rather than starting from scratch each time.

- Databases and Storage: Agents often generate, read, and update structured or unstructured data at scale. The data layer needs to handle instant provisioning, automatic scaling, and isolated environments to support multiple agents working simultaneously without conflicts.

- Data Transformation and Integration: Many agent tasks involve unstructured inputs such as documents, emails, or logs. ETL pipelines and processing services convert these into structured formats the agents can reason about, making it possible to analyze, rank, or automate actions efficiently.

- Context-Aware Access: The data layer isn’t just storage — it provides contextual intelligence. For example, an agent querying a database or memory system needs to understand relationships between different data points, remember previous queries, and use that context to generate accurate results or recommendations.
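A minimal in-process sketch can show what "memory" and "context-aware access" mean in practice. Everything here is hypothetical: `AgentMemory`, `recall`, `save_progress`, and `resume` are illustrative names, and a real data layer would sit on a database and typically use embedding-based retrieval rather than the crude word-overlap scoring used below. The sketch keeps a per-user log of past interactions, retrieves the entries most relevant to a new query, and checkpoints task state so an agent can resume a multi-step task.

```python
import re
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any


def _tokens(text: str) -> set[str]:
    # Crude word-overlap matching; a stand-in for embedding-based retrieval.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class AgentMemory:
    # Per-user log of past interactions, instructions, and preferences.
    events: defaultdict[str, list[str]] = field(default_factory=lambda: defaultdict(list))
    # Per-task checkpoints so an agent can pick up where it left off.
    task_state: dict[str, dict[str, Any]] = field(default_factory=dict)

    def remember(self, user: str, text: str) -> None:
        self.events[user].append(text)

    def recall(self, user: str, query: str, k: int = 2) -> list[str]:
        """Up to k past entries for this user that share the most words with the query."""
        q = _tokens(query)
        scored = [(len(q & _tokens(e)), i, e) for i, e in enumerate(self.events[user])]
        relevant = [s for s in scored if s[0] > 0]
        # Most overlapping words first; ties go to the more recent entry.
        relevant.sort(key=lambda s: (s[0], s[1]), reverse=True)
        return [e for _, _, e in relevant[:k]]

    def save_progress(self, task_id: str, **state: Any) -> None:
        self.task_state.setdefault(task_id, {}).update(state)

    def resume(self, task_id: str) -> dict[str, Any]:
        return dict(self.task_state.get(task_id, {}))


memory = AgentMemory()
memory.remember("ana", "Prefers weekly reports as PDF")
memory.remember("ana", "Asked for the Q3 sales summary")
print(memory.recall("ana", "weekly reports format"))  # ['Prefers weekly reports as PDF']

memory.save_progress("q3-report", step="collect_data", rows_loaded=1200)
memory.save_progress("q3-report", step="draft_summary")
print(memory.resume("q3-report"))  # {'step': 'draft_summary', 'rows_loaded': 1200}
```

Only the relevant slice of history is returned, which is the point: an agent's prompt stays small while its decisions still reflect what happened before.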

The data layer is essential because it turns an agent from a reactive tool into a proactive assistant. Without it, agents can perform tasks, but they can’t scale in complexity, maintain consistency over time, or handle multi-step processes reliably. By providing memory, structured storage, and context-aware processing, the data layer forms the backbone of intelligent agent behavior.

3. The Orchestration Layer — Coordinating Agents and Workflows

The orchestration layer ensures that multiple agents can work together efficiently and reliably to accomplish complex, multi-step tasks. Think of it as the “traffic control and project manager” for agent operations. Without this layer, agents may duplicate efforts, conflict with each other, or fail to complete workflows that span multiple steps or systems.

Key functions of the orchestration layer include:

- Workflow Sequencing: Orchestration defines the order of tasks across agents, ensuring each agent knows what to do next and when to act. This is critical for multi-step processes where the output of one agent is the input for another.

- State and Progress Management: Many workflows run over extended periods or require long-term context. Orchestration tracks the state of each task, maintains continuity, and ensures workflows can resume accurately even if an agent or system restarts.

- Collaboration and Communication: Agents often need to share data, intermediate results, or decisions. Orchestration coordinates these exchanges to prevent conflicts, redundancy, or missed dependencies, allowing agents to operate as a cohesive system.

- Scalability and Parallelization: Orchestration lets multiple agents handle workloads more complex than any single agent could manage alone. It runs independent tasks in parallel where possible and synchronizes dependent ones, so workflows can scale without losing reliability.
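The four functions above can be illustrated together in a small sketch built on Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) and a thread pool. It is a hypothetical illustration, not a production orchestrator: `run_workflow`, the step names, and the plain-dict `checkpoint` are assumptions, and a real system would persist checkpoints durably instead of in memory. Steps run in dependency order (sequencing), each wave of ready steps runs concurrently (parallelization), results are passed to dependent steps (communication), and completed steps are recorded so a re-run resumes rather than restarts (state management).

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter
from typing import Any, Callable

# Each step receives the results of the steps it depends on.
Step = Callable[[dict[str, Any]], Any]


def run_workflow(
    steps: dict[str, Step],
    deps: dict[str, set[str]],
    checkpoint: dict[str, Any],
    max_workers: int = 4,
) -> dict[str, Any]:
    """Run steps in dependency order, each ready wave in parallel.

    `checkpoint` holds results of finished steps; steps already present are
    skipped, so re-running after a failure resumes instead of restarting.
    """
    sorter = TopologicalSorter({name: deps.get(name, set()) for name in steps})
    sorter.prepare()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            ready = sorter.get_ready()
            todo = [n for n in ready if n not in checkpoint]
            # Steps whose dependencies are all satisfied run concurrently.
            futures = {
                n: pool.submit(steps[n], {d: checkpoint[d] for d in deps.get(n, set())})
                for n in todo
            }
            for n, fut in futures.items():
                checkpoint[n] = fut.result()  # record progress after each step
            sorter.done(*ready)
    return checkpoint


steps: dict[str, Step] = {
    "fetch_orders": lambda _: [120, 80, 200],
    "fetch_refunds": lambda _: [30],
    "net_revenue": lambda r: sum(r["fetch_orders"]) - sum(r["fetch_refunds"]),
    "report": lambda r: f"Net revenue: {r['net_revenue']}",
}
deps = {"net_revenue": {"fetch_orders", "fetch_refunds"}, "report": {"net_revenue"}}
state: dict[str, Any] = {}
print(run_workflow(steps, deps, state)["report"])  # Net revenue: 370
```

Here `fetch_orders` and `fetch_refunds` have no dependencies, so they run side by side; `net_revenue` waits for both, and `report` waits for `net_revenue`.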

The orchestration layer is critical because it transforms individual agent actions into coordinated, repeatable workflows. It ensures agents can collaborate, manage dependencies, and handle complex processes consistently, making large-scale automation feasible and dependable.

Conclusion

The first thing I learned working with agentic AI is that speed alone isn’t scale. Without structure, memory, and coordination, even the smartest agent is just a brittle experiment. The three-layer stack of tools, data, and orchestration forces you to design systems that actually work, not just look intelligent.

There are no shortcuts. Each layer comes with trade-offs: integrating tools is complex, managing context requires careful planning, and orchestration can slow things down if mismanaged. But without them, multi-agent systems fail quietly and unpredictably, and you’re left blaming the model instead of your design.

The paradox is clear:

The more discipline you impose, the smarter your AI feels.

AI doesn’t think for you; it only amplifies the thinking you put into the system. A brilliant model won’t save you if your design is fragile, and a simple agent can outperform expectations if your infrastructure is solid.

Intelligence isn’t a feature of the agent: it’s a property of the system you build around it.

And understanding that distinction is the difference between fragile prototypes and systems that can actually handle real-world complexity.