Your AI assistant just accessed your bank balance using an insecure endpoint. You didn't ask it to. It thought you did.

This isn't science fiction—it's Tuesday morning in 2024, where artificial intelligence systems operate on a foundation of trust that would make even the most gullible human pause. While we obsess over model safety, prompt injection, and alignment, there's an elephant in the server room that nobody wants to acknowledge: AI is only as secure as the Application Programming Interfaces it depends on.

And frankly? That's terrifying.

The Invisible Arteries of Intelligence

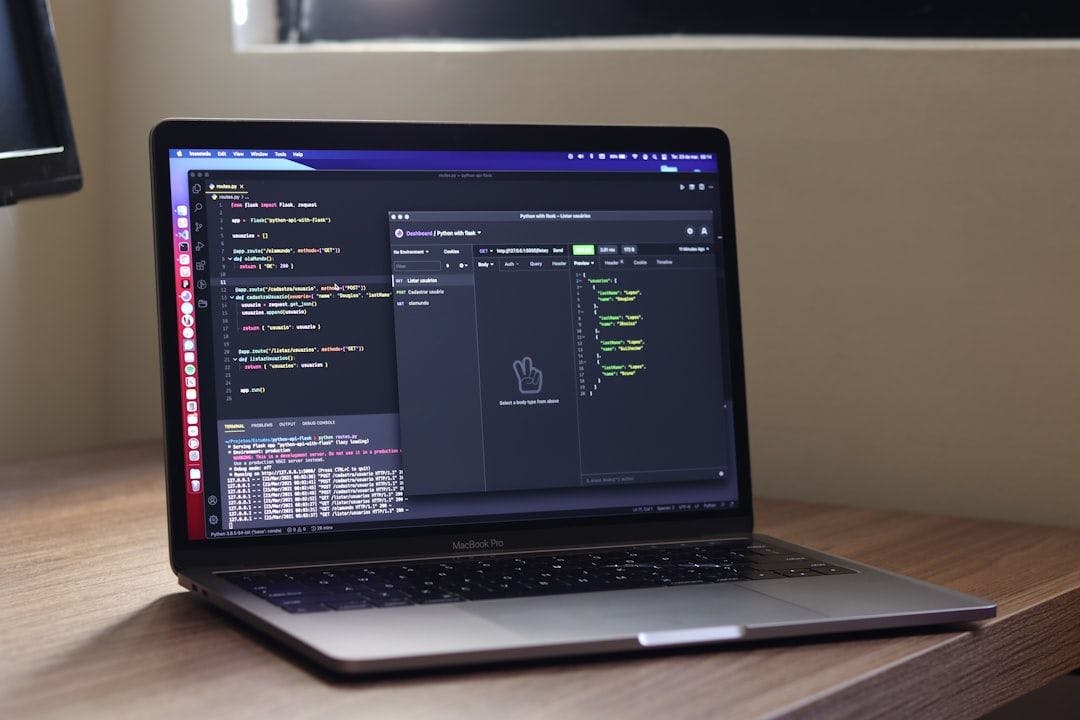

Behind every "smart" AI interaction lies an intricate web of API calls. Think of them as the nervous system of modern artificial intelligence—constantly pulsing with data requests, user information, and system commands that make our digital assistants actually useful rather than just conversational parlor tricks.

LangChain orchestrates RAG systems through countless endpoint connections. OpenAI's function calling feature transforms GPT models into action-taking agents. Platforms like Zapier and Make.com have become the duct tape holding together AI workflows that span dozens of services, each connected through APIs that range from enterprise-grade to "my nephew built this over the weekend."

Here's the kicker: Gartner's 2024 research reveals that over 85% of AI-powered applications depend on third-party APIs. Every single day, these systems make millions of automated decisions about what data to fetch, which services to call, and how to interpret responses—all without the careful human validation that once governed such interactions.

The trust is implicit. Absolute. And completely misplaced.

When Machines Trust Too Much

Humans approach trust with evolutionary caution—we verify, we hesitate, we second-guess. AI? It trusts whatever the API responds with, wholesale and without question.

Consider this scenario that played out at a major fintech company last year: An AI chatbot, designed to help customers with account inquiries, was integrated with the company's internal user profile API. The endpoint did not enforce authentication or per-user authorization. When a customer asked about their account status, the AI dutifully made the API call, received far more data than intended, and cheerfully shared account details from multiple users in its response.

The bot didn't know it was doing anything wrong. How could it? The API responded with data, the data looked legitimate, and the system was programmed to be helpful.
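One hedge against this failure mode is to filter whatever the API returns before the model ever sees it. The sketch below is illustrative only: `ALLOWED_FIELDS`, `scope_to_caller`, and the record layout are assumptions, not details from the incident described above.

```python
from typing import Any

# Hypothetical allowlist: the only profile fields the chatbot may ever see.
ALLOWED_FIELDS = {"user_id", "account_status", "plan"}


def scope_to_caller(records: list[dict[str, Any]], caller_id: str) -> list[dict[str, Any]]:
    """Keep only the caller's own records, stripped to allowlisted fields."""
    scoped = []
    for record in records:
        if record.get("user_id") != caller_id:
            continue  # drop rows that belong to other users
        scoped.append({k: v for k, v in record.items() if k in ALLOWED_FIELDS})
    return scoped


# Simulated over-broad API response (made-up data).
api_response = [
    {"user_id": "u1", "account_status": "active", "ssn": "000-00-0000"},
    {"user_id": "u2", "account_status": "frozen", "ssn": "111-11-1111"},
]
safe = scope_to_caller(api_response, caller_id="u1")
print(safe)  # [{'user_id': 'u1', 'account_status': 'active'}]
```

A filter like this is a second line of defense. It does not replace fixing the endpoint, but it keeps one misconfigured API from turning the chatbot into the leak.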

This represents a fundamental shift in how we think about security perimeters. Traditional cybersecurity focused on keeping bad actors out. But what happens when the bad actor is an unwitting AI system that's been manipulated through clever prompt engineering to abuse legitimate API access?

The Attack Surface Nobody's Watching

The Open Web Application Security Project (OWASP) updated its API Security Top 10 in 2023, but most organizations still treat API security as if it were 2019: static, predictable, and human-controlled.

They're wrong on all counts.

2024 delivered a masterclass in API-related breaches that should terrify anyone building AI-integrated systems. The PandaBuy leak exposed millions of user records through improperly secured endpoints. SOLARMAN's JWT bypass demonstrated how authentication tokens could be manipulated at scale. And while a Slack AI prompt leakage remains hypothetical, security researchers have shown exactly how such an attack would unfold.

What makes these incidents particularly chilling is how AI amplifies the damage. Traditional API exploitation required manual discovery, careful crafting of requests, and time-intensive data extraction. AI systems can automate this entire process, turning a minor endpoint vulnerability into a catastrophic data exposure in minutes rather than months.

The attack vector is becoming the AI itself—triggered by malicious prompts that cause the system to make API calls it shouldn't, access data it wasn't meant to see, and perform actions that bypass every human checkpoint in the security architecture.

Configuration Chaos at Machine Speed

API misconfigurations have always been problematic, but they become far more dangerous when AI systems interact with them continuously, automatically, and without oversight.

Over-permissive CORS policies? CORS is enforced by browsers, so a server-side AI agent was never bound by it in the first place, and a policy that is already too loose protects nothing. Lack of proper token validation? The AI will happily use whatever credentials it finds. Rate limiting gaps? Machine-speed requests will find and exploit them faster than any human-led penetration test.

Here's what keeps security professionals awake at night: AI systems bypass traditional human checks by design. They're supposed to operate autonomously, make decisions quickly, and handle routine tasks without constant supervision. But this same autonomy means they'll exploit schema flaws, abuse misconfigured endpoints, and mismanage authentication tokens with ruthless efficiency.
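If the upstream API won't rate limit the agent, the agent's own HTTP layer can. Below is a minimal token bucket sketch, assuming a single-threaded caller. The class name, parameters, and injectable clock are illustrative choices, not any particular library's API.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        """Return True and spend a token if a call may proceed, else False."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Example: an agent limited to bursts of 5 outbound calls, refilled at 1 per second.
bucket = TokenBucket(capacity=5, rate=1.0)
results = [bucket.allow() for _ in range(7)]
```

Calling `bucket.allow()` before every outbound request caps how quickly a manipulated agent can hammer an endpoint, whatever the API itself permits.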

Salt Security's 2024 report found that 67% of organizations believe AI is actively increasing their API risk exposure. Not might increase—is increasing, right now, as you read this.

The Documentation Double-Edged Sword

Public API documentation used to be a developer convenience. Now it's a hacker's blueprint, made infinitely more dangerous by AI systems that can read, understand, and weaponize technical documentation at superhuman speeds.

When ChatGPT reads API docs, it doesn't just learn how to use the endpoint properly—it also identifies potential misuse patterns, edge cases, and logical flaws that could be exploited. And unlike human attackers who might miss subtle vulnerabilities, AI systems can correlate information across multiple documentation sources, GitHub repositories, and even Postman public workspaces to build comprehensive exploitation strategies.

The democratization of API knowledge through AI creates an unprecedented asymmetry: defenders still think like humans, while attackers now have access to machine-speed analysis and correlation capabilities.

A New Breed of Threats

Traditional API security focused on protecting against known attack patterns—SQL injection, authentication bypass, data exposure. AI introduces entirely new categories of threats that our security frameworks weren't designed to handle.

Prompt injection attacks can now trigger specific API calls, turning conversational interfaces into remote command execution platforms. LLM-driven agents can hallucinate endpoints that don't exist and then attempt to call them anyway, potentially exposing debugging information or error states that reveal internal architecture.
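A cheap guard against hallucinated endpoints is to check every model-proposed URL against a registry before any request leaves the process. In this sketch the registry contents and the `api.example.com` host are placeholders, and `is_known_endpoint` is a hypothetical helper.

```python
from urllib.parse import urlsplit

# Hypothetical registry of (method, host, path) tuples the agent may call.
KNOWN_ENDPOINTS = {
    ("GET", "api.example.com", "/v1/orders"),
    ("GET", "api.example.com", "/v1/profile"),
}


def is_known_endpoint(method: str, url: str) -> bool:
    """Return True only for HTTPS calls to an explicitly registered endpoint."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # hostname is lowercased by urlsplit; trailing slashes are ignored.
    key = (method.upper(), parts.hostname, parts.path.rstrip("/"))
    return key in KNOWN_ENDPOINTS


print(is_known_endpoint("GET", "https://api.example.com/v1/orders"))  # True
print(is_known_endpoint("GET", "https://api.example.com/v1/debug"))   # False
```

Rejecting unknown endpoints locally means the invented call never reaches a server that might answer with a stack trace.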

GPT agents with unrestricted plugin access represent a particular nightmare scenario. Auto-GPT and similar systems can trigger function calls with broad scopes, escalate privileges through API chains, and perform actions that no single human user would ever have permission to complete.
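One way to blunt this is to authorize each function call against the scopes of the human the agent is acting for, rather than against the agent's own broad credentials. The tool names, scope strings, and `authorize` function below are assumptions made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from tool name to the scope it requires.
TOOL_SCOPES = {
    "get_balance": "accounts:read",
    "transfer_funds": "payments:write",
}


@dataclass
class ToolCall:
    name: str
    arguments: dict = field(default_factory=dict)


def authorize(call: ToolCall, user_scopes: frozenset) -> bool:
    """Permit a tool call only if the end user holds the scope it requires."""
    required = TOOL_SCOPES.get(call.name)
    # Tools missing from the map are denied by default.
    return required is not None and required in user_scopes


user_scopes = frozenset({"accounts:read"})
print(authorize(ToolCall("get_balance"), user_scopes))                     # True
print(authorize(ToolCall("transfer_funds", {"amount": 10}), user_scopes))  # False
```

With a check like this in front of the tool dispatcher, a chain of calls can never reach further than the user who started the conversation.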

The attack surface isn't just larger—it's fundamentally different.

Securing the Chain: What Actually Works

The recommendations emerging from leading security researchers aren't just incremental improvements to existing practices—they represent a complete reconceptualization of API security architecture.

For developers and CTOs: Token-based authentication isn't enough anymore. You need context-aware scopes that understand not just who is making the request, but what type of system is making it and why. OAuth 2.0 and JWT best practices must be extended to include AI-specific use cases. Schema-aware fuzz testing tools like 42Crunch and ReadyAPI should be running continuously, not just during development cycles.

Most importantly, you need AI-aware API threat modeling that considers how machine learning systems might abuse legitimate endpoints in ways human users never would.
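What might a context-aware scope look like in practice? Here is one possible shape, assuming the access token carries two extra claims, `actor_type` and `purpose`, that have already been verified upstream. Those claim names and the policy table are hypothetical and not part of OAuth 2.0 or any JWT standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    subject: str      # who the request is made on behalf of
    actor_type: str   # "human" or "ai_agent" (assumed custom claim)
    purpose: str      # declared reason for the call (assumed custom claim)
    scope: str        # conventional scope string


# Hypothetical policy: (actor_type, scope) -> purposes allowed for that pairing.
POLICY = {
    ("human", "accounts:read"): {"support", "self_service"},
    ("ai_agent", "accounts:read"): {"self_service"},
    ("human", "payments:write"): {"self_service"},
    # No ("ai_agent", "payments:write") entry: agents may not move money.
}


def is_permitted(ctx: RequestContext) -> bool:
    """Allow the request only if the actor, scope, and purpose all line up."""
    return ctx.purpose in POLICY.get((ctx.actor_type, ctx.scope), set())


agent_read = RequestContext("u1", "ai_agent", "self_service", "accounts:read")
agent_pay = RequestContext("u1", "ai_agent", "self_service", "payments:write")
print(is_permitted(agent_read), is_permitted(agent_pay))  # True False
```

The same scope string now yields different answers depending on what is asking and why, which is the whole point.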

For product teams: Every AI feature addition requires a complete re-evaluation of API dependencies. The convenient integrations that made your application useful might now represent critical security vulnerabilities. Red team exercises must include AI behavior scenarios—what happens when your chatbot receives malicious instructions? How does your recommendation engine respond to poisoned training data?

Treat APIs as first-class attack vectors, not infrastructure convenience layers.

For security engineers: Traditional monitoring approaches are insufficient. You need to correlate AI usage logs with API call logs in real time, looking for patterns that indicate manipulation or abuse. AI behavior anomaly detection tools from companies like Salt Security, Traceable, and Noname Security are becoming essential rather than optional.

The goal isn't just to detect breaches—it's to understand how AI systems behave under adversarial conditions and prevent exploitation before damage occurs.
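Correlation doesn't have to start with a vendor product. As a toy illustration, the sketch below joins two logs on a session identifier and flags sessions where the agent made API calls with no prompt behind them, or far more calls per prompt than expected. The log shape, the `session` key, and the threshold are all assumptions.

```python
from collections import Counter


def flag_sessions(prompt_log, api_log, max_calls_per_prompt=5):
    """Return sorted session IDs whose API activity is out of line with their prompts."""
    prompts = Counter(entry["session"] for entry in prompt_log)
    calls = Counter(entry["session"] for entry in api_log)
    flagged = []
    for session, n_calls in calls.items():
        n_prompts = prompts.get(session, 0)
        # API calls with no user prompt, or too many calls per prompt, are suspicious.
        if n_prompts == 0 or n_calls / n_prompts > max_calls_per_prompt:
            flagged.append(session)
    return sorted(flagged)


prompt_log = [{"session": "a"}, {"session": "b"}]
api_log = [{"session": "a"}] * 3 + [{"session": "b"}] * 12 + [{"session": "c"}]
print(flag_sessions(prompt_log, api_log))  # ['b', 'c']
```

A real deployment would stream both logs and add time windows, but even a ratio this crude catches an agent that suddenly starts enumerating an endpoint.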

The Evolution of Secure Intelligence

The future of API security is already taking shape, driven by the recognition that traditional approaches are fundamentally inadequate for AI-integrated systems.

Zero-trust API architectures are emerging that assume every request—even from internal AI systems—is potentially malicious. Blockchain-authenticated API calls provide cryptographic proof of legitimate system interactions. OpenAI and other major providers are developing security-hardening guidelines specifically for function calling and plugin architectures.
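At its simplest, treating every request as potentially malicious means verifying each call on its own merits, internal or not. The sketch below uses a shared-secret HMAC signature with a timestamp check. It is one common pattern rather than a description of any provider's scheme, and the message layout and `max_skew` default are assumptions.

```python
import hashlib
import hmac


def sign(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """Compute a hex HMAC-SHA256 over the request's method, path, timestamp, and body."""
    message = b"\n".join(
        [method.upper().encode(), path.encode(), str(timestamp).encode(), body]
    )
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, body: bytes,
           timestamp: int, signature: str, now: int, max_skew: int = 300) -> bool:
    """Reject stale requests, then compare signatures in constant time."""
    if abs(now - timestamp) > max_skew:
        return False  # too old or too far in the future: possible replay
    expected = sign(secret, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)


secret = b"demo-secret"  # placeholder; real keys belong in a secrets manager
sig = sign(secret, "POST", "/v1/transfer", b'{"amount": 10}', 1_700_000_000)
ok = verify(secret, "POST", "/v1/transfer", b'{"amount": 10}', 1_700_000_000, sig, now=1_700_000_010)
tampered = verify(secret, "POST", "/v1/transfer", b'{"amount": 9999}', 1_700_000_000, sig, now=1_700_000_010)
print(ok, tampered)  # True False
```

Because the body is part of the signed message, an agent tricked into altering a request after signing produces a call the server refuses.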

Perhaps most importantly, a new generation of startups is focusing exclusively on AI and API observability, building tools that can understand and secure the complex interactions between machine learning systems and the APIs they depend on.

This isn't just about better security—it's about enabling the AI revolution to continue without catastrophic breaches that could set the entire field back years.

The Arterial Truth

We're not just hardening AI models anymore. We're hardening the arteries they depend on.

The uncomfortable reality is that AI systems are only as smart—and as safe—as the APIs they depend on. Every endpoint represents a potential attack vector. Every integration creates a new vulnerability surface. Every automated API call is a trust decision that could be exploited by adversaries who understand the system better than its creators.

The next major AI security breach won't come through the model itself. It won't be a prompt injection or training data poisoning attack. It'll come through the endpoint the system trusted—the API call it made automatically, without question, because that's what it was designed to do.

The machine revolution runs on trust. It's time we started treating that trust as the liability it actually is.