Authors:

(1) Samson Yu, Dept. of Computer Science, National University of Singapore ([email protected]);

(2) Kelvin Lin, Dept. of Computer Science, National University of Singapore;

(3) Anxing Xiao, Dept. of Computer Science, National University of Singapore;

(4) Jiafei Duan, University of Washington;

(5) Harold Soh, Dept. of Computer Science, National University of Singapore and NUS Smart Systems Institute ([email protected]).

Table of Links

- Abstract and I. Introduction

- II. Related Work

- III. PhysiClear - Tactile and Physical Understanding Training & Evaluation Suite

- IV. Octopi - Vision-Language Property-Guided Physical Reasoning

- V. Experimental Setup

- VI. Experimental Results

- VII. Ablations

- VIII. Conclusion and Discussion, Acknowledgements, and References

- Appendix for Octopi: Object Property Reasoning with Large Tactile-Language Models

- APPENDIX A: ANNOTATION DETAILS

- APPENDIX B: OBJECT DETAILS

- APPENDIX C: PROPERTY STATISTICS

- APPENDIX D: SAMPLE VIDEO STATISTICS

- APPENDIX E: ENCODER ANALYSIS

- APPENDIX F: PG-INSTRUCTBLIP AVOCADO PROPERTY PREDICTION

V. EXPERIMENTAL SETUP

In this section, we evaluate the physical property prediction and reasoning capabilities of our proposed method. We design several experiments to answer the following questions:

-

Are our physical property predictions useful for OCTOPI to reason about everyday scenarios?

-

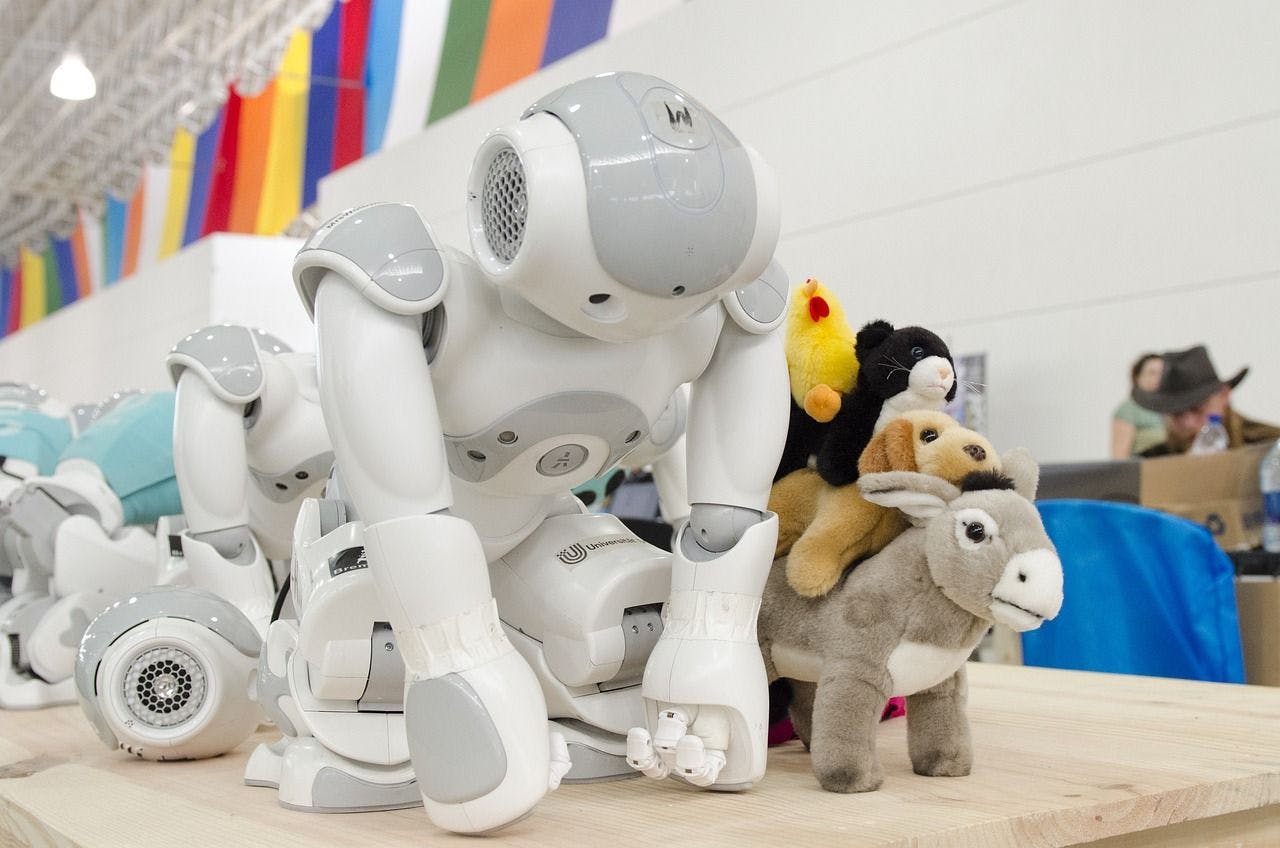

Can OCTOPI be used in real robots to help them accomplish tasks using physical reasoning?

-

Can OCTOPI’s understanding of the physical properties generalize to the unseen daily life objects?

A. Data Processing

The tactile videos were split into frames. For efficiency, we kept only the salient frames: those whose total pixel intensity difference from the preceding frame is in the top 30%. During training, we randomly sampled 5 of these salient frames; during evaluation, we selected 5 frames at uniform intervals starting from the first salient frame. Data augmentation was applied during training in the form of random horizontal and vertical flips, each with a 50% chance.
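The frame selection and augmentation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: all function names are hypothetical, the "total pixel intensity difference" is taken to be the sum of absolute per-pixel differences, "uniform intervals" is interpreted as even spacing over the ordered list of salient frames, sampled training frames are kept in temporal order, and each flip is applied to the whole clip rather than frame by frame.

```python
import numpy as np


def salient_frame_indices(frames, top_fraction=0.3):
    """Return sorted indices of frames whose difference from the preceding
    frame falls in the top `top_fraction` (assumes at least 2 frames).

    frames: array of shape (T, H, W) or (T, H, W, C).
    Assumption: the difference is the sum of absolute pixel differences.
    """
    frames = np.asarray(frames, dtype=np.float32)
    # diffs[i] compares frame i + 1 with frame i.
    diffs = np.abs(frames[1:] - frames[:-1]).reshape(len(frames) - 1, -1).sum(axis=1)
    k = max(1, int(round(top_fraction * len(diffs))))
    # Shift by 1 because frame 0 has no preceding frame.
    top = np.argsort(diffs)[-k:] + 1
    return np.sort(top)


def sample_train_indices(salient, rng, n=5):
    """Randomly pick n salient frames (training).

    Assumption: sample without replacement when possible and keep
    the chosen frames in temporal order.
    """
    replace = len(salient) < n
    return np.sort(rng.choice(salient, size=n, replace=replace))


def sample_eval_indices(salient, n=5):
    """Pick n salient frames at even spacing, starting from the first one.

    Assumption: spacing is taken over the ordered salient-frame list, so
    frames repeat when there are fewer than n salient frames.
    """
    positions = np.round(np.linspace(0, len(salient) - 1, n)).astype(int)
    return np.asarray(salient)[positions]


def random_flips(clip, rng, p=0.5):
    """Apply horizontal and vertical flips independently, each with probability p.

    clip: array of shape (T, H, W) or (T, H, W, C).
    Assumption: a flip is applied to every frame of the clip at once.
    """
    if rng.random() < p:
        clip = clip[:, :, ::-1]  # horizontal flip (width axis)
    if rng.random() < p:
        clip = clip[:, ::-1]  # vertical flip (height axis)
    return clip
```

For a training sample, one would call `salient_frame_indices`, then `sample_train_indices` with a `numpy.random.Generator`, index the video with the result, and pass the clip through `random_flips`. For evaluation, `sample_eval_indices` replaces the random sampling and no flips are applied.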

B. Training Hyperparameters

C. Training Requirements

Encoder fine-tuning took 6 hours and required less than 5 GB of GPU VRAM. Tactile feature alignment together with end-to-end fine-tuning took 5 hours for OCTOPI-7b and 6.5 hours for OCTOPI-13b. We used one NVIDIA RTX A6000 for OCTOPI-7b and two NVIDIA RTX A6000s for OCTOPI-13b.

This paper is available on arXiv under the CC BY 4.0 DEED license.