If you’ve ever sat down to prep for an LLM interview and ended up with 30 tabs open on attention mechanisms, LoRA, and tokenization — you’re not alone.

I’ve been there too. What started as a quick study session would often spiral into a maze of overlapping blog posts, dense research papers, and forum threads that answered everything except the question I was trying to understand.

It wasn’t that the information wasn’t out there — it was. But it was scattered, overly academic, or buried in jargon. And when you’re trying to get interview-ready (or just understand how things really work), that kind of noise doesn’t help. It slows you down.

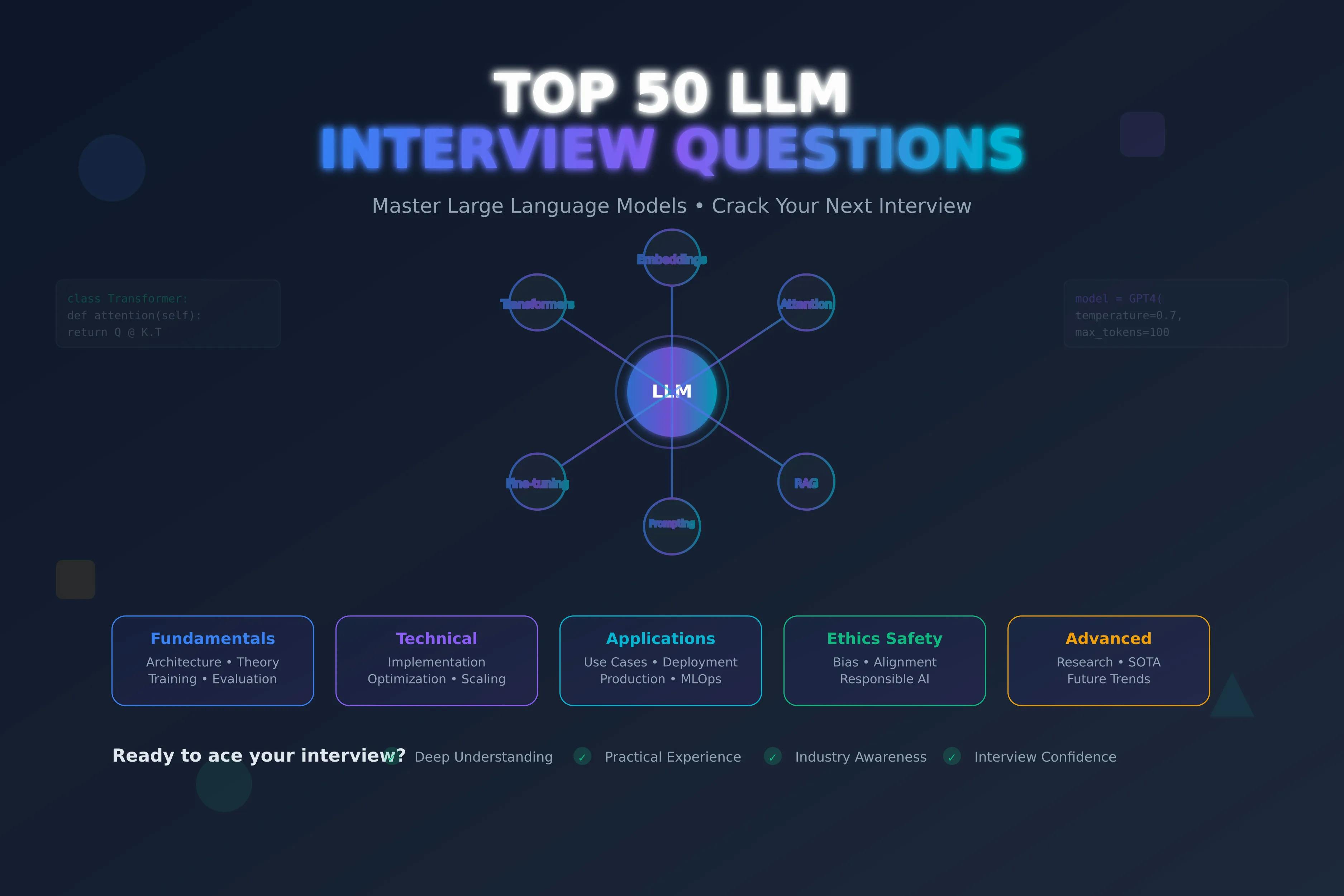

That’s exactly why I pulled together this guide: a curated list of the 50 most relevant Large Language Model (LLM) questions — the ones that consistently come up in interviews, real-world conversations, and practical projects. Each question comes with a clear, grounded answer that skips the fluff and gets straight to the “aha.”

Whether you’re brushing up for your next role, interviewing candidates, or just trying to deepen your grasp of how LLMs actually work, this is designed to save you hours of scattered research and help you focus on what matters most.

Let’s dive in.

Question 1: What does tokenization entail, and why is it critical for LLMs?

Tokenization involves breaking down text into smaller units, or tokens, such as words, subwords, or characters. For example, “artificial” might be split into “art,” “ific,” and “ial.” This process is vital because LLMs process numerical representations of tokens, not raw text. Tokenization enables models to handle diverse languages, manage rare or unknown words, and optimize vocabulary size, enhancing computational efficiency and model performance.

Question 2: How does the attention mechanism function in transformer models?

The attention mechanism allows LLMs to weigh the importance of different tokens in a sequence when generating or interpreting text. It computes similarity scores between query, key, and value vectors, using operations like dot products, to focus on relevant tokens. For instance, in “The cat chased the mouse,” attention helps the model link “mouse” to“chased.” This mechanism improves context understanding, making transformers highly effective for NLP tasks.

Question 3: What is the context window in LLMs, and why does it matter?

The context window refers to the number of tokens an LLM can process at once, defining its “memory” for understanding or generating text. A larger window, like 32,000 tokens, allows the model to consider more context, improving coherence in tasks like summarization. However, it increases computational costs. Balancing window size with efficiency is crucial for practical LLM deployment.

Question 4: What distinguishes LoRA from QLoRA in fine-tuning LLMs?

LoRA (Low-Rank Adaptation) is a fine-tuning method that adds low-rank matrices to a models layers, enabling efficient adaptation with minimal memory overhead. QLoRA extends this by applying quantization (e.g., 4-bit precision) to further reduce memory usage while maintaining accuracy. For example, QLoRA can fine-tune a 70B-parameter model on a single GPU, making it ideal for resource-constrained environments.

Question 5: How does beam search improve text generation compared to greedy decoding?

Beam search explores multiple word sequences during text generation, keeping the top k candidates (beams) at each step, unlike greedy decoding, which selects only the most probable word. This approach, with k = 5, for instance, ensures more coherent outputs by balancing probability and diversity, especially in tasks like machine translation ordialogue generation.

Question 6: What role does temperature play in controlling LLM output?

Temperature is a hyperparameter that adjusts the randomness of token selection in text generation. A low temperature (e.g., 0.3) favors high-probability tokens, producing pre-dictable outputs. A high temperature (e.g., 1.5) increases diversity by flattening the probability distribution. Setting temperature to 0.8 often balances creativity and coherence for tasks like storytelling.

Question 7: What is masked language modeling, and how does it aid pretraining?

Masked language modeling (MLM) involves hiding random tokens in a sequence and training the model to predict them based on context. Used in models like BERT, MLM fosters bidirectional understanding of language, enabling the model to grasp semantic relationships. This pretraining approach equips LLMs for tasks like sentiment analysisor question answering.

Question 8: What are sequence-to-sequence models, and where are they applied?

Sequence-to-sequence (Seq2Seq) models transform an input sequence into an output sequence, often of different lengths. They consist of an encoder to process the input and a decoder to generate the output. Applications include machine translation (e.g., English to Spanish), text summarization, and chatbots, where variable-length inputs and outputs are common.

Question 9: How do autoregressive and masked models differ in LLM training?

Autoregressive models, like GPT, predict tokens sequentially based on prior tokens, excelling in generative tasks such as text completion. Masked models, like BERT, predict masked tokens using bidirectional context, making them ideal for understanding tasks like classification. Their training objectives shape their strengths in generation versuscomprehension.

Question 10: What are embeddings, and how are they initialized in LLMs?

Embeddings are dense vectors that represent tokens in a continuous space, capturing semantic and syntactic properties. They are often initialized randomly or with pretrained models like GloVe, then fine-tuned during training. For example, the embedding for “dog” might evolve to reflect its context in pet-related tasks, enhancing model accuracy.

Question 11: What is next sentence prediction, and how does it enhance LLMs?

Next sentence prediction (NSP) trains models to determine if two sentences are consecutive or unrelated. During pretraining, models like BERT learn to classify 50% positive (sequential) and 50% negative (random) sentence pairs. NSP improves coherence in tasks like dialogue systems or document summarization by understanding sentence relationships.

Question 12: How do top-k and top-p sampling differ in text generation?

Top-k sampling selects the k most probable tokens (e.g., k=20) for random sampling, ensuring controlled diversity. Top-p (nucleus) sampling chooses tokens whose cumulative probability exceeds a threshold p(e.g., 0.95), adapting to context. Top-p offers more flexibility, producing varied yet coherent outputs in creative writing.

Question 13: Why is prompt engineering crucial for LLM performance?

Prompt engineering involves designing inputs to elicit desired LLM responses. A clear prompt, like “Summarize this article in 100 words,” improves output relevance compared to vague instructions. Its especially effective in zero-shot or few-shot settings, enabling LLMs to tackle tasks like translation or classification without extensive fine-tuning.

Question 14: How can LLMs avoid catastrophic forgetting during fine-tuning?

Catastrophic forgetting occurs when fine-tuning erases prior knowledge. Mitigation strategies include:

- Rehearsal: Mixing old and new data during training.

- Elastic Weight Consolidation: Prioritizing critical weights to preserve knowledge.

- Modular Architectures: Adding task-specific modules to avoid overwriting.

These methods ensure LLMs retain versatility across tasks.

Question 15: What is model distillation, and how does it benefit LLMs?

Model distillation trains a smaller “student” model to mimic a larger “teacher” model’s outputs, using soft probabilities rather than hard labels. This reduces memory and computational requirements, enabling deployment on devices like smartphones while retaining near-teacher performance, ideal for real-time applications.

Question 16: How do LLMs manage out-of-vocabulary (OOV) words?

LLMs use subword tokenization, like Byte-Pair Encoding (BPE), to break OOV words into known subword units. For instance, “cryptocurrency” might split into “crypto” and “currency.” This approach allows LLMs to process rare or new words, ensuring robust language understanding and generation.

Question 17: How do transformers improve on traditional Seq2Seq models?

Transformers overcome Seq2Seq limitations by:

- Parallel Processing: Self-attention enables simultaneous token processing, unlike sequential RNNs.

- Long-Range Dependencies: Attention captures distant token relationships.

- Positional Encodings: These preserve sequence order.

These features enhance scalability and performance in tasks like translation.

Question 18: What is overfitting, and how can it be mitigated in LLMs?

Overfitting occurs when a model memorizes training data, failing to generalize. Mitigation includes:

- Regularization: L1/L2 penalties simplify models.

- Dropout: Randomly disables neurons during training.

- Early Stopping: Halts training when validation performance plateaus.

These techniques ensure robust generalization to unseen data.

Question 19: What are generative versus discriminative models in NLP?

Generative models, like GPT, model joint probabilities to create new data, such as text or images. Discriminative models, like BERT for classification, model conditional probabilities to distinguish classes, e.g., sentiment analysis. Generative models excel in creation, while discriminative models focus on accurate classification.

Question 20: How does GPT-4 differ from GPT-3 in features and applications?

GPT-4 surpasses GPT-3 with:

- Multimodal Input: Processes text and images.

- Larger Context: Handles up to 25,000 tokens versus GPT-3’s 4,096.

- Enhanced Accuracy: Reduces factual errors through better fine-tuning.

These improvements expand its use in visual question answering and complex dialogues.

Question 21: What are positional encodings, and why are they used?

Positional encodings add sequence order information to transformer inputs, as self-attention lacks inherent order awareness. Using sinusoidal functions or learned vectors, they ensure tokens like “king” and “crown” are interpreted correctly based on position, critical for tasks like translation.

Question 22: What is multi-head attention, and how does it enhance LLMs?

Multi-head attention splits queries, keys, and values into multiple subspaces, allowing the model to focus on different aspects of the input simultaneously. For example, in a sentence, one head might focus on syntax, another on semantics. This improves the models ability to capture complex patterns.

Question 23: How is the softmax function applied in attention mechanisms?

The softmax function normalizes attention scores into a probability distribution:

In attention, it converts raw similarity scores (from query-key dot products) into weights, emphasizing relevant tokens. This ensures the model focuses on contextually important parts of the input.

Question 24: How does the dot product contribute to self-attention?

In self-attention, the dot product between query (Q) and key (K) vectors computes similarity scores:

High scores indicate relevant tokens. While efficient, its quadratic complexity (O(n² )) for long sequences has spurred research into sparse attention alternatives.

Question 25: Why is cross-entropy loss used in language modeling?

Cross-entropy loss measures the divergence between predicted and true token probabilities:

It penalizes incorrect predictions, encouraging accurate token selection. In language modeling, it ensures the model assigns high probabilities to correct next tokens, optimizing performance.

Question 26: How are gradients computed for embeddings in LLMs?

Gradients for embeddings are computed using the chain rule during backpropagation:

These gradients adjust embedding vectors to minimize loss, refining their semantic representations for better task performance.

Question 27: What is the Jacobian matrix’s role in transformer backpropagation?

The Jacobian matrix captures partial derivatives of outputs with respect to inputs. In transformers, it helps compute gradients for multidimensional outputs, ensuring accurate updates to weights and embeddings during backpropagation, critical for optimizing complex models.

Question 28: How do eigenvalues and eigenvectors relate to dimensionality reduction?

Eigenvectors define principal directions in data, and eigenvalues indicate their variance. In techniques like PCA, selecting eigenvectors with high eigenvalues reduces dimensionality while retaining most variance, enabling efficient data representation for LLMs’ input processing.

Question 29: What is KL divergence, and how is it used in LLMs?

KL divergence quantifies the difference between two probability distributions:

In LLMs, it evaluates how closely model predictions match true distributions, guiding fine-tuning to improve output quality and alignment with target data.

Question 30: What is the derivative of the ReLU function, and why is it significant?

The ReLU function,f(x) =max(0,x), has a derivative:

Its sparsity and non-linearity prevent vanishing gradients, making ReLU computationally efficient and widely used in LLMs for robust training.

Question 31: How does the chain rule apply to gradient descent in LLMs?

The chain rule computes derivatives of composite functions:

In gradient descent, it enables backpropagation to calculate gradients layer by layer, updating parameters to minimize loss efficiently across deep LLM architectures.

Question 32: How are attention scores calculated in transformers?

Attention scores are computed as:

The scaled dot product measures token relevance, and softmax normalizes scores to focus on key tokens, enhancing context-aware generation in tasks like summarization.

Question 33: How does Gemini optimize multimodal LLM training?

Gemini enhances efficiency via:

- Unified Architecture: Combines text and image processing for parameter efficiency.

- Advanced Attention: Improves cross-modal learning stability.

- Data Efficiency: Uses self-supervised techniques to reduce labeled data needs.

These features make Gemini more stable and scalable than models like GPT-4.

Question 34: What types of foundation models exist?

Foundation models include:

- Language Models: BERT, GPT-4 for text tasks.

- Vision Models: ResNet for image classification.

- Generative Models: DALL-E for content creation.

- Multimodal Models: CLIP for text-image tasks.

These models leverage broad pretraining for diverse applications.

Question 35: How does PEFT mitigate catastrophic forgetting?

Parameter-Efficient Fine-Tuning (PEFT) updates only a small subset of parameters, freezing the rest to preserve pretrained knowledge. Techniques like LoRA ensure LLMs adapt to new tasks without losing core capabilities, maintaining performance across domains.

Question 36: What are the steps in Retrieval-Augmented Generation (RAG)?

RAG involves:

- Retrieval: Fetching relevant documents using query embeddings.

- Ranking: Sorting documents by relevance.

- Generation: Using retrieved context to generate accurate responses.

RAG enhances factual accuracy in tasks like question answering.

Question 37: How does Mixture of Experts (MoE) enhance LLM scalability?

MoE uses a gating function to activate specific expert sub-networks per input, reducing computational load. For example, only 10% of a model’s parameters might be used per query, enabling billion-parameter models to operate efficiently while maintaining high performance.

Question 38: What is Chain-of-Thought (CoT) prompting, and how does it aid reasoning?

CoT prompting guides LLMs to solve problems step-by-step, mimicking human reasoning. For example, in math problems, it breaks down calculations into logical steps, improving accuracy and interpretability in complex tasks like logical inference or multi-step queries.

Question 39: How do discriminative and generative AI differ?

Discriminative AI, like sentiment classifiers, predicts labels based on input features, modeling conditional probabilities. Generative AI, like GPT, creates new data by modeling joint probabilities, suitable for tasks like text or image generation, offering creative flexibility.

Question 40: How does knowledge graph integration improve LLMs?

Knowledge graphs provide structured, factual data, enhancing LLMs by:

- Reducing Hallucinations: Verifying facts against the graph.

- Improving Reasoning: Leveraging entity relationships.

- Enhancing Context: Offering structured context for better responses.

This is valuable for question answering and entity recognition.

Question 41: What is zero-shot learning, and how do LLMs implement it?

Zero-shot learning allows LLMs to perform untrained tasks using general knowledge from pretraining. For example, prompted with “Classify this review as positive or negative,” an LLM can infer sentiment without task-specific data, showcasing its versatility.

Question 42: How does Adaptive Softmax optimize LLMs?

Adaptive Softmax groups words by frequency, reducing computations for rare words. This lowers the cost of handling large vocabularies, speeding up training and inference while maintaining accuracy, especially in resource-limited settings.

Question 43: How do transformers address the vanishing gradient problem?

Transformers mitigate vanishing gradients via:

- Self-Attention: Avoiding sequential dependencies.

- Residual Connections: Allowing direct gradient flow.

- Layer Normalization: Stabilizing updates.

These ensure effective training of deep models, unlike RNNs.

Question 44: What is few-shot learning, and what are its benefits?

Few-shot learning enables LLMs to perform tasks with minimal examples, leveraging pretrained knowledge. Benefits include reduced data needs, faster adaptation, and cost efficiency, making it ideal for niche tasks like specialized text classification.

Question 45: How would you fix an LLM generating biased or incorrect outputs?

To address biased or incorrect outputs:

- Analyze Patterns: Identify bias sources in data or prompts.

- Enhance Data: Use balanced datasets and debiasing techniques.

- Fine-Tune: Retrain with curated data or adversarial methods.

These steps improve fairness and accuracy.

Question 46: How do encoders and decoders differ in transformers?

Encoders process input sequences into abstract representations, capturing context. Decoders generate outputs using encoder outputs and prior tokens. In translation, the encoder understands the source, and the decoder produces the target language, enabling effective Seq2Seq tasks.

Question 47: How do LLMs differ from traditional statistical language models?

LLMs use transformer architectures, massive datasets, and unsupervised pretraining, unlike statistical models (e.g., N-grams) that rely on simpler, supervised methods. LLMs handle long-range dependencies, contextual embeddings, and diverse tasks, but require significant computational resources.

Question 48: What is a hyperparameter, and why is it important?

Hyperparameters are preset values, like learning rate or batch size, that control model training. They influence convergence and performance; for example, a high learning rate may cause instability. Tuning hyperparameters optimizes LLM efficiency and accuracy.

Question 49: What defines a Large Language Model (LLM)?

LLMs are AI systems trained on vast text corpora to understand and generate human-like language. With billions of parameters, they excel in tasks like translation, summarization, and question answering, leveraging contextual learning for broad applicability.

Question 50: What challenges do LLMs face in deployment?

LLM challenges include:

- Resource Intensity: High computational demands.

- Bias: Risk of perpetuating training data biases.

- Interpretability: Complex models are hard to explain.

- Privacy: Potential data security concerns.

Addressing these ensures ethical and effective LLM use.

Conclusion

It took me a long time — and way too many late-night rabbit holes — to realize that most LLM interview prep doesn’t need to be this chaotic.

You don’t need 30 tabs open.

You don’t need to memorize every obscure paper.

You just need to understand the fundamentals clearly, know where to go deep, and focus on concepts that actually show up in real conversations and systems.

This guide is the resource I wish I had when I started. Not a brain dump, not fluff — just the core questions and practical explanations that help you sound like someone who gets it.

Whether you’re aiming to land a new role, sharpen your skills, or just make sense of the LLM noise, this is your foundation.

Keep building on it — and good luck out there.

Want to hear from me more often?

👉 Connect with me on LinkedIn!

I share daily actionable insights, tips, and updates to help you avoid costly mistakes and stay ahead in the AI world. Follow me here:

Are you a tech professional looking to grow your audience through writing?

My Tech Audience Accelerator is packed with actionable copywriting and audience building strategies that have helped hundreds of professionals stand out and accelerate their growth. Subscribe now to stay in the loop.