Learn the Model Context Protocol hands-on by building a simple Story Manager that Claude can interact with. No prior experience needed.

Why I Built This (And Why You Should Care)

When Anthropic released the Model Context Protocol (MCP), I was intrigued but confused. The documentation was great, but I learn best by doing. So I built SimpleMCP, a minimal, educational project that demonstrates how MCP actually works under the hood.

In this tutorial, I'll walk you through building your own MCP server from scratch. You'll create a "Story Manager" tool that lets Claude list and read stories (create, update, and delete are left as an extension at the end). By the end, you'll understand how AI assistants communicate with external tools and can build your own integrations.

What makes this different from other MCP tutorials?

- We'll build two transport layers (STDIO and HTTP/SSE)

- You'll see clean architecture patterns that separate business logic from protocols

- Everything is runnable: clone the GitHub repo and follow along

- No production complexity, just the core concepts

Let's dive in!

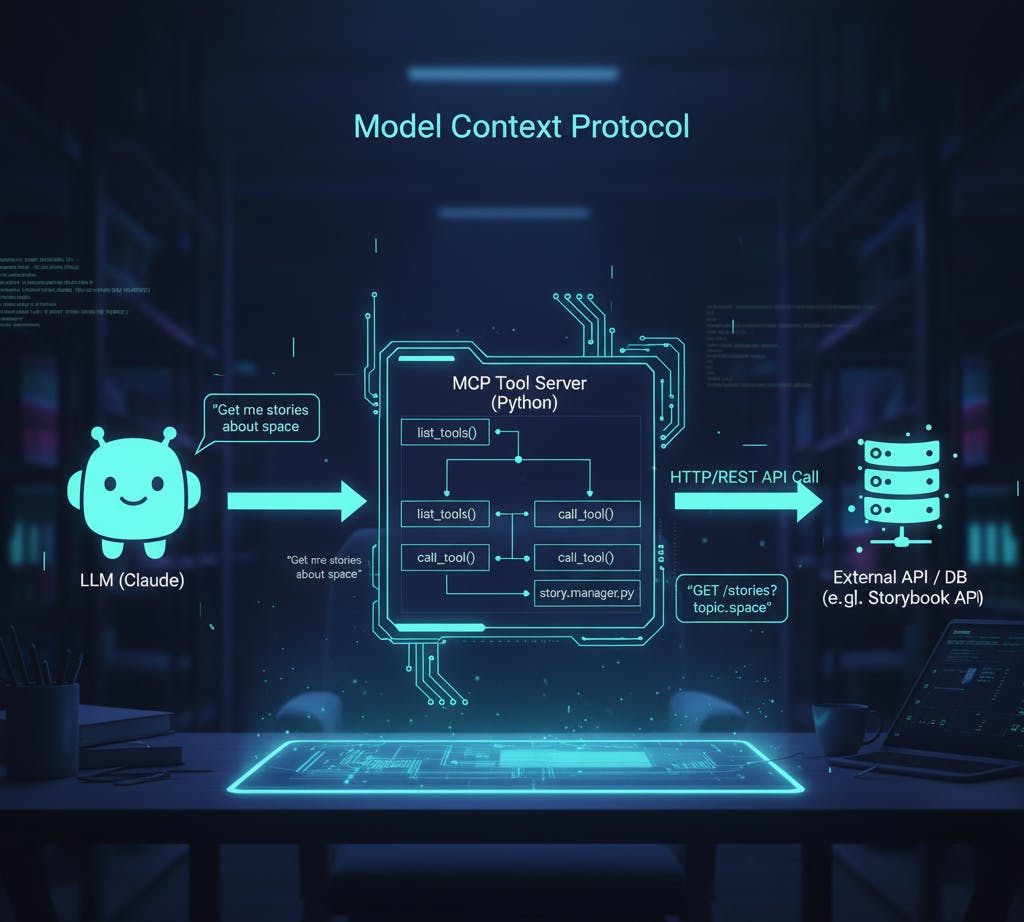

What is MCP? (The 5-Minute Primer)

The Model Context Protocol is like USB-C for AI applications. Before USB-C, every device had a different connector. Before MCP, every AI tool integration was custom-built.

MCP provides a standardized way for AI assistants (like Claude) to communicate with external tools, databases, APIs, and services.

Here's how it works:

```text
┌─────────────────────────┐
│       Claude (AI)       │
│    "Tell me a story"    │
└───────────┬─────────────┘
            │
            │  MCP Protocol
            │  (JSON-RPC 2.0)
            │
    ┌───────▼────────┐
    │   MCP Server   │
    │  (Your Tool)   │
    └───────┬────────┘
            │
    ┌───────▼─────────┐
    │ Business Logic  │
    │  Story Manager  │
    └─────────────────┘
```
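To make the "JSON-RPC 2.0" label concrete, here is a rough sketch of what a single tool call and its reply might look like as plain Python dictionaries. The `tools/call` method name and the `content` list come from the MCP specification; the `id` value and the placeholder story text are invented for illustration.

```python
import json

# A JSON-RPC 2.0 request asking the server to run a tool.
request = {
    "jsonrpc": "2.0",
    "id": 1,  # arbitrary; the response echoes it back
    "method": "tools/call",
    "params": {"name": "get_story", "arguments": {"story_id": "squirrel_and_owl"}},
}

# The matching response carries the same id and a list of content items.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "# (story title)\n\n(story text)"}],
        "isError": False,
    },
}

# Over STDIO each message travels as a single line of JSON.
wire_line = json.dumps(request)
decoded = json.loads(wire_line)
print(decoded["method"], decoded["params"]["name"])
```

The SDK builds and parses these envelopes for you, so you rarely write them by hand, but seeing the shape helps when you read server logs.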

Core MCP Concepts:

- Tools: Functions that Claude can call (like `list_stories`, `get_story`)

- Resources: Data that Claude can read (like files or database records)

- Prompts: Pre-written templates Claude can use

- Transports: How the data flows (STDIO or HTTP/SSE)

In this tutorial, we'll focus on Tools and Transports since they're the foundation.

What We're Building: A Story Manager

Our MCP server will manage a collection of children's stories. Claude will be able to:

- List all stories (`list_stories` tool)

- Read a specific story (`get_story` tool)

Simple, right? But this demonstrates everything you need to know about MCP.

Project Structure:

```text
SimpleMCP/
├── story_manager.py       # Business logic (transport-agnostic)
├── mcp_server.py          # STDIO transport implementation
├── mcp_http_server.py     # HTTP/SSE transport implementation
├── stories/
│   └── stories.json       # Sample story data
└── tests/                 # Tests for our implementation
```

The key architectural decision here is separating business logic from transport. The story_manager.py file has no idea whether it's being called via STDIO or HTTP; it just manages stories. This makes testing easier and lets us add new transports without touching our core logic.
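Before looking at the code, it helps to know the shape of the data. The sketch below assumes the layout that the business logic reads: a top-level `stories` list whose entries carry `id`, `title`, and `text`. The titles and text here are placeholders, and the real stories.json in the repo may contain more fields. `check_shape` is a hypothetical helper, not part of the project.

```python
import json
import tempfile
from pathlib import Path

# Placeholder data in the assumed layout; not the repo's actual stories.
sample = {
    "stories": [
        {"id": "squirrel_and_owl", "title": "(placeholder title)", "text": "(placeholder text)"},
        {"id": "bear_loses_roar", "title": "(placeholder title)", "text": "(placeholder text)"},
    ]
}

REQUIRED_KEYS = {"id", "title", "text"}


def check_shape(data: dict) -> None:
    """Raise ValueError if the data does not match the assumed layout."""
    if "stories" not in data:
        raise ValueError("missing 'stories' key")
    for story in data["stories"]:
        missing = REQUIRED_KEYS - story.keys()
        if missing:
            raise ValueError(f"story is missing keys: {sorted(missing)}")


# Write the sample to stories/stories.json in a throwaway directory and read it back.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "stories" / "stories.json"
    path.parent.mkdir()
    path.write_text(json.dumps(sample, indent=2), encoding="utf-8")
    loaded = json.loads(path.read_text(encoding="utf-8"))
    check_shape(loaded)
    print([story["id"] for story in loaded["stories"]])
```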

Step 1: Building the Business Logic

Let's start with the foundation: the story_manager.py module that handles all story operations.

story_manager.py (simplified for clarity):

```python
import json
from pathlib import Path
from typing import Dict, List, Optional

# Default data file, assumed from the project layout (stories/stories.json)
DEFAULT_STORIES_PATH = Path(__file__).parent / "stories" / "stories.json"

# Module-level cache for loaded stories
_stories_cache: Optional[dict] = None


def load_stories(file_path: str = str(DEFAULT_STORIES_PATH)) -> dict:
    """Load stories from a JSON file."""
    with open(file_path, 'r', encoding='utf-8') as f:
        data = json.load(f)
    if 'stories' not in data:
        raise ValueError("Invalid JSON: missing 'stories' key")
    return data


def list_stories() -> List[Dict[str, str]]:
    """Get a list of all available stories."""
    global _stories_cache
    if _stories_cache is None:
        _stories_cache = load_stories()
    # Extract just id and title
    return [
        {'id': story['id'], 'title': story['title']}
        for story in _stories_cache['stories']
    ]


def get_story(story_id: str) -> Dict[str, str]:
    """Retrieve a complete story by its ID."""
    global _stories_cache
    if _stories_cache is None:
        _stories_cache = load_stories()
    # Search for the story
    for story in _stories_cache['stories']:
        if story['id'] == story_id:
            return {
                'id': story['id'],
                'title': story['title'],
                'text': story['text']
            }
    raise KeyError(f"Story '{story_id}' not found")
```

Why this design?

- Caching: Stories are loaded once and cached for performance

- Clear API: Simple functions with clear inputs and outputs

- Error handling: Explicit exceptions for missing stories

- Transport-agnostic: No MCP code here, just pure Python

This is the pattern I recommend: keep your business logic separate from your protocol layer. It makes everything easier to test, debug, and extend.
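Here is one way that testability might look in practice. The sketch uses a stand-in for `get_story` so it runs on its own, and a hypothetical `seed_cache` helper that fills the module-level cache directly so the checks never touch the filesystem. With the real module you could get the same effect by assigning to `story_manager._stories_cache` in a test.

```python
from typing import Optional

# Same module-level cache idea as story_manager.py
_stories_cache: Optional[dict] = None


def get_story(story_id: str) -> dict:
    """Stand-in for story_manager.get_story that reads only from the cache."""
    if _stories_cache is None:
        raise RuntimeError("cache is empty; seed it or load from disk first")
    for story in _stories_cache["stories"]:
        if story["id"] == story_id:
            return {"id": story["id"], "title": story["title"], "text": story["text"]}
    raise KeyError(f"Story '{story_id}' not found")


def seed_cache(stories: list) -> None:
    """Hypothetical test helper: fill the cache without reading a file."""
    global _stories_cache
    _stories_cache = {"stories": stories}


def test_get_story_found() -> None:
    seed_cache([{"id": "a", "title": "A", "text": "Once..."}])
    assert get_story("a")["title"] == "A"


def test_get_story_missing() -> None:
    seed_cache([])
    try:
        get_story("nope")
    except KeyError:
        return
    raise AssertionError("expected KeyError")


test_get_story_found()
test_get_story_missing()
print("all checks passed")
```

Notice that nothing here imports `mcp`. That is the payoff of keeping the protocol layer out of the business logic.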

Step 2: Creating a STDIO Transport Server

Now let's wrap our business logic in an MCP server. STDIO (Standard Input/Output) is the simplest transport, perfect for local desktop applications like Claude Desktop.

mcp_server.py (core parts):

```python
import asyncio
import json  # needed for json.dumps in call_tool

from mcp.server import Server
from mcp.server.stdio import stdio_server
from mcp.types import Tool, TextContent

import story_manager

# Create the MCP server instance
app = Server("story-teller")


@app.list_tools()
async def list_tools() -> list[Tool]:
    """Tell MCP clients what tools we provide."""
    return [
        Tool(
            name="list_stories",
            description="List all available stories. Returns id and title.",
            inputSchema={
                "type": "object",
                "properties": {},
                "required": []
            }
        ),
        Tool(
            name="get_story",
            description="Get a story by ID. Returns complete story text.",
            inputSchema={
                "type": "object",
                "properties": {
                    "story_id": {
                        "type": "string",
                        "description": "Story ID (e.g., 'squirrel_and_owl')",
                        "enum": [
                            "squirrel_and_owl",
                            "bear_loses_roar",
                            "turtle_wants_to_fly",
                            "lonely_firefly",
                            "rabbit_and_carrot"
                        ]
                    }
                },
                "required": ["story_id"]
            }
        )
    ]


@app.call_tool()
async def call_tool(name: str, arguments: dict) -> list[TextContent]:
    """Handle tool calls from MCP clients."""
    if name == "list_stories":
        stories = story_manager.list_stories()
        return [TextContent(
            type="text",
            text=json.dumps(stories, indent=2)
        )]
    elif name == "get_story":
        story_id = arguments["story_id"]
        story = story_manager.get_story(story_id)
        # Format the story as Markdown (the string content starts at column 0)
        story_text = f"""# {story['title']}

{story['text']}

---
Story ID: {story['id']}
"""
        return [TextContent(type="text", text=story_text)]
    else:
        return [TextContent(
            type="text",
            text=f"Error: Unknown tool '{name}'"
        )]


async def main():
    """Run the server using STDIO transport."""
    async with stdio_server() as (read_stream, write_stream):
        await app.run(
            read_stream,
            write_stream,
            app.create_initialization_options()
        )


if __name__ == "__main__":
    asyncio.run(main())
```

Key concepts here:

- Tool Discovery (`list_tools`): Clients ask "what can you do?" and we respond with tool definitions

- Input Schemas: We use JSON Schema to define what parameters each tool accepts

- Tool Execution (`call_tool`): When Claude calls a tool, we route it to the right function

- STDIO Transport: We use `stdio_server()` to communicate via stdin/stdout
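To show what an input schema buys you, here is a minimal hand-rolled check of a tool call's arguments against a schema like the one for `get_story`. It only understands `required`, string types, and `enum`; a real JSON Schema validator covers far more. `validate_arguments` is a hypothetical helper, not something the MCP SDK provides under that name, and the enum is shortened for brevity.

```python
# Shortened version of the get_story input schema from the server above.
GET_STORY_SCHEMA = {
    "type": "object",
    "properties": {
        "story_id": {
            "type": "string",
            "enum": ["squirrel_and_owl", "bear_loses_roar"],
        }
    },
    "required": ["story_id"],
}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the call looks valid."""
    problems = []
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument '{name}'")
    for name, value in arguments.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            continue  # JSON Schema allows extra properties by default
        if spec.get("type") == "string" and not isinstance(value, str):
            problems.append(f"'{name}' must be a string")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"'{name}' must be one of {spec['enum']}")
    return problems


print(validate_arguments(GET_STORY_SCHEMA, {"story_id": "squirrel_and_owl"}))
print(validate_arguments(GET_STORY_SCHEMA, {}))
print(validate_arguments(GET_STORY_SCHEMA, {"story_id": "dragon"}))
```

The `enum` is also a hint to Claude: it tells the model which IDs exist before it ever calls the tool.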

The MCP protocol flow:

```text
Client (Claude)          MCP Server (You)
     │                         │
     ├──── initialize ────────>│
     │<───── capabilities ─────┤
     │                         │
     ├──── list_tools ────────>│
     │<───── tool list ────────┤
     │                         │
     ├──── call_tool ─────────>│
     │     (list_stories)      │
     │<───── result ───────────┤
     │                         │
```
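The same flow can be sketched as a toy dispatcher that answers one JSON-RPC request at a time. On the wire the methods are named `tools/list` and `tools/call`; `list_tools` and `call_tool` are the SDK's handler names. Everything else here is simplified: the `initialize` reply is abbreviated, the follow-up `notifications/initialized` message is omitted, and `handle`, `TOOLS`, and `STORIES` are made-up names for illustration.

```python
import json

# Toy data standing in for the real tool list and story collection.
TOOLS = [{
    "name": "list_stories",
    "description": "List all available stories. Returns id and title.",
    "inputSchema": {"type": "object", "properties": {}},
}]
STORIES = [{"id": "squirrel_and_owl", "title": "(placeholder title)"}]


def _error(msg_id, code: int, text: str) -> dict:
    """Build a JSON-RPC error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": text}}


def handle(message: dict) -> dict:
    """Answer one JSON-RPC request, mirroring the three exchanges in the diagram."""
    msg_id, method = message["id"], message["method"]
    if method == "initialize":
        # Abbreviated: a real reply also includes protocolVersion and more detail.
        result = {
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "toy-server", "version": "0.0.0"},
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        name = message.get("params", {}).get("name")
        if name != "list_stories":
            return _error(msg_id, -32602, f"Unknown tool: {name}")
        result = {"content": [{"type": "text", "text": json.dumps(STORIES)}]}
    else:
        return _error(msg_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


requests = [
    {"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {}},
    {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
     "params": {"name": "list_stories", "arguments": {}}},
]
replies = [handle(req) for req in requests]
for req, reply in zip(requests, replies):
    print(req["method"], "->", list(reply["result"]))
```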

Step 3: Creating an HTTP/SSE Transport Server

STDIO works great for local applications, but what if you want to access your MCP server over a network? That's where HTTP with Server-Sent Events (SSE) comes in.

mcp_http_server.py (using FastMCP for simplicity):

```python
import json  # needed for json.dumps in list_stories

from mcp.server.fastmcp import FastMCP

import story_manager

# Initialize FastMCP server
mcp = FastMCP(name="story-teller-http")


@mcp.tool()
async def list_stories() -> str:
    """
    List all available stories.

    Returns JSON array of stories with 'id' and 'title' fields.
    """
    stories = story_manager.list_stories()
    return json.dumps(stories, indent=2)


@mcp.tool()
async def get_story(story_id: str) -> str:
    """
    Get a specific story by its ID.

    Args:
        story_id: Story identifier (e.g., 'squirrel_and_owl')

    Returns:
        Formatted story text with title and content.
    """
    story = story_manager.get_story(story_id)
    return f"""# {story['title']}

{story['text']}

---
Story ID: {story['id']}
"""


if __name__ == "__main__":
    # Run on http://localhost:8000/sse
    mcp.run(transport="sse")
```

What's different here?

- FastMCP: A higher-level wrapper that handles HTTP/SSE details

- Decorator-based: Tools are defined with `@mcp.tool()` decorators

- Type hints: FastMCP automatically generates schemas from Python type hints

- Same business logic: We still call the same `story_manager` functions
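If "generates schemas from type hints" sounds like magic, the idea can be approximated in a few lines with the standard library. This is a toy sketch of the concept, not FastMCP's actual implementation; `schema_from_signature` and the `PY_TO_JSON` mapping are hypothetical names.

```python
import inspect

# Hypothetical mapping from Python annotations to JSON Schema type names.
PY_TO_JSON = {str: "string", int: "integer", float: "number", bool: "boolean"}


def schema_from_signature(func) -> dict:
    """Build a small JSON Schema 'object' description from a function signature."""
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": PY_TO_JSON.get(param.annotation, "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {"type": "object", "properties": properties, "required": required}


def get_story(story_id: str) -> str:
    """Get a specific story by its ID."""
    return story_id


def search(query: str, limit: int = 5) -> str:
    """Made-up second tool, to show an optional parameter."""
    return query


print(schema_from_signature(get_story))
print(schema_from_signature(search))
```

Compare the first result with the hand-written `inputSchema` from the STDIO server: the structure is the same, minus the description and `enum`.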

HTTP/SSE vs STDIO:

| Feature | STDIO | HTTP/SSE |
|---|---|---|
| Use case | Local desktop apps | Web apps, remote access |
| Connection | Process stdin/stdout | HTTP endpoint |
| Discovery | Via config file | Via URL |
| Latency | Very low | Network latency |
| Examples | Claude Desktop, Claude Code | Web integrations, APIs |

Step 4: Seeing It In Action

Let's test our STDIO server with Claude Desktop.

1. Configure Claude Desktop:

Add this to your Claude Desktop config (~/Library/Application Support/Claude/claude_desktop_config.json on macOS):

```json
{
  "mcpServers": {
    "story-teller": {
      "command": "python",
      "args": ["/absolute/path/to/SimpleMCP/mcp_server.py"]
    }
  }
}
```
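One thing that can go wrong here: a bare `python` command may resolve to an interpreter outside your virtual environment, in which case the `mcp` package might not be importable. As a hedged workaround, you could generate the entry with absolute paths for both the interpreter and the script. `build_config` below is a hypothetical helper; run it from inside the activated environment and paste the output into the config file.

```python
import json
import sys
from pathlib import Path


def build_config(server_script: str, python_path: str = sys.executable) -> dict:
    """Return an mcpServers entry that uses absolute paths throughout."""
    return {
        "mcpServers": {
            "story-teller": {
                # sys.executable is the interpreter running this script,
                # i.e. the virtual environment's Python when it is activated.
                "command": python_path,
                "args": [str(Path(server_script).resolve())],
            }
        }
    }


print(json.dumps(build_config("mcp_server.py"), indent=2))
```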

2. Restart Claude Desktop

3. Try these prompts:

- "What stories are available?"

- "Tell me the story about the squirrel and owl"

- "Show me all story titles"

Claude will use your MCP server to retrieve stories!

What's happening under the hood:

```text
You: "What stories are available?"
  │
  ▼
Claude: [Thinks] I should use the list_stories tool
  │
  ▼
MCP Protocol: call_tool(name="list_stories", arguments={})
  │
  ▼
Your Server: story_manager.list_stories()
  │
  ▼
Response: [{"id": "squirrel_and_owl", "title": "..."}, ...]
  │
  ▼
Claude: "Here are the available stories: ..."
```

Step 5: Testing the HTTP/SSE Server

For the HTTP server, start it manually:

```bash
python mcp_http_server.py
```

You'll see:

```text
================================================================================
SimpleMCP HTTP/SSE Server v1.0.0
================================================================================
Server Name: story-teller-http
Transport: SSE (Server-Sent Events)
Host: 0.0.0.0
Port: 8000
SSE Endpoint: http://localhost:8000/sse
Available Tools:
- list_stories: List all available stories
- get_story: Get a specific story by ID
Press Ctrl+C to stop the server
================================================================================
```

Now you can connect to it from any MCP client that supports HTTP/SSE by pointing to http://localhost:8000/sse.
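If you're curious what a client actually receives on that endpoint, Server-Sent Events are plain text: `event:` and `data:` lines, with a blank line between events. In MCP's SSE transport the server first sends an `endpoint` event telling the client where to POST its requests, then delivers replies as `message` events. The parser below is a minimal sketch that handles only those two fields, and the sample stream (session ID, path, payload) is invented for illustration.

```python
def parse_sse(raw: str) -> list[dict]:
    """Split a Server-Sent Events stream into {'event', 'data'} dicts.

    Handles only the 'event:' and 'data:' fields; a blank line ends an event.
    """
    events = []
    event_type, data_lines = "message", []  # "message" is the SSE default type
    for line in raw.splitlines():
        if line == "":
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith("event:"):
            event_type = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())
    return events


# Hypothetical stream: the session id, path, and payload are made up.
sample_stream = (
    "event: endpoint\n"
    "data: /messages/?session_id=abc123\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n'
    "\n"
)

for event in parse_sse(sample_stream):
    print(event["event"], "->", event["data"])
```

In practice an MCP client library handles this for you; the sketch is only meant to demystify the transport.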

Understanding the Differences: When to Use Which Transport

Use STDIO when:

- ✅ Building for local desktop applications (Claude Desktop, Cursor)

- ✅ You want minimal latency

- ✅ You don't need network access

- ✅ You're just learning MCP

Use HTTP/SSE when:

- ✅ Building web applications

- ✅ You need remote access to your MCP server

- ✅ Multiple clients need to connect simultaneously

- ✅ You're building a service/API

In my SimpleMCP project, I built both to demonstrate the concepts. In real projects, you'd typically choose one based on your use case.

What You Just Learned

Let's recap what we've covered:

- MCP fundamentals: Tools, resources, prompts, and transports

- Clean architecture: Separating business logic from protocol layers

- STDIO transport: For local desktop integrations

- HTTP/SSE transport: For network-based integrations

- Tool definitions: How to tell Claude what your tool does

- Real integration: Connecting to Claude Desktop

The pattern is always the same:

Business Logic → MCP Wrapper → Transport Layer → Claude

Next Steps: Build Your Own

Now that you understand the fundamentals, here are ideas to extend this:

- Add CRUD operations: Implement `create_story`, `update_story`, `delete_story`

- Different domain: Replace stories with todos, notes, or recipes

- Add resources: Let Claude browse story collections

- Add prompts: Create pre-written prompts for common story requests

- Connect to a database: Replace JSON files with SQLite or PostgreSQL

- Add authentication: Secure your HTTP/SSE server
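As a starting point for the first idea, here is a minimal in-memory sketch of the three missing operations. It is not from the SimpleMCP repo: `StoryStore` is a hypothetical class, nothing is persisted to disk, and the error types simply follow the `KeyError` convention used in story_manager.py.

```python
from typing import Dict, Optional


class StoryStore:
    """In-memory story collection with create, update, and delete."""

    def __init__(self) -> None:
        self._stories: Dict[str, dict] = {}

    def _require(self, story_id: str) -> dict:
        """Return the stored story or raise KeyError, like get_story does."""
        if story_id not in self._stories:
            raise KeyError(f"Story '{story_id}' not found")
        return self._stories[story_id]

    def create_story(self, story_id: str, title: str, text: str) -> dict:
        if story_id in self._stories:
            raise ValueError(f"Story '{story_id}' already exists")
        self._stories[story_id] = {"id": story_id, "title": title, "text": text}
        return dict(self._stories[story_id])

    def update_story(self, story_id: str, title: Optional[str] = None,
                     text: Optional[str] = None) -> dict:
        story = self._require(story_id)
        # Only overwrite the fields the caller actually supplied.
        if title is not None:
            story["title"] = title
        if text is not None:
            story["text"] = text
        return dict(story)

    def delete_story(self, story_id: str) -> None:
        self._require(story_id)
        del self._stories[story_id]

    def list_stories(self) -> list:
        return [{"id": s["id"], "title": s["title"]} for s in self._stories.values()]


store = StoryStore()
store.create_story("fox_and_moon", "(placeholder title)", "(placeholder text)")
store.update_story("fox_and_moon", title="(new placeholder title)")
print(store.list_stories())
store.delete_story("fox_and_moon")
print(store.list_stories())
```

Each method would then get its own tool definition in the MCP layer, following the same pattern as `list_stories` and `get_story`.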

The Full Code

Everything we've discussed is available in the SimpleMCP GitHub repository:

```bash
git clone https://github.com/dondetir/simpleMCP.git
cd simpleMCP
python3 -m venv .venv
source .venv/bin/activate
pip install -r requirements.txt
pytest tests/            # Run tests
python mcp_server.py     # Or python mcp_http_server.py
```

The repo includes:

- Complete working code

- Unit and integration tests

- Example configurations

- Detailed setup instructions

Final Thoughts

The Model Context Protocol is still young (released in late 2024), but it's already changing how we build AI integrations. Companies like GitHub, Slack, and Cloudflare have adopted it.

What excites me most is the composability. Once you understand the pattern, you can build MCP servers for anything:

- Your personal knowledge base

- IoT device control

- Database queries

- API integrations

- File system access

The key insight is this: MCP isn't about AI. It's about building standardized tool interfaces that happen to work great with AI.

If you build something cool with MCP, I'd love to hear about it! Drop a comment or open an issue on the GitHub repo.

Happy building! 🚀