Today, we are going to discuss a method proposed by researchers from four institutions, one of which is ByteDance AI Lab (ByteDance is the company behind the TikTok app). The new method is called Sparse R-CNN (not to be confused with the similarly named models that apply sparse convolutions to 3D computer vision tasks). It achieves near state-of-the-art performance in object detection while generating bounding boxes in a completely sparse and learnable way.

Let’s start with a short overview of existing detection methods.

Dense Method

One widely used pipeline is the one-stage detector, which directly predicts the label and location of anchor boxes densely covering spatial positions, scales, and aspect ratios in a single shot. Examples include SSD and YOLO.

Let’s consider the YOLO algorithm. Ultimately, it aims to predict the class of each object in the image and the bounding box specifying the object's location. Each bounding box can be described using four descriptors:

- center of a bounding box (bx, by)

- width (bw)

- height (bh)

- value c, corresponding to the class of the object (such as car, traffic light, etc.).

In addition, we have to predict the pc value, which is the probability that there is an object in the bounding box.
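To make these descriptors concrete, here is a minimal sketch of how one predicted box vector could be split into named fields. The layout `[pc, bx, by, bw, bh, class scores...]`, the `decode_box` helper, and the `CLASS_NAMES` label set are all hypothetical choices for illustration; real YOLO versions differ in ordering and in how class scores are encoded.

```python
from dataclasses import dataclass
from typing import List, Sequence

CLASS_NAMES = ["car", "traffic light", "pedestrian"]  # hypothetical label set


@dataclass
class Detection:
    pc: float   # probability that the box contains an object
    bx: float   # box center x
    by: float   # box center y
    bw: float   # box width
    bh: float   # box height
    label: str  # most likely class c


def decode_box(vector: Sequence[float], class_names: List[str] = CLASS_NAMES) -> Detection:
    """Split an assumed [pc, bx, by, bw, bh, score_1..score_C] vector into named fields."""
    if len(vector) != 5 + len(class_names):
        raise ValueError("expected 5 box values plus one score per class")
    pc, bx, by, bw, bh = vector[:5]
    scores = vector[5:]
    # class c is taken as the index of the highest class score
    best = max(range(len(scores)), key=lambda i: scores[i])
    return Detection(pc, bx, by, bw, bh, class_names[best])


det = decode_box([0.9, 0.5, 0.4, 0.2, 0.3, 0.1, 0.7, 0.2])
print(det.label)  # traffic light
```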

It is a dense method because it does not search the image for regions that could potentially contain an object. Instead, YOLO splits the image into cells using a 19×19 grid. In general, a one-stage detector could produce W×H cells, one per pixel. Each cell is responsible for predicting k bounding boxes (in this example, k = 5). Therefore, we end up with a large number of bounding boxes, W×H×k, for a single image.
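The arithmetic above can be checked with a small sketch of the dense output tensor. It assumes each box carries `pc, bx, by, bw, bh` plus one score per class, and uses 80 classes (the COCO label count) purely as an example; the helper names are hypothetical.

```python
import numpy as np


def dense_output_shape(grid_h, grid_w, boxes_per_cell, num_classes):
    """Shape of a YOLO-style dense prediction tensor.

    Each of the boxes_per_cell boxes in every cell gets pc, bx, by, bw, bh
    plus one score per class.
    """
    return (grid_h, grid_w, boxes_per_cell, 5 + num_classes)


def num_candidate_boxes(grid_h, grid_w, boxes_per_cell):
    """Every cell predicts k boxes, so the total is W x H x k."""
    return grid_h * grid_w * boxes_per_cell


# Placeholder for a network output on a 19x19 grid with k = 5 boxes per cell.
preds = np.zeros(dense_output_shape(19, 19, 5, num_classes=80), dtype=np.float32)
flat = preds.reshape(-1, preds.shape[-1])  # one row per candidate box

print(preds.shape)                       # (19, 19, 5, 85)
print(flat.shape)                        # (1805, 85)
print(num_candidate_boxes(100, 100, 5))  # 50000 for a hypothetical 100x100 map, one cell per pixel
```

Even a modest grid yields thousands of candidate boxes per image, which is exactly what makes this family of detectors "dense".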

For each cell in the grid and each bounding box, the values listed above are produced (source)

Dense-To-Sparse Method

Two-stage detectors, such as the one proposed in the Faster R-CNN paper, piggyback on dense proposals generated by a Region Proposal Network (RPN). These detectors have dominated modern object detection for years.

Two-stage detector architecture (source)

Proposals are obtained in a way similar to one-stage detectors, but instead of predicting the object class directly, the first stage predicts an objectness probability. The second stage then classifies and refines the boxes that remain after filtering by objectness score and overlap.
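The filtering between the two stages can be sketched as an objectness threshold followed by greedy non-maximum suppression (NMS), which removes boxes that overlap a more confident one too much. This is a simplified NumPy illustration, not Faster R-CNN's actual code; the helper names and the default values `score_thresh=0.5`, `iou_thresh=0.7`, and `max_keep=300` are assumptions.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one box and an array of boxes, all in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (box[2] - box[0]) * (box[3] - box[1])
    area_b = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area_a + area_b - inter)


def filter_proposals(boxes, objectness, score_thresh=0.5, iou_thresh=0.7, max_keep=300):
    """Keep confident, non-overlapping proposals (objectness threshold + greedy NMS)."""
    mask = objectness >= score_thresh  # drop boxes that probably contain no object
    boxes, objectness = boxes[mask], objectness[mask]
    order = np.argsort(-objectness)    # most confident first
    kept = []
    while order.size > 0 and len(kept) < max_keep:
        best = order[0]
        kept.append(best)
        rest = order[1:]
        # suppress remaining boxes that overlap the chosen one too much
        order = rest[iou(boxes[best], boxes[rest]) < iou_thresh]
    return boxes[np.array(kept, dtype=int)]


boxes = np.array([[0, 0, 10, 10], [1, 0, 10, 10], [20, 20, 30, 30], [0, 0, 5, 5]], dtype=float)
objectness = np.array([0.9, 0.8, 0.75, 0.3])
kept = filter_proposals(boxes, objectness)
print(kept)
```

Here the second box is suppressed because its IoU with the first is 0.9, and the last one is dropped for low objectness, so only the first and third boxes reach the second stage.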

Sparse Method

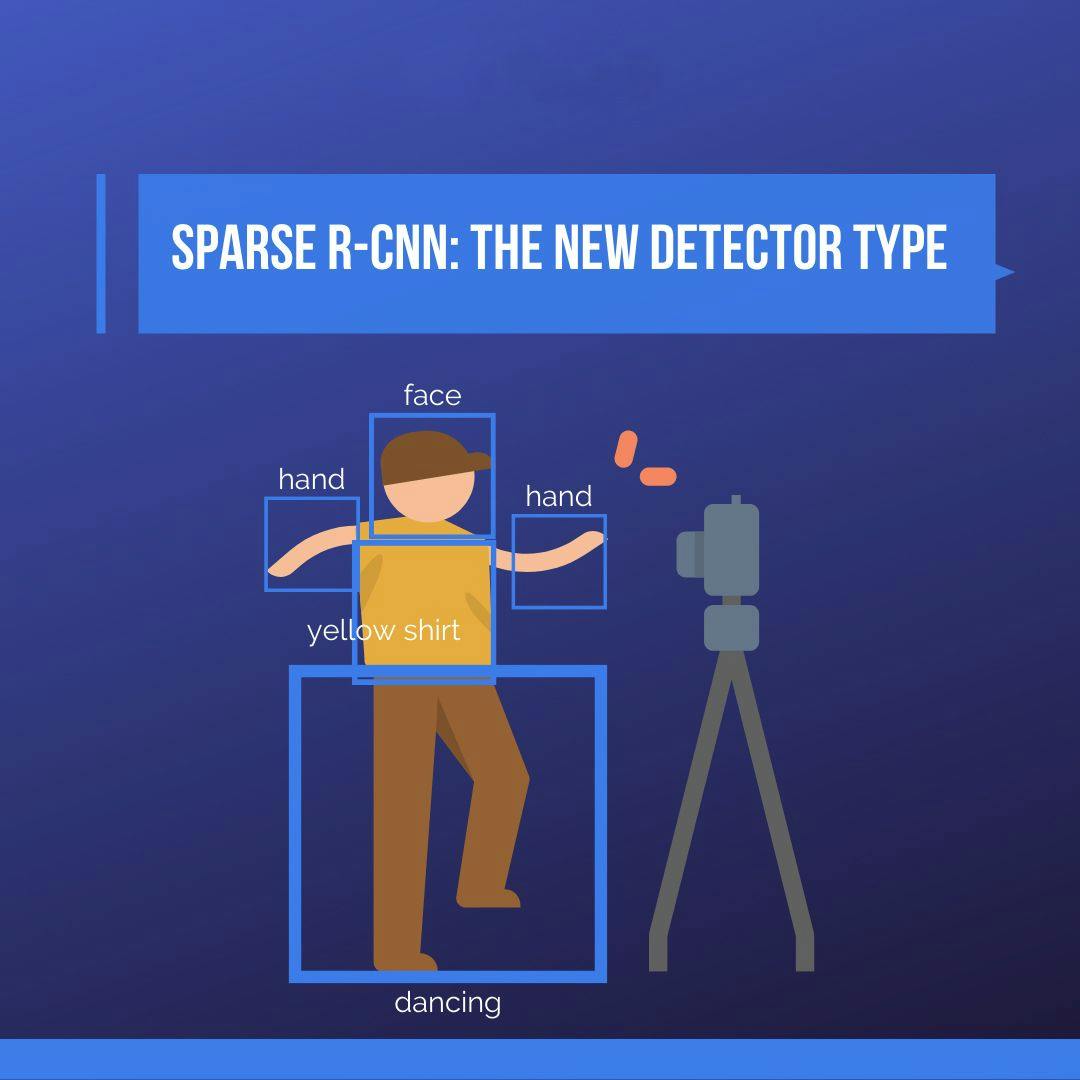

The authors position Sparse R-CNN as an extension of the existing object detection paradigms: after thoroughly dense (one-stage) and dense-to-sparse (two-stage) methods, it adds a new step that leads to a thoroughly sparse one.

Model architectures comparison (source)

In the reviewed paper, the RPN is dropped and replaced with a small set of proposal boxes (e.g., 100 per image). These boxes come from two learnable parts of the network: the proposal boxes and the proposal features. The former stores 4 values (x, y, h, w) per proposal, and the latter stores a latent vector of length 256 describing the contents of each box. The learned proposal boxes can be seen as statistics of likely object locations and serve as a starting point for the refinement steps that follow, while the learned proposal features are used to introduce an attention mechanism very similar to the one used in the DETR paper.
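A minimal sketch of what these two learnable parts look like as arrays is shown below. The sizes (100 proposals, 256-dimensional features) come from the text; the normalized `(cx, cy, w, h)` ordering, the whole-image initialization, and the random feature initialization are assumptions for illustration. In a real model these arrays would be trained weights rather than constants.

```python
import numpy as np

NUM_PROPOSALS = 100  # "e.g. 100 per image", as in the text
FEATURE_DIM = 256    # length of each proposal feature vector

rng = np.random.default_rng(0)

# Learnable proposal boxes: assumed to be normalized (cx, cy, w, h) values in [0, 1],
# initialized here so that every box covers the whole image (an assumption).
proposal_boxes = np.tile(np.array([0.5, 0.5, 1.0, 1.0]), (NUM_PROPOSALS, 1))

# Learnable proposal features: one latent vector per proposal (random init here).
proposal_features = rng.normal(0.0, 0.02, size=(NUM_PROPOSALS, FEATURE_DIM))


def to_absolute_xyxy(boxes_cxcywh, img_w, img_h):
    """Scale normalized (cx, cy, w, h) boxes to pixel (x1, y1, x2, y2) corners."""
    cx, cy, w, h = boxes_cxcywh.T
    x1 = (cx - w / 2) * img_w
    y1 = (cy - h / 2) * img_h
    x2 = (cx + w / 2) * img_w
    y2 = (cy + h / 2) * img_h
    return np.stack([x1, y1, x2, y2], axis=1)


# The same parameters are used for every input image; only the image size changes the scaling.
abs_boxes = to_absolute_xyxy(proposal_boxes, img_w=800, img_h=600)
print(abs_boxes.shape, proposal_features.shape)  # (100, 4) (100, 256)
```

Note that nothing here looks at the image content: unlike RPN proposals, these boxes and features are fixed once training is done.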

These manipulations are performed inside the dynamic instance interactive head that we will cover in the following section.

Proposed Model Features

As the paper's title implies, the model is trained end-to-end. The architecture is elegant: it consists of an FPN-based backbone that extracts features from the image, the learnable proposal boxes and proposal features mentioned above, and a dynamic instance interactive head, which is this paper's main contribution to neural network architecture.

Dynamic instance interactive head
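The key idea of this head, as described in the paper, is that each proposal feature generates the parameters of a small convolution that is then applied only to the RoI features pooled from its own proposal box. The NumPy sketch below illustrates that idea under several assumptions: the two consecutive 1×1 convolutions and a hidden size follow the authors' public implementation as I recall it, normalization layers are omitted, and all weights are random. The function name `dynamic_instance_interaction` is hypothetical. A 1×1 convolution over an S×S RoI is a matrix multiplication over the S·S flattened positions, which is why `np.matmul` is used.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def dynamic_instance_interaction(roi_features, proposal_features, w_params, hidden):
    """Simplified dynamic interaction (normalization layers omitted).

    roi_features:      (N, S*S, C) pooled features for each of N proposal boxes
    proposal_features: (N, C) one learned vector per proposal
    w_params:          (C, 2*C*hidden) linear layer mapping a proposal feature to conv weights
    """
    n, _, c = roi_features.shape
    params = proposal_features @ w_params                # (N, 2*C*hidden), one parameter set per proposal
    w1 = params[:, : c * hidden].reshape(n, c, hidden)   # first 1x1 conv, specific to each proposal
    w2 = params[:, c * hidden :].reshape(n, hidden, c)   # second 1x1 conv, specific to each proposal
    x = relu(np.matmul(roi_features, w1))                # (N, S*S, hidden)
    x = relu(np.matmul(x, w2))                           # (N, S*S, C)
    return x.reshape(n, -1)                              # flattened object feature per proposal


rng = np.random.default_rng(0)
N, S, C, HIDDEN = 10, 7, 16, 4  # small sizes for the demo, not the paper's settings
roi = rng.normal(size=(N, S * S, C))
pro = rng.normal(size=(N, C))
w = rng.normal(scale=0.1, size=(C, 2 * C * HIDDEN))
out = dynamic_instance_interaction(roi, pro, w, hidden=HIDDEN)
print(out.shape)  # (10, 784)
```

Because the convolution weights are generated per proposal, changing one proposal feature only changes the output for that proposal's box; this one-to-one pairing is what distinguishes the head from a shared, static RoI head.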

Main Result

The authors provide several comparison tables that show the performance of the new method. Sparse R-CNN is compared to RetinaNet, Faster R-CNN, and DETR, each in two variants: with a ResNet-50 and with a ResNet-101 backbone.

Here we can see that Sparse R-CNN outperforms RetinaNet and Faster R-CNN with both R50 and R101 backbones, while performing quite similarly to the DETR-based architectures.

The figure shows qualitative results of model inference on the COCO dataset. The first column shows the learned proposal boxes; they do not depend on the input and are the same for every new image. The next columns show the final boxes refined from these proposals, which differ depending on the stage of the iterative refinement process.
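The stage-by-stage refinement can be sketched as repeatedly applying predicted box deltas to the current boxes. The R-CNN-style `(dx, dy, dw, dh)` parameterization and the default of 6 stages are assumptions here, and `toy_predict_deltas` is a hypothetical stand-in for the real head that simply closes half of the gap to a fixed target box at each stage.

```python
import numpy as np


def apply_deltas(boxes, deltas):
    """boxes: (N, 4) as (cx, cy, w, h); deltas: (N, 4) as (dx, dy, dw, dh), R-CNN style."""
    cx, cy, w, h = boxes.T
    dx, dy, dw, dh = deltas.T
    return np.stack([cx + dx * w, cy + dy * h, w * np.exp(dw), h * np.exp(dh)], axis=1)


def refine(proposal_boxes, predict_deltas, num_stages=6):
    """Each stage starts from the previous stage's boxes; returns the boxes after every stage."""
    boxes = proposal_boxes
    history = [boxes]
    for stage in range(num_stages):
        boxes = apply_deltas(boxes, predict_deltas(boxes, stage))
        history.append(boxes)
    return history


TARGET = np.array([0.3, 0.4, 0.2, 0.2])  # hypothetical ground-truth box (cx, cy, w, h)


def toy_predict_deltas(boxes, stage):
    """Hypothetical stand-in for a head: close half of the gap to TARGET each stage."""
    cx, cy, w, h = boxes.T
    dx = 0.5 * (TARGET[0] - cx) / w
    dy = 0.5 * (TARGET[1] - cy) / h
    dw = 0.5 * np.log(TARGET[2] / w)
    dh = 0.5 * np.log(TARGET[3] / h)
    return np.stack([dx, dy, dw, dh], axis=1)


start = np.array([[0.5, 0.5, 1.0, 1.0]])  # a whole-image proposal
history = refine(start, toy_predict_deltas)
for i, b in enumerate(history):
    print(i, np.round(b[0], 3))
```

Printing the boxes after each stage mimics the columns of the figure: the same starting proposal gets progressively tighter around the object.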

Show me the code!

In conclusion, in 2020 we have seen a lot of papers that apply transformers to images. Transformers have proved their worth in NLP, and they are now gradually entering the field of image processing. This paper shows that, with attention mechanisms borrowed from transformers, it is possible to build fast end-to-end detectors whose accuracy is comparable to that of the best two-stage detectors available today.

References