Table of Links

2 Related Work

2.2 Creativity Support Tools for Animation

2.3 Generative Tools for Design

4 Logomotion System and 4.1 Input

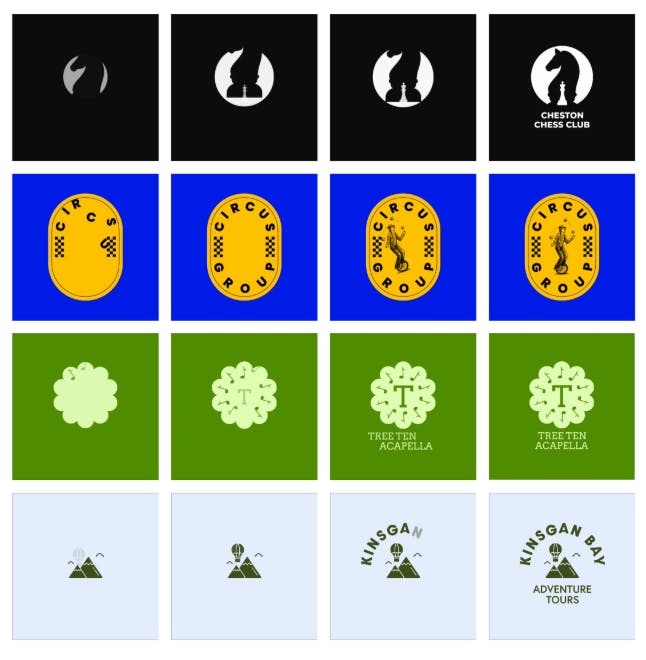

4.2 Preprocess Visual Information

4.3 Visually-Grounded Code Synthesis

5.1 Evaluation: Program Repair

7 Discussion and 7.1 Breaking Away from Templates

7.2 Generating Code Around Visuals

4.3 Visually-Grounded Code Synthesis

The HTML, design concept, and initial image are then passed as inputs to the code synthesis stage. We use GPT-4-V to implement a code timeline using anime.js, a popular JavaScript library for animation [11]. Although we chose anime.js, the generated code manipulates elements through their transforms (position, scale, rotation), easing curves, and keyframes, which are abstractions that generalize across many libraries relevant to code-generated visuals (e.g., CSS). We used the following prompt to generate animations:

    Implement an anime.js timeline that animates this image,
    which is provided in image and HTML form below.
    <HTML>
    Here is a suggested concept for the animated logo:
    <DESIGN CONCEPT>
    Return code in the following format.
    ```javascript
    timeline
    .add({{
    // Return elements to their original positions
    // Make sure to use from-to format
    // Use -512px and 512px to bring elements in from offscreen.
    }}
    ```

The resulting animation timeline was then executed using Selenium, browser automation software that drives a Chrome browser.
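For illustration, a timeline of the kind this prompt asks for might look like the following minimal sketch. It assumes the anime.js v3 API (`anime.timeline` and `.add` with from-to array values). The element IDs, durations, offsets, and easing are hypothetical and are not taken from LogoMotion's actual output.

```javascript
// Hypothetical per-element steps in from-to format: [startValue, endValue].
// Every property ends at its neutral value (offset 0, scale 1, opacity 1),
// so each element returns to its original position in the layout.
const steps = [
  { targets: '#logo-icon', translateX: [-512, 0], opacity: [0, 1], duration: 800, offset: 0 },
  { targets: '#logo-title', translateY: [512, 0], opacity: [0, 1], duration: 600, offset: 400 },
  { targets: '#logo-tagline', scale: [0.5, 1], opacity: [0, 1], duration: 500, offset: 900 },
];

// Builds an anime.js timeline from the steps. `anime` is passed in so the
// sketch can run in a browser (window.anime) or against a stub in tests.
function buildTimeline(anime, stepList) {
  const timeline = anime.timeline({ easing: 'easeOutExpo', autoplay: false });
  for (const { offset, ...params } of stepList) {
    // A numeric second argument is an absolute offset in milliseconds.
    timeline.add(params, offset);
  }
  return timeline;
}
```

In a page that loads anime.js, `buildTimeline(anime, steps).play()` would start the animation. Because every from-to pair ends at the neutral value, the last frame should match the target layout if the code runs as intended.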

4.3.1 Visually Grounded Program Repair. A necessary stage after program synthesis is program repair. AI-generated code is imperfect and prone to compilation issues and unintended side effects. Additionally, because our approach generates animations for every element, multiple animations have to compile and execute properly, which increases the chance of at least one point of failure in the final animation. To address these errors, we introduce a mechanism for visually-grounded program repair that fixes “visual bugs”, cases where elements do not return to their original positions after the animation. We detected these bugs by comparing the bounding boxes of elements in the last frame of our timeline against those in the target layout. Specifically, we checked for correctness in left, top, width, height, and opacity. This allowed us to check for issues in position, scale, and opacity directly and rotation indirectly.
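The bounding-box check can be sketched as a comparison of per-element properties between the last frame and the target layout. This is a minimal sketch, not the authors' implementation: the function name, the data shape, and the tolerance values are assumptions, since the text does not state a threshold.

```javascript
// Assumed per-property tolerances: pixels for geometry, a fraction for opacity.
const TOLERANCES = { left: 1, top: 1, width: 1, height: 1, opacity: 0.01 };

// Returns the IDs of elements whose final state deviates from the target
// layout by more than the tolerance in any checked property. Elements that
// are missing from the final frame are also flagged.
function findVisualBugs(finalFrame, targetLayout, tolerances = TOLERANCES) {
  const buggy = [];
  for (const [id, target] of Object.entries(targetLayout)) {
    const actual = finalFrame[id];
    const mismatched = !actual || Object.keys(tolerances).some(
      // Written as !(diff <= tol) so a missing (NaN) value is also flagged.
      (prop) => !(Math.abs(actual[prop] - target[prop]) <= tolerances[prop])
    );
    if (mismatched) buggy.push(id);
  }
  return buggy;
}

// Hypothetical data: the title ends 512 px below its target position.
const targetLayout = {
  icon: { left: 100, top: 50, width: 64, height: 64, opacity: 1 },
  title: { left: 180, top: 60, width: 200, height: 40, opacity: 1 },
};
const finalFrame = {
  icon: { left: 100, top: 50, width: 64, height: 64, opacity: 1 },
  title: { left: 180, top: 572, width: 200, height: 40, opacity: 1 },
};
const flagged = findVisualBugs(finalFrame, targetLayout); // ['title']
```

In a browser, such values could plausibly be collected with `getBoundingClientRect()` and `getComputedStyle(el).opacity`. A leftover rotation generally changes the width and height of an element's axis-aligned bounding box, which is one way rotation can be caught indirectly.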

We next handled bug fixing in a layer-wise fashion. The inputs to this step were the ID of the element detected to have an issue, the animation code, and two images showing the element at its target layout and where it actually ended in the last frame. GPT-4-V was then prompted to return an isolated code snippet describing the issue and a corrected code solution. This code snippet was then merged back into the original code using another GPT-4-V call. We checked the bounding boxes of image and text elements one layer at a time, which reduced the amount of information the LLM had to process and allowed it to more easily analyze the visual difference between the two images. Once the bug fixes were complete, we generated the final output of LogoMotion: an HTML page with JavaScript code written with anime.js. An overview of the inputs and outputs of this stage is illustrated in Figure 4.
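The layer-wise repair loop described above can be sketched as follows. The two model calls are represented by injected functions, `requestFix` and `mergeFix`, which are hypothetical stand-ins; their names, signatures, and the shape of the request object are assumptions and not the authors' API.

```javascript
// Repairs flagged elements one at a time (layer-wise).
// requestFix(request): stand-in for the first model call; resolves to an
//   isolated corrected snippet for a single element.
// mergeFix(code, fix): stand-in for the second model call; resolves to the
//   full animation code with the snippet merged in.
async function repairLayerwise(code, flaggedIds, screenshots, requestFix, mergeFix) {
  let repaired = code;
  for (const elementId of flaggedIds) {
    // Inputs named in the text: element ID, animation code, and two images
    // (the element at its target layout and where it ended in the last frame).
    const fix = await requestFix({
      elementId,
      code: repaired,
      targetImage: screenshots[elementId].target,
      actualImage: screenshots[elementId].actual,
    });
    repaired = await mergeFix(repaired, fix);
  }
  return repaired;
}
```

Handling one element per request keeps each request small: it contains only that element's two images plus the current code, which matches the stated motivation of reducing what the model has to process.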

4.3.2 Interactive Editing. LogoMotion is a prompt-based generative approach. Within the operators, there are many places where design norms for different formats could change. For example, the duration of the animation, the level of detail described by the design concept, and the different kinds of groupings that can be made over a layout (e.g., group by proximity, group by visual similarity, group by regions of the canvas) are all free variables that could be considered high-level controls. To explore how users engage with these high-level controls, we also let users interactively iterate upon the automatically generated animated logos. Interactive editing exposes a simple prompt in which users describe how they want the animation to change. Similar to the visual debugging stage, GPT-4-V converts this requested change (this time user-provided) into an isolated portion of code, which is merged back into the original timeline. Because the change is merged into the previous version of the animation code, users do not have to respecify how the other elements move.

Authors:

(1) Vivian Liu, Columbia University (vivian@cs.columbia.edu);

(2) Rubaiat Habib Kazi, Adobe Research (rhabib@adobe.com);

(3) Li-Yi Wei, Adobe Research (lwei@adobe.com);

(4) Matthew Fisher, Adobe Research (matfishe@adobe.com);

(5) Timothy Langlois, Adobe Research (tlangloi@adobe.com);

(6) Seth Walker, Adobe Research (swalker@adobe.com);

(7) Lydia Chilton, Columbia University (chilton@cs.columbia.edu).

This paper is