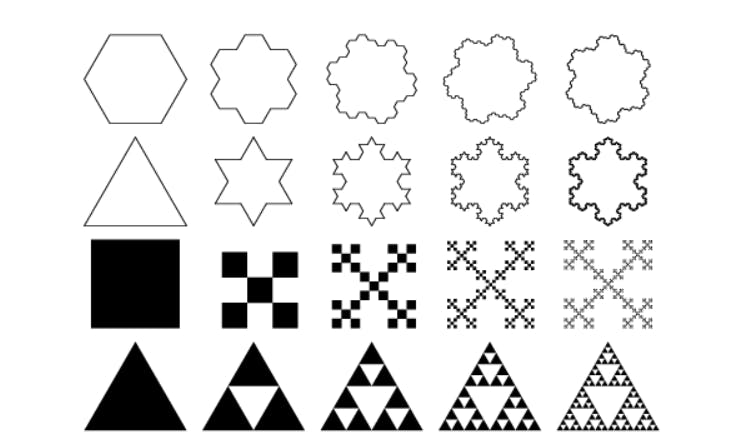

Fractal Designs, Simple to Complex (Source)

Complex systems emerge from simple parts.

Bits form bytes, letters form words, and basic arithmetic builds into entire mathematical models. In software, microservices become platforms like Netflix. This pattern, small components combining into powerful wholes, is the foundation of complexity itself.

The great gift that technologies like Apache Kafka gave the world was decoupling: it broke monoliths into independent services and teams that could scale. Kafka didn’t just change how data moved; it reshaped how we design systems to be modular, loosely coupled, and easier to evolve.

Multi-agent systems (MAS) offer a similar shift for AI.

MAS introduces a way of structuring intelligence, breaking down complex AI tasks into simpler, focused agents that work together to solve harder problems. It’s a design shift that brings clear benefits: better testing, more reliable behavior, and scalable systems.

Generative AI often suffers from the opposite problem: too much complexity packed into a single prompt. In this post, we’ll explore how MAS, together with two related concepts, emergence and decomposition, offers a better path for building intelligent, reliable AI systems.

Emergence: Complexity from Simplicity

The Biological Levels of Organization From an Atom to a Multicellular Organism (Source:

We see this bottom-up complexity in countless domains.

When I first studied

Tetromino Patterns from Conway’s Game of Life (Source:

With only four simple rules, you could simulate behaviors that looked like movement, reproduction, and even computation itself.
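To make that concrete, here is a minimal sketch of one generation of the Game of Life in Python. It assumes an unbounded board stored as a set of live `(x, y)` coordinates, and it folds the four rules into a single birth-or-survival test. The function name `step` and the starting pattern are illustrative choices, not taken from any particular implementation.

```python
from collections import Counter

def step(live):
    """Advance a set of live (x, y) cells by one generation."""
    # Count how many live neighbours each nearby cell has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A dead cell with exactly 3 neighbours is born; a live cell with
    # 2 or 3 neighbours survives; every other cell is dead next turn.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }

# A glider: five cells that appear to "walk" diagonally across the board.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

# After four generations the same shape reappears, shifted one cell
# along each axis.
print(state == {(x + 1, y + 1) for (x, y) in glider})
```

Nothing in `step` knows what a glider is. The apparent motion comes entirely from the local rule applied to every cell.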

That lesson stuck with me: you don’t need to engineer complexity directly. You can build small parts with clear behaviors and let them interact. Complexity emerges.

Another example is ant colonies. Each ant follows basic instructions: lay pheromones, follow trails, pick up food. There’s no leader, no central coordination, yet the colony exhibits intelligent, adaptive behavior. It organizes itself.

Ant Colony Behavior (Source:

This is the essence of emergence. Intelligence isn’t always about the smartest part; it’s about the smartest system. That is how we need to think about AI, and it is the principle that multi-agent systems bring to it.

Instead of building one all-knowing model, MAS encourages you to design multiple simple agents, each focused on a narrow task. Like ants or Conway’s gliders, they interact to form a system that can solve problems much larger than any one part could handle alone.

The Trouble with Generative AI

Generative AI is powerful, but it can also be chaotic. The same foundation model that can summarize a document can also write code, explain a joke, or draft an email. That flexibility is impressive, and it is part of what drives the interest, but it also introduces a problem: we’ve packed too much complexity into a single prompt-shaped interface.

This is the opposite of how purpose-built AI models are designed.

Traditional models are scoped to one job, such as fraud detection, recommendation, or churn prediction. The inputs and outputs are known, the evaluation metrics are clear, and testing is straightforward. They lack generality, but that’s exactly what makes them reliable, testable, and deployable at scale.

Generative AI flips this.

It's like giving an employee fifteen unrelated tasks at once, with no prioritization, no clear inputs, and no defined outputs. It’s hard to evaluate their work, not because they’re bad at it, but because we’ve defined the problem in a way that resists structure.

From a systems perspective, this is the opposite of emergence.

Instead of building complexity through interaction between simple components, we’re injecting complexity all at once and hoping for coherence. It’s brittle, hard to debug, and nearly impossible to test reliably.

Generative AI systems suffer from:

- Testing difficulties – The input space is infinite, and the output space is open-ended. How do you assert correctness in a system that can say anything?

- Poor predictability of outputs – Small changes to prompts can produce wildly different results. You don’t know what you're going to get. This makes designing and testing these systems more challenging than their conventional alternatives.

- Scalability limits – Complex, overloaded prompts don’t scale well organizationally. Everything becomes entangled in the prompt, and the model gets confused.

These challenges aren’t just inconvenient; they make generative AI systems hard to engineer, scale, and trust in production. But there’s another way to approach the problem. A way that embraces complexity through structure, not despite it. One that draws from emergence, modularity, and clear system boundaries.

That’s where multi-agent systems come in.

From Models to Agents

So how do we bring structure back into AI systems? We start thinking in terms of agents.

An agent is more than just a model. It’s a goal-directed entity with memory, tools, and autonomy. Instead of asking a model to respond to a prompt, you give an agent a task and let it decide how to achieve it. It can plan, call APIs, retrieve information, interact with other agents, and adapt based on context.

Agent Architecture (Inspired by

One of the most powerful things agents can do is execute deterministic processes, like querying a database, running a script, or triggering a workflow. Foundation models provide a natural language interface to those actions, removing the friction that used to require specialized knowledge. The deep database expertise of a senior analyst, how to structure the right query, filter by quarter, join across systems, can now be surfaced and reused by a non-technical executive who simply wants to know what’s happening with the revenue forecast.

This is where agents become more than just clever wrappers around LLMs. They can connect probabilistic reasoning (language) with deterministic systems (code and data), a fusion that gives language models real-world leverage.
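A minimal sketch of that fusion follows, under loud assumptions: the `Agent` class, the `query_revenue` tool, and the revenue figures are all hypothetical, and the language model is replaced by a keyword match so the example runs on its own. In a real agent, `pick_quarter` would be a model call that turns the question into tool arguments.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical in-memory data standing in for a real database.
REVENUE_BY_QUARTER = {"Q1": 1_200_000, "Q2": 1_350_000}

def query_revenue(quarter: str) -> int:
    """Deterministic tool: look up revenue for one quarter."""
    return REVENUE_BY_QUARTER[quarter]

@dataclass
class Agent:
    goal: str
    tools: Dict[str, Callable[[str], int]]
    memory: List[str] = field(default_factory=list)

    def pick_quarter(self, question: str) -> str:
        # Stand-in for the probabilistic step. A real agent would ask a
        # language model to extract the tool arguments from the question.
        for quarter in REVENUE_BY_QUARTER:
            if quarter.lower() in question.lower():
                return quarter
        raise ValueError(f"no known quarter mentioned in: {question!r}")

    def run(self, question: str) -> str:
        quarter = self.pick_quarter(question)
        # The deterministic step: same input, same output, every time.
        revenue = self.tools["query_revenue"](quarter)
        self.memory.append(f"query_revenue({quarter}) -> {revenue}")
        return f"Revenue for {quarter} was {revenue:,}."

agent = Agent(goal="answer revenue questions",
              tools={"query_revenue": query_revenue})
answer = agent.run("What was revenue in q2?")
print(answer)
```

The split is the point of the design: the fuzzy part only decides which tool to call and with what arguments, while the number in the answer comes from ordinary code that can be tested on its own.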

Even more powerful is when you connect multiple agents together.

That’s the idea behind multi-agent systems: a framework where each agent is designed with a narrow focus, but collectively they coordinate to solve complex problems. Planning, routing, executing, refining, all handled by different agents working in concert.

As

You get modular components with defined responsibilities, interfaces, and scopes. That makes them easier to test, scale, and evolve independently, just like well-designed microservices in software architecture.

And just like in ant colonies or Conway’s Game of Life, the intelligence isn’t embedded in one massive entity. It emerges from the interaction of smaller, purpose-driven units. This is where MAS mirrors nature: small agents following simple rules can collectively generate sophisticated, reliable behavior.

In the next section, we’ll dig into the advantages of this approach and why it might be the most practical path to building complex, trustworthy AI systems.

Why Multi-Agent Systems Work

One of the key conceptual shifts MAS enables is moving from open-world to closed-world problem framing.

A general-purpose foundation model operates in an open world: it can be asked anything, in any way, and is expected to respond appropriately. That’s powerful, but it also makes the system unbounded and unpredictable. In contrast, an agent operates in a closed world: it has a specific goal, access to known tools, and clear success criteria. This boundedness makes agents testable, observable, and composable, which is exactly what’s needed for real-world reliability.

1. Testability

When each agent has a narrow scope, you can test it like any traditional software component. A retrieval agent can be validated on recall and precision. A summarizer can be benchmarked against expected outputs. You no longer have to treat the system like a black box with infinitely many valid responses; you can probe and improve each piece in isolation.
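As a rough illustration of testing a retrieval agent in isolation, the sketch below scores a retriever on precision and recall against a hand-labeled set of relevant documents. The `retrieve` function, its tiny index, and the document IDs are hypothetical stand-ins for a real agent and a real evaluation set.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one query."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

def retrieve(query):
    # Hypothetical retriever: a real agent would search a document store.
    index = {"q2 revenue": ["doc_3", "doc_7", "doc_9"]}
    return index.get(query, [])

# Hand-labeled ground truth for one query.
relevant_docs = ["doc_3", "doc_7", "doc_8", "doc_12"]

precision, recall = precision_recall(retrieve("q2 revenue"), relevant_docs)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Two of the three retrieved documents are relevant, and two of the four relevant documents were found, so a regression in either number shows up as a failing test rather than a vague sense that answers got worse.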

2. Observability

In a monolithic LLM interaction, you get input, output, and not much else. With MAS, every agent step is visible: what data was fetched, what reasoning was applied, what decision was made. That makes debugging tractable, auditing possible, and failure modes easier to understand and fix.
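One lightweight way to get that visibility, sketched here under assumptions, is to wrap each agent so every call appends a record to a shared trace. The `traced` helper, the agent names, and the sample numbers are hypothetical; a production system would more likely emit structured logs or spans to a tracing backend.

```python
import time
from typing import Any, Callable, Dict, List

# Shared, append-only record of every agent step.
trace: List[Dict[str, Any]] = []

def traced(agent_name: str, fn: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Wrap an agent function so each call is recorded in `trace`."""
    def wrapper(payload: Any) -> Any:
        start = time.perf_counter()
        result = fn(payload)
        trace.append({
            "agent": agent_name,
            "input": payload,
            "output": result,
            "seconds": time.perf_counter() - start,
        })
        return result
    return wrapper

# Two toy agents: one fetches numbers, one totals them.
fetch = traced("retriever", lambda region: [120, 135, 150])
total = traced("analysis", sum)

answer = total(fetch("EMEA"))

for record in trace:
    print(record["agent"], record["input"], "->", record["output"])
```

When the final number looks wrong, the trace shows whether the retriever fetched bad data or the analysis step mishandled good data.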

3. Composability

Agents are building blocks. Once you’ve built a reliable planner or a summarizer, you can reuse them across different workflows. This aligns perfectly with how modern software is built, through reusable, composable services, and opens the door to faster iteration and lower operational overhead.

4. Scalability

Different agents can be developed and maintained by different teams. Each can be versioned, monitored, and deployed independently. That’s crucial in a growing organization or product where responsibilities need to be distributed. You scale the system by scaling the team without increasing the complexity for any single contributor.

5. Alignment with Real-World Constraints

Real-world tasks are not free-form language problems; they’re structured workflows with known inputs, outputs, constraints, and goals. MAS allows us to encode that structure into the system itself. You can insert guardrails, fallback logic, and human-in-the-loop checks, all within a coherent architecture.

MAS doesn’t eliminate complexity. But it organizes it. It gives you the ability to engineer AI systems with the same rigor and reliability we expect from modern software.

An Example: Revenue Forecasting with MAS

Imagine a company wants to forecast revenue across regions and product lines. Doing this well requires data retrieval, trend analysis, anomaly detection, and narrative reporting. Trying to prompt a single foundation model to do all of that in one shot is brittle and opaque: hard to test, hard to trust, and hard to scale.

With MAS, we decompose the problem:

- A retriever agent pulls sales and financial data from internal systems.

- An analysis agent applies deterministic logic or invokes a forecasting model.

- A QA agent checks for missing data, outliers, or violations of business rules.

- A reporting agent summarizes the results in natural language.

- A planner agent orchestrates the workflow and handles errors or retries.

Each agent is scoped, testable, and reusable.
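The decomposition above can be sketched as a handful of small functions with a planner wiring them together. Everything here is an assumption for illustration: the `SALES` data, the naive trend forecast, and the function names. Retries are left out, and the reporting step uses a format string where a real system would likely call a language model.

```python
from statistics import mean

# Hypothetical sales history; a real retriever would query internal systems.
SALES = {"EMEA": [100.0, 110.0, 120.0], "APAC": [80.0, 85.0, 90.0]}

def retriever(region):
    """Pull the sales history for a region."""
    return SALES[region]

def qa(history):
    """Return a list of problems found in the data (empty if clean)."""
    problems = []
    if len(history) < 2:
        problems.append("need at least two data points")
    if any(value < 0 for value in history):
        problems.append("negative sales value")
    return problems

def analysis(history):
    """Naive forecast: last value plus the average period-over-period change."""
    deltas = [b - a for a, b in zip(history, history[1:])]
    return history[-1] + mean(deltas)

def reporter(region, forecast):
    """Summarize the result in plain language."""
    return f"{region}: next-period revenue forecast is {forecast:.1f}"

def planner(region):
    """Orchestrate the agents and handle errors."""
    try:
        history = retriever(region)
    except KeyError:
        return f"{region}: no data available"
    problems = qa(history)
    if problems:
        return f"{region}: data rejected ({'; '.join(problems)})"
    return reporter(region, analysis(history))

print(planner("EMEA"))
print(planner("LATAM"))
```

Each function can be unit tested with plain inputs, and swapping the naive forecast for a proper model changes only `analysis`.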

As a system, it produces a more reliable and explainable result. And because the interface is natural language, a non-technical user can ask a high-level question like “How are we tracking against Q2 targets?” and get an answer grounded in structured data and deterministic logic.

This approach aligns AI with how businesses operate: modular systems, clear ownership, and verifiable outcomes.

Closing Thoughts

Building intelligent systems isn’t just about having powerful models; it’s about how we structure and compose them. Complex behavior doesn’t require complex parts. It requires well-designed, simple parts working together, guided by clear boundaries and interactions. That’s the essence of emergence and the core design principle behind multi-agent systems.

By breaking down monolithic prompts into smaller, testable agents, we regain control. We shift AI from an experimental black box into an engineered system: observable, scalable, and aligned with real business workflows.

Foundation models gave us a powerful interface. MAS gives us the architectural pattern to make it useful, dependable, and extensible.

The future of AI isn’t just bigger models; it’s better system design. Good ol’ practical computer science.

Written by Sean Falconer

Sean is an AI Entrepreneur in Residence at Confluent where he works on AI strategy and thought leadership. Sean’s been an academic, startup founder, and Googler. He has published works covering a wide range of topics from AI to quantum computing. Sean also hosts the popular engineering podcasts Software Engineering Daily and Software Huddle. You can connect with Sean on LinkedIn.