Authors:

(1) Xiyu Zhou, School of Computer Science, Wuhan University, Wuhan, China ([email protected]);

(2) Peng Liang (Corresponding Author), School of Computer Science, Wuhan University, Wuhan, China ([email protected]);

(3) Beiqi Zhang, School of Computer Science, Wuhan University, Wuhan, China ([email protected]);

(4) Zengyang Li, School of Computer Science, Central China Normal University, Wuhan, China ([email protected]);

(5) Aakash Ahmad, School of Computing and Communications, Lancaster University Leipzig, Leipzig, Germany ([email protected]);

(6) Mojtaba Shahin, School of Computing Technologies, RMIT University, Melbourne, Australia ([email protected]);

(7) Muhammad Waseem, Faculty of Information Technology, University of Jyväskylä, Jyväskylä, Finland ([email protected]).


ABSTRACT

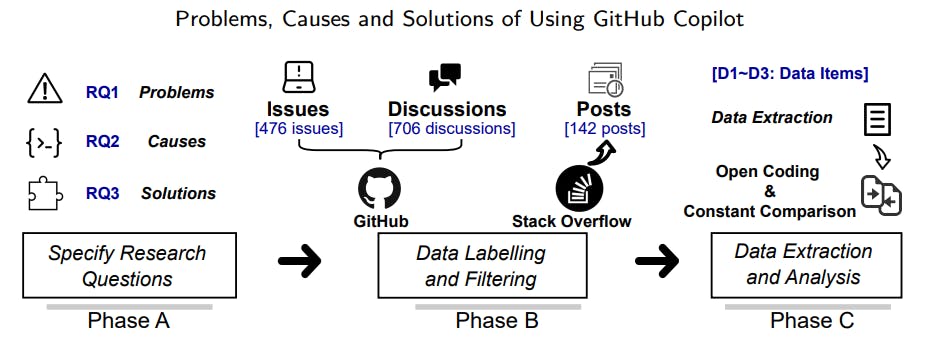

With the recent advancement of Artificial Intelligence (AI) and Large Language Models (LLMs), AI-based code generation tools have become a practical solution for software development. GitHub Copilot, the AI pair programmer, uses machine learning models trained on a large corpus of code snippets to generate code suggestions using natural language processing. Despite its popularity in software development, there is limited empirical evidence on the actual experiences of practitioners who work with Copilot. To this end, we conducted an empirical study to understand the problems that practitioners face when using Copilot, as well as their underlying causes and potential solutions. We collected data from 476 GitHub issues, 706 GitHub discussions, and 142 Stack Overflow posts. Our results reveal that (1) Operation Issue and Compatibility Issue are the most common problems faced by Copilot users, (2) Copilot Internal Error, Network Connection Error, and Editor/IDE Compatibility Issue are identified as the most frequent causes, and (3) Bug Fixed by Copilot, Modify Configuration/Setting, and Use Suitable Version are the predominant solutions. Based on the results, we discuss potential areas for improving Copilot and provide implications for Copilot users, the Copilot team, and researchers.

1. Introduction

In software development, developers strive for a level of automation and intelligence at which most code can be generated automatically with minimal human coding effort. Several studies (e.g., Luan et al. (2019), Robillard et al. (2010)) and software products (e.g., Eclipse Code Recommenders (Eclipse, 2019)) have aimed to improve developer efficiency by building systems that recommend and generate code. Large Language Models (LLMs) are a class of deep-learning-based natural language processing techniques that can automatically learn the grammar, semantics, and pragmatics of language and generate a wide variety of content. Recently, with the rapid development of LLMs, AI code generation tools trained on large amounts of code snippets have been increasingly in the spotlight (e.g., AI-augmented development in Gartner Trends 2024 (Gartner, 2023)), making it possible for programmers to generate code automatically with minimal human effort (Austin et al., 2021).

On June 29, 2021, GitHub and OpenAI jointly announced the launch of a new product named GitHub Copilot (GitHub, 2024c). This tool is powered by OpenAI’s Codex, a large-scale neural network model trained on a massive dataset of source code and natural language text. The goal of GitHub Copilot is to provide advanced code autocompletion and generation capabilities to developers, effectively acting as an “AI pair programmer” that can assist with coding tasks in real time. Copilot has been designed to work with a wide range of Integrated Development Environments (IDEs) and code editors, such as VSCode, Visual Studio, Neovim, and JetBrains (GitHub, 2024c). By collecting contextual information such as function names and comments, Copilot can generate code snippets in a variety of programming languages (e.g., Python, C++, Java), which can improve developers’ productivity and help them complete coding tasks more efficiently (Imai, 2022).

Since its release, Copilot has gained significant attention within the developer community, reaching a total of 1.3 million paid users by February 2024 (Wilkinson, 2024). Many studies have examined its effectiveness and raised concerns about its potential impact on code security and intellectual property (Pearce et al., 2022) (Bird et al., 2023) (Jaworski and Piotrkowski, 2023). Some prior research investigated the quality of the code generated by Copilot (Yetistiren et al., 2022) (Nguyen and Nadi, 2022), while other work examined its performance in practical software development (Imai, 2022) (Barke et al., 2023) (Peng et al., 2023).

However, there is currently a lack of systematic categorization, from the perspective of developers, of the problems that arise during the practical use of Copilot, of the causes behind them, and of the solutions for addressing them. To this end, we conducted a thorough analysis of the problems faced by software developers when coding with GitHub Copilot, as well as their causes and solutions, by collecting data from GitHub Issues, GitHub Discussions, and Stack Overflow (SO) posts, to help understand the limitations of Copilot in practical settings.

Our findings show that: (1) Operation Issue and Compatibility Issue are the most common problems faced by developers, (2) Copilot Internal Error, Network Connection Error, and Editor/IDE Compatibility Issue are identified as the most frequent causes, and (3) Bug Fixed by Copilot, Modify Configuration/Setting, and Use Suitable Version are the predominant solutions.

The contributions of this work are as follows:

• We provided a two-level taxonomy for the problems of using Copilot in software development practice.

• We developed a one-level taxonomy for the causes of the problems and the solutions to address the problems.

• We drew a mapping from the identified problems to their causes and solutions.

• We proposed practical guidelines for Copilot users, the Copilot team, and other researchers.
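The taxonomies and the problem-to-cause/solution mapping listed above can be pictured as a small data structure. The sketch below is a hypothetical illustration, not the authors' analysis tooling: it assumes each collected issue, discussion, or post has been manually coded with a problem category and a problem type (the two taxonomy levels) and, where identifiable, one cause and one solution. The names `CodedPost`, `build_mapping`, and `most_common_problems` are invented for illustration.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CodedPost:
    """One hypothetical coded data item (GitHub issue, discussion, or SO post)."""
    problem_category: str            # level 1 of the problem taxonomy
    problem_type: str                # level 2 of the problem taxonomy
    cause: Optional[str] = None      # one-level cause taxonomy; None if not identifiable
    solution: Optional[str] = None   # one-level solution taxonomy; None if not identifiable


def build_mapping(posts):
    """Map each problem category to frequency counts of its causes and solutions."""
    causes = defaultdict(Counter)
    solutions = defaultdict(Counter)
    for post in posts:
        # Only posts where a cause (or solution) was identified contribute to that map.
        if post.cause is not None:
            causes[post.problem_category][post.cause] += 1
        if post.solution is not None:
            solutions[post.problem_category][post.solution] += 1
    return causes, solutions


def most_common_problems(posts, n=2):
    """Rank problem categories by the number of posts coded with them."""
    return Counter(p.problem_category for p in posts).most_common(n)
```

Given the coded posts, `causes["Operation Issue"].most_common(1)` would return the most frequent cause recorded for that category. Keeping causes and solutions as separate counters reflects the assumption that a post may reveal a cause without a solution, or vice versa.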

The rest of this paper is structured as follows: Section 2 presents the Research Questions (RQs) and research process. Section 3 provides the results and their interpretation. Section 4 discusses the implications based on the research results. Section 5 clarifies the potential threats to the validity of this study. Section 6 reviews the related work. Finally, Section 7 concludes this work along with the future directions.

This paper is