Artificial Intelligence ≠ Artificial Judgment

It’s 2025, and generative AI is writing poems, booking appointments, and drafting contracts. But ask it to flag a shell company funnelling illicit crypto to sanctioned entities — and it might fail, spectacularly.

In a world where financial crime is evolving faster than algorithms can keep up, the biggest myth in RegTech is that AI can “solve” compliance. Spoiler: it can’t. At least, not yet.

And for compliance professionals, this gap isn’t academic — it’s operational risk.

The Reality of AI in Compliance

AI adoption is accelerating. According to the Bank of England’s 2024 report on AI in financial services, 75% of firms are already using AI, and another 10% plan to adopt it within the next three years. Yet widespread usage doesn’t guarantee effectiveness, especially in compliance. Many institutions still rely on rules-based detection as their primary line of defense, and rules alone may not be sufficient against modern financial crime.

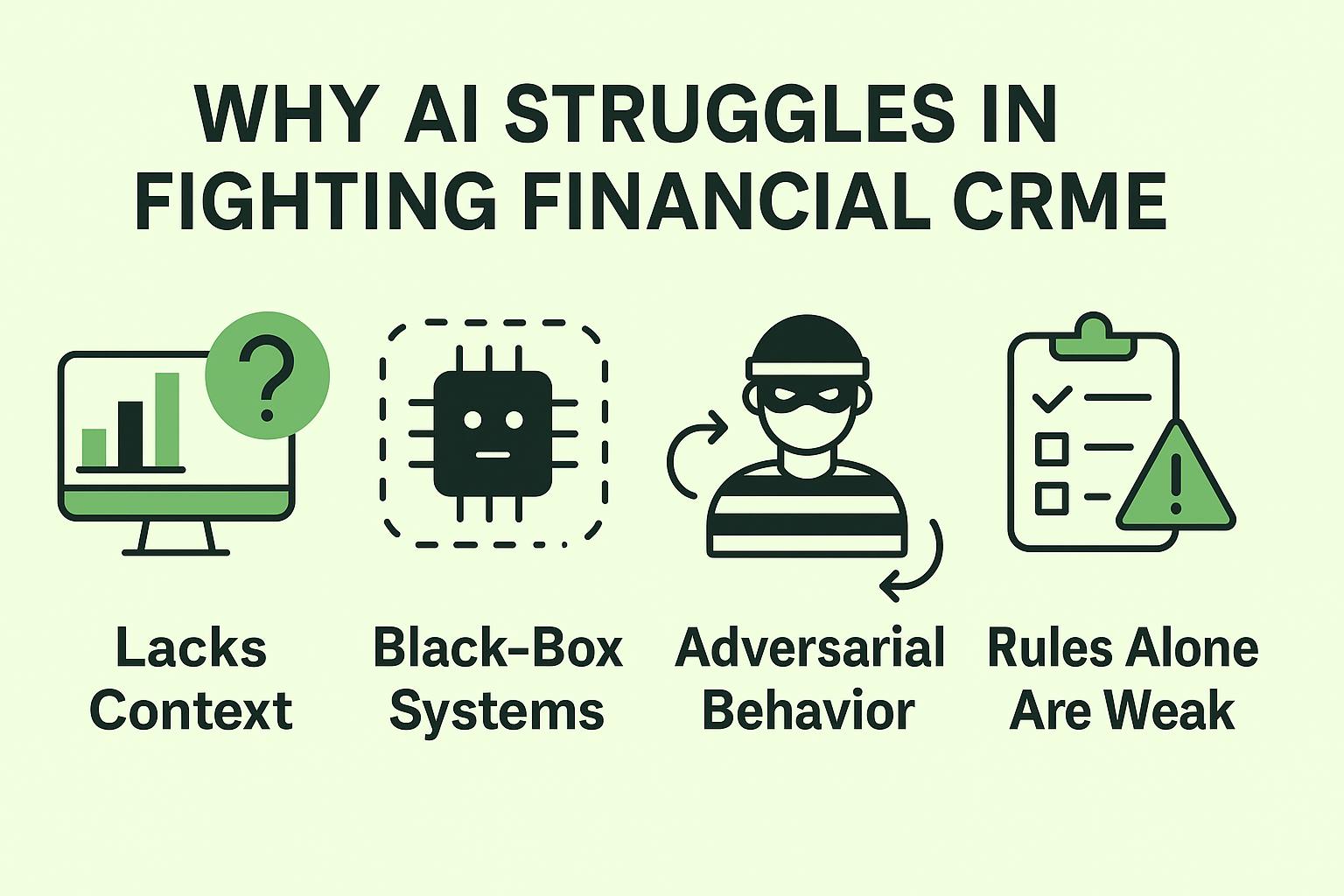

Why the disconnect?

Because while AI excels at identifying patterns, financial crime isn’t just about patterns — it’s about intent.

A money mule using a new device ID won’t trip a neural net trained on legacy fraud data. A trade-based laundering scheme routed through a “clean” jurisdiction can fly under the radar. AI needs context, and criminals are experts at removing it.

Rules-Based Systems: Still Holding the Line (For Now)

Most compliance stacks today still rely on rules-based logic: "if-then" scenarios designed to trigger alerts when thresholds are breached (e.g., transactions over $10,000, dealings with sanctioned countries, unusual behavioural changes).

They’re easy to explain, regulator-approved, and relatively simple to tune. But they’re also brittle. Criminals game them with slight variations, and they generate massive volumes of alerts — the vast majority of which are false positives.
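To make the brittleness concrete, here is a minimal sketch of an "if-then" rule set and one way a slight variation slips past it. The `Transaction` fields, the threshold value, and the country codes are illustrative assumptions, not taken from any real rules engine or sanctions list.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    account: str
    amount: float   # assumed to be in USD
    country: str    # counterparty country code


# Hypothetical values, for illustration only.
REPORTING_THRESHOLD = 10_000
SANCTIONED_COUNTRIES = {"XX", "YY"}  # placeholder codes, not a real list


def rule_alerts(tx: Transaction) -> list[str]:
    """Return the name of every rule this transaction trips."""
    alerts = []
    if tx.amount > REPORTING_THRESHOLD:
        alerts.append("amount_over_threshold")
    if tx.country in SANCTIONED_COUNTRIES:
        alerts.append("sanctioned_country")
    return alerts


# One large transfer trips the amount rule.
single = Transaction("acct-1", 12_000, "GB")
# The same value split into three smaller payments trips nothing.
split = [Transaction("acct-1", 4_000, "GB") for _ in range(3)]

print(rule_alerts(single))
print([rule_alerts(tx) for tx in split])
```

Each rule is easy to read and audit, which is the appeal. The split payments show the cost: a rule that only looks at one transaction at a time has no view of the pattern across them.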

That’s why AI was introduced — to reduce the noise. But without context, AI can make different mistakes, just more quietly.

The future isn’t about replacing rules — it’s about combining them with context-aware, explainable models.

False Positives, False Negatives, and the Compliance Bottleneck

One of AI’s promises in compliance is reducing false positives. But in practice?

“The majority of participants recognise the transparency challenges relating to the use of AI/ML models.” — Deloitte EMEA Model Risk Management Survey, 2023

The question isn’t only whether a model reduces noise and improves efficiency; it’s whether its decisions can be explained. If your AI tool flags an entity for suspicious activity, but your compliance officer can’t explain why, regulators will ask the questions your model can’t answer.

Financial Crime is an Adversarial System

Here’s the core challenge: AI assumes the world behaves statistically. Criminals don’t.

They probe, evade, and adapt. Every time a system detects a laundering method, a new one is created to slip past it.

In cybersecurity, this is known as an adversarial environment — a setting where attackers are continuously testing your defences. Financial crime behaves the same way. Traditional supervised learning struggles here. It’s like playing chess when your opponent secretly changes the rules after every move.

So What’s the Alternative?

Forward-thinking compliance teams are moving away from “black box” AI models toward smarter, composable risk infrastructure. That means systems that combine explainability, flexibility, and human oversight.

Here’s what that looks like in practice:

- Hybrid Explainable Systems

Models that blend rule-based logic with AI-driven prioritization. These reduce noise and preserve auditability.

Rather than replacing existing rules engines, leading compliance teams are building hybrid infrastructures that allow analysts to see why a transaction was flagged, what data influenced the decision, and how it aligns with policy.

The guide "Improving AI Explainability: Key Strategies for Compliance & RegTech", listed under further reading, covers this in more depth, from model design to regulatory alignment.
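One possible shape for such a hybrid, sketched under assumptions: rules decide what gets alerted, a model score only decides the review order, and every alert carries the rule names and feature contributions an analyst would need to explain it. The `Alert` structure, the score range, and the feature names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    tx_id: str
    rule_hits: list[str]      # names of the rules that fired (the audit trail)
    model_score: float        # hypothetical model output in [0, 1]
    top_features: dict[str, float] = field(default_factory=dict)  # feature -> contribution


def triage(alerts: list[Alert]) -> list[Alert]:
    """Keep only rule-backed alerts, ordered by model score (highest first)."""
    flagged = [a for a in alerts if a.rule_hits]
    return sorted(flagged, key=lambda a: a.model_score, reverse=True)


def explain(alert: Alert) -> str:
    """Build a plain-text reason an analyst could attach to a case file."""
    rules = ", ".join(alert.rule_hits)
    ranked = sorted(alert.top_features.items(), key=lambda kv: -abs(kv[1]))
    drivers = ", ".join(f"{name} ({weight:+.2f})" for name, weight in ranked)
    return f"{alert.tx_id}: rules=[{rules}] score={alert.model_score:.2f} drivers=[{drivers}]"


alerts = [
    Alert("tx-1", ["amount_over_threshold"], 0.20, {"new_device": 0.05}),
    Alert("tx-2", ["sanctioned_country"], 0.90,
          {"night_time": -0.10, "new_counterparty": 0.40}),
    Alert("tx-3", [], 0.95),  # no rule fired, so this sketch does not alert on it
]

for alert in triage(alerts):
    print(explain(alert))
```

Dropping `tx-3` is a design choice in this sketch, not a recommendation: a real system might route high-score, no-rule cases to a separate review queue rather than discard them.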

- Real-Time Screening & Triage

Batch processing creates latency. Modern systems assess transactions and counterparties in real time, improving both effectiveness and response speed.

- Human-in-the-Loop AI

Without regular feedback from analysts, AI models drift. Smart compliance leaders are embedding humans at key points in the process to continuously train and improve system performance.
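A simple way to notice drift is to track how often analysts confirm what the system flags. The sketch below assumes each reviewed alert yields a yes/no disposition. The `FeedbackMonitor` class, the window size, and the tolerance are hypothetical and would need tuning against real case data.

```python
from collections import deque


class FeedbackMonitor:
    """Track the share of recent alerts that analysts confirmed as suspicious."""

    def __init__(self, window: int = 100, min_precision: float = 0.10):
        self.outcomes = deque(maxlen=window)  # True = analyst confirmed the alert
        self.min_precision = min_precision    # hypothetical tolerance

    def record(self, confirmed: bool) -> None:
        """Store one analyst disposition; the oldest one drops out when full."""
        self.outcomes.append(confirmed)

    def precision(self) -> float:
        """Confirmed alerts divided by reviewed alerts in the current window."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def needs_review(self) -> bool:
        """True once the window is full and precision is below the tolerance."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.precision() < self.min_precision


monitor = FeedbackMonitor(window=5, min_precision=0.4)
for confirmed in [True, True, False, False, False, False]:
    monitor.record(confirmed)

print(monitor.precision(), monitor.needs_review())
```

With a window of five, the first confirmation has already rolled out, leaving one confirmed alert in five. That falls below the 0.4 tolerance, so the monitor asks for a review of the model or its thresholds.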

- Adversarial Testing & Red-Teaming

Just like in cybersecurity, simulated criminal behavior can be used to test the robustness of your AML systems. This helps you discover blind spots before bad actors do.
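A red-team exercise can start small: generate simulated evasion scenarios and measure how many each detector catches. The sketch below assumes a single evasion tactic, splitting one total into several uneven payments. The two detectors, the limit, and the scenario generator are illustrative stand-ins rather than real AML controls.

```python
import random
from typing import Callable

Detector = Callable[[list[float]], bool]  # input: one account's payments for a day


def threshold_detector(amounts: list[float], limit: float = 10_000) -> bool:
    """Baseline rule: alert if any single payment exceeds the limit."""
    return any(a > limit for a in amounts)


def aggregate_detector(amounts: list[float], limit: float = 10_000) -> bool:
    """Variant rule: alert if the day's total exceeds the limit."""
    return sum(amounts) > limit


def structured_payments(total: float, parts: int, rng: random.Random) -> list[float]:
    """Simulated evasion: split one total into `parts` uneven payments."""
    cuts = sorted(rng.uniform(0, total) for _ in range(parts - 1))
    edges = [0.0, *cuts, total]
    return [b - a for a, b in zip(edges, edges[1:])]


def detection_rate(detector: Detector, scenarios: list[list[float]]) -> float:
    """Share of simulated scenarios on which the detector raises an alert."""
    return sum(detector(s) for s in scenarios) / len(scenarios)


rng = random.Random(0)  # fixed seed so the exercise is repeatable
scenarios = [structured_payments(15_000, parts=5, rng=rng) for _ in range(200)]

print("single-payment rule:", detection_rate(threshold_detector, scenarios))
print("daily-total rule:   ", detection_rate(aggregate_detector, scenarios))
```

The gap between the two rates is the blind spot. In practice the scenario generator would cover many more tactics, and the results would feed back into rule tuning and model retraining.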

What Compliance Teams Should Do Now

If you're building or buying AI systems for compliance in 2025, here's what to prioritize:

- Don’t just automate; contextualize. A high-performing model without risk context is just an expensive noise generator.

- Demand explainability from your vendors. You should be able to trace why a model flagged or ignored a transaction, down to the data feature.

- Invest in observability, not just performance. Regulators will increasingly expect evidence that your systems are being monitored, maintained, and stress-tested.

- Layer your defences. No single model or rule engine will catch everything. A composable system of tools, rules, and people is your best chance at resilience.

Final Thought: It’s Not Just About AI, It’s About System Design

AI is a powerful tool, but in isolation, it’s not enough. The future of compliance lies in modular, explainable, real-time systems that bring together rule logic, AI insight, and human expertise to fight adversarial behavior at scale.

Until then, the best compliance professionals won’t just use AI — they’ll interrogate it.

Sources & Further Reading:

True Cost of Financial Crime Compliance Global Study, 2023

Deloitte EMEA Model Risk Management Survey, 2023

FATF Guidance on the Risk-Based Approach to Combating Money Laundering

Improving AI Explainability: Key Strategies for Compliance & RegTech

Bank of England, Artificial Intelligence in UK Financial Services 2024