3 RESULTS, DISCUSSION AND REFERENCES

RESULTS

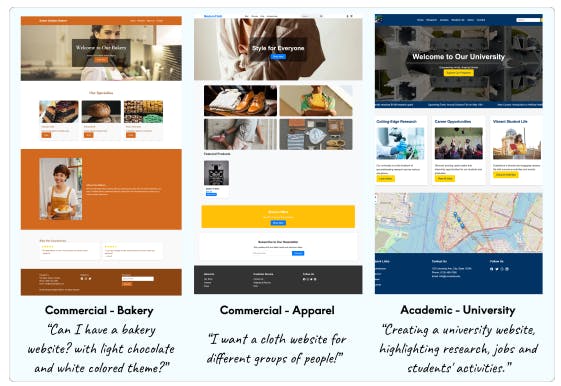

The generated websites, as illustrated in Figure 3, exhibit generally satisfactory visual appearances. They include contextually appropriate textual content, imagery, color schemes, layouts, and functionalities. These results align with our "intent-based" objective of requiring users only to express their intent while scaffolding Generative AI to deliver the final output, potentially reducing the communication costs between users and Generative AI systems. This task transition paradigm may motivate further exploration of intent-based interfaces, potentially extending to more complex tasks with interdependent components, such as video generation.

For example, we might envision an abstract-to-detailed task transition process for generating video advertisements that begins with sketches and thematic inputs, transitions to script writing, then proceeds to generate textual and visual descriptions of storyboards, followed by video generation and editing, and culminates in iterative video refinement. We aim to further investigate the potential of intent-based user interfaces in streamlining complex, interdependent workflows across various domains.
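As a rough illustration of this abstract-to-detailed flow, the following minimal sketch chains stages so that each stage consumes the artifact produced by the previous one. It is not the system described in this paper: the `TransitionPipeline` class, the `make_stub` helper, and the stage names are hypothetical, and the stub stages only wrap their input in a label where a real system would presumably call a generative model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A stage maps the previous artifact to a more detailed one.
# Hypothetical type: a real stage would wrap a generative model call.
Stage = Callable[[str], str]


@dataclass
class TransitionPipeline:
    """Ordered list of named stages, run from abstract to detailed."""
    stages: List[Tuple[str, Stage]] = field(default_factory=list)

    def add(self, name: str, stage: Stage) -> "TransitionPipeline":
        self.stages.append((name, stage))
        return self

    def run(self, intent: str) -> List[Tuple[str, str]]:
        """Feed each stage's output into the next; return the full trace."""
        trace: List[Tuple[str, str]] = []
        artifact = intent
        for name, stage in self.stages:
            artifact = stage(artifact)
            trace.append((name, artifact))
        return trace


def make_stub(label: str) -> Stage:
    """Placeholder stage: records which step processed the artifact."""
    return lambda previous: f"{label}({previous})"


# Stage order follows the video advertisement example in the text.
pipeline = (
    TransitionPipeline()
    .add("script", make_stub("script"))
    .add("storyboard", make_stub("storyboard"))
    .add("video", make_stub("video"))
    .add("refinement", make_stub("refine"))
)

trace = pipeline.run("sketch+theme")
for name, artifact in trace:
    print(f"{name}: {artifact}")
```

Keeping the full trace, rather than only the final artifact, is one possible design choice: it leaves every intermediate output available for inspection or for re-running a single stage during iterative refinement.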

Future work could focus on empirical studies that validate the effectiveness of this task transition approach in more diverse and complex task environments. Additionally, research into optimizing the task transition process and enhancing the quality of inter-task communication may yield improvements in overall performance.

REFERENCES

[1] John Joon Young Chung, Wooseok Kim, Kang Min Yoo, Hwaran Lee, Eytan Adar, and Minsuk Chang. 2022. TaleBrush: Visual Sketching of Story Generation with Pretrained Language Models. In CHI Conference on Human Factors in Computing Systems Extended Abstracts. ACM, New Orleans LA USA, 1–4. https://doi.org/10.1145/3491101.3519873

[2] Zijian Ding. 2024. Advancing GUI for Generative AI: Charting the Design Space of Human-AI Interactions through Task Creativity and Complexity. In Companion Proceedings of the 29th International Conference on Intelligent User Interfaces. ACM, Greenville SC USA, 140–143. https://doi.org/10.1145/3640544.3645241

[3] Zijian Ding. 2024. Towards Intent-based User Interfaces: Charting the Design Space of Intent-AI Interactions Across Task Types. arXiv preprint arXiv:2404.18196 (2024).

[4] Zijian Ding and Joel Chan. 2023. Mapping the Design Space of Interactions in Human-AI Text Co-creation Tasks. http://arxiv.org/abs/2303.06430 arXiv:2303.06430 [cs].

[5] Zijian Ding and Joel Chan. 2024. Intelligent Canvas: Enabling Design-Like Exploratory Visual Data Analysis through Rapid Prototyping, Iteration and Curation. arXiv preprint arXiv:2402.08812 (2024).

[6] Zijian Ding, Alison Smith-Renner, Wenjuan Zhang, Joel R. Tetreault, and Alejandro Jaimes. 2023. Harnessing the Power of LLMs: Evaluating Human-AI Text Co-Creation through the Lens of News Headline Generation. http://arxiv.org/abs/2310.10706 arXiv:2310.10706 [cs].

[7] Zijian Ding, Arvind Srinivasan, Stephen Macneil, and Joel Chan. 2023. Fluid Transformers and Creative Analogies: Exploring Large Language Models’ Capacity for Augmenting Cross-Domain Analogical Creativity. In Proceedings of the 15th Conference on Creativity and Cognition (C&C ’23). Association for Computing Machinery, New York, NY, USA, 489–505. https://doi.org/10.1145/3591196.3593516

[8] Jakob Nielsen. 2023. AI: First New UI Paradigm in 60 Years. Nielsen Norman Group 18, 06 (2023), 2023.

[9] Chenglei Si, Yanzhe Zhang, Zhengyuan Yang, Ruibo Liu, and Diyi Yang. 2024. Design2Code: How Far Are We From Automating Front-End Engineering? http://arxiv.org/abs/2403.03163 arXiv:2403.03163 [cs].

[10] Jason Wu, Eldon Schoop, Alan Leung, Titus Barik, Jeffrey P. Bigham, and Jeffrey Nichols. 2024. UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback. http://arxiv.org/abs/2406.07739 arXiv:2406.07739 [cs].

[11] Chen Zhu-Tian, Zeyu Xiong, Xiaoshuo Yao, and Elena Glassman. 2024. Sketch Then Generate: Providing Incremental User Feedback and Guiding LLM Code Generation through Language-Oriented Code Sketches. http://arxiv.org/abs/2405.03998 arXiv:2405.03998 [cs].
