The failure wasn’t technical

When you’re responsible for rolling out AI inside a company, it’s easy to focus on the visible parts.

Models. Connectors. Retrieval. Permissions. Latency.

That’s the work everyone sees. But that’s not why my rollout struggled.

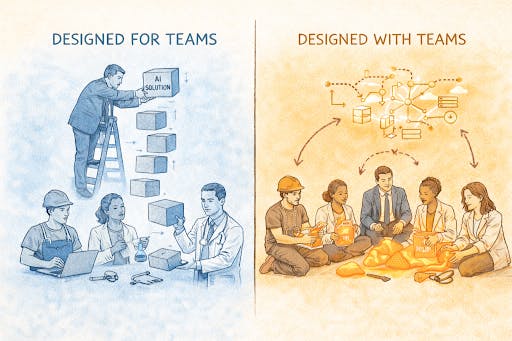

It didn’t fail because the system was broken. It failed because I was designing AI for teams instead of with them.

At Nextdoor, our early Work AI rollout stalled even though the technology was solid. Accuracy was fine. Performance was fine. Nothing was “on fire.”

What was off were our assumptions about how work actually happened.

When “good on paper” falls apart in real workflows

We started with a problem most companies recognize. Knowledge was everywhere: Slack threads, Confluence pages, Google Drive folders, Jira tickets, Salesforce records, GitHub repos, PagerDuty incidents.

Access wasn’t the issue. Context was.

So we built an enterprise search and AI layer to unify it all. From a technical standpoint, it did what it was supposed to do.

Indexing worked. Permissions were respected. Answers were usually correct. And yet adoption split down the middle.

Some teams used it every day. Others barely opened it. At first, we treated this like a training gap. More demos. Better docs. Clearer examples.

But the feedback we kept hearing pointed to something else.

“It’s helpful, but it’s not how we actually work.”

That sentence came up again and again.

The assumption I didn’t realize I was making

Without meaning to, I had designed the system around a clean, universal workflow.

One way to search.

One version of the answer.

One shared definition of “done.”

That’s not how companies work.

Engineering searches differently than Sales. Trust & Safety relies on patterns that rarely make it into documentation. Revenue Ops branches workflows based on region, timing, and account tier. HR answers the same question differently depending on who’s asking.

Our AI assumed a neat taxonomy. Teams lived in edge cases.

Queries returned the “right” document but missed the Slack thread that explained why that document mattered. The agent handled most requests just fine, but the cases it missed were the ones that paged someone at 2 a.m., and those were the moments teams cared about most. So teams asked for multiple versions of the same agent, because role context mattered more than global correctness.

The system wasn’t wrong. It was misaligned.

The turning point: building together

The shift happened during a working session with a team that had been waiting for their first production agent.

I showed up ready to walk them through what we’d built. I didn’t get very far.

Within minutes, the gaps surfaced.

“This assumes we search by project name. We don’t.”

“We need two outputs. One for on-call, one for postmortems.”

“This misses the stuff people only explain verbally.”

We stopped presenting and started mapping their actual workflow. Where signals really came from. Where humans stepped in because systems failed. Where context lived outside any tool.

We rebuilt the agent together.

Signals → Agent → Human Judgment → Feedback → Agent

The changes themselves weren’t dramatic. Retrieval weights shifted. Prompts branched by role. Incomplete data was handled explicitly instead of ignored. Agents were versioned instead of forced into a single definition.
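To make those four changes concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the configuration we actually ran: the role names, version labels, source weights, prompt text, and the `rank_hits` and `build_prompt` helpers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AgentConfig:
    """One versioned agent definition for a single role (all values hypothetical)."""
    role: str
    version: str
    source_weights: dict[str, float]   # multiplier applied to each source's base score
    prompt_template: str               # role-specific prompt branch
    expected_sources: tuple[str, ...]  # sources this role normally relies on


# Agents are keyed by (role, version) so several definitions can coexist
# instead of being forced into one shared configuration.
AGENTS = {
    ("on_call", "v2"): AgentConfig(
        role="on_call",
        version="v2",
        source_weights={"slack": 1.5, "pagerduty": 1.4, "confluence": 1.0},
        prompt_template=(
            "Give the on-call engineer the next action.\n"
            "{context}\n{gaps}\nQuestion: {question}"
        ),
        expected_sources=("slack", "pagerduty", "confluence"),
    ),
    ("postmortem", "v1"): AgentConfig(
        role="postmortem",
        version="v1",
        source_weights={"confluence": 1.5, "github": 1.3, "slack": 0.8},
        prompt_template=(
            "Summarize causes and follow-ups for a postmortem.\n"
            "{context}\n{gaps}\nQuestion: {question}"
        ),
        expected_sources=("confluence", "github", "slack"),
    ),
}


def rank_hits(hits, weights):
    """Re-rank (source, base_score, text) hits using per-role source weights."""
    return sorted(hits, key=lambda h: h[1] * weights.get(h[0], 1.0), reverse=True)


def build_prompt(role, version, question, hits):
    """Build a role-specific prompt that names missing sources explicitly."""
    config = AGENTS[(role, version)]
    ranked = rank_hits(hits, config.source_weights)
    found = {source for source, _, _ in ranked}
    missing = [s for s in config.expected_sources if s not in found]
    context = "\n".join(f"[{source}] {text}" for source, _, text in ranked)
    if missing:
        # Incomplete data is surfaced to the model rather than silently ignored.
        gaps = f"No results from: {', '.join(missing)}. Say so instead of guessing."
    else:
        gaps = "All expected sources returned results."
    return config.prompt_template.format(context=context, gaps=gaps, question=question)


# Hypothetical retrieval results: (source, base relevance score, snippet).
hits = [
    ("confluence", 0.9, "Runbook: restart the queue worker."),
    ("slack", 0.7, "Thread: restart fails if the cache is cold."),
]
print(build_prompt("on_call", "v2", "Queue is backed up, what now?", hits))
```

With these made-up weights, the same two hits are ordered differently for each role: the on-call agent sees the Slack thread first, while the postmortem agent leads with the Confluence runbook. Both prompts state which expected sources returned nothing.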

What changed immediately was adoption.

Co-creation turned out to be the fix

After that session, we changed how we approached enablement entirely.

Slack became the place where ideas and friction surfaced in real time. Office hours turned into build sessions instead of walkthroughs. Successful agents became starting points, not “finished” solutions.

A loop formed naturally: ask, try, adjust, repeat.

That loop mattered more than any single feature we shipped.

What changed once teams shaped the system

Once teams were involved in shaping the system, creativity picked up fast.

Engineers pulled incident context from PagerDuty, GitHub, and Confluence without chasing links. Revenue Ops automated enrichment work that used to take hours of manual cross-checking. Sales teams pulled together client context before meetings without switching tools. HR turned open enrollment into something employees could navigate on their own. Trust & Safety teams spent more time on judgment and less on triage.

None of this came from a central roadmap.

It came from people who finally saw the system as something they could influence.

From resistance to ownership

The tone of feedback changed in a way I didn’t expect.

Early on, feedback sounded like:

“This isn’t working.”

Later, it sounded more like:

“If we adjust retrieval here, this edge case goes away.”

Adoption stopped being something we pushed for. It became a side effect of relevance.

What changed after we let go of control

I assumed one workflow would work across teams. The moment Trust & Safety rejected the agent outright, I realized that assumption was the real failure.

I used to think collaboration meant getting buy-in after shipping. That same rejection made it obvious that collaboration had to happen before anything shipped.

Don’t optimize for correctness in isolation. Optimize for usefulness in context.

We’re moving toward a model where AI literacy is expected, not exceptional. Each function has enablement champions who understand both the tools and the reality of their work. The goal isn’t an AI center of excellence.

It’s an organization that knows how to think alongside machines.

Final thought

The fastest way to scale AI isn’t to build more agents.

It’s to build more builders.

Once teams realize they’re allowed to shape the system, improvement doesn’t need to be managed. It sustains itself.

That’s when AI stops being a project and starts becoming part of how work actually gets done.