Small Language Models (SLMs) are compact neural architectures – typically ranging from tens to a few hundred million parameters – built for clearly defined, domain-specific tasks. Unlike frontier LLMs designed for broad, open-ended reasoning, SLMs focus on narrow competencies such as rubric-aligned grading, structured feedback, or consistent subject-specific explanations. This specialization enables them to operate with dramatically lower computational overhead while maintaining, and in some cases surpassing, the accuracy of much larger models.

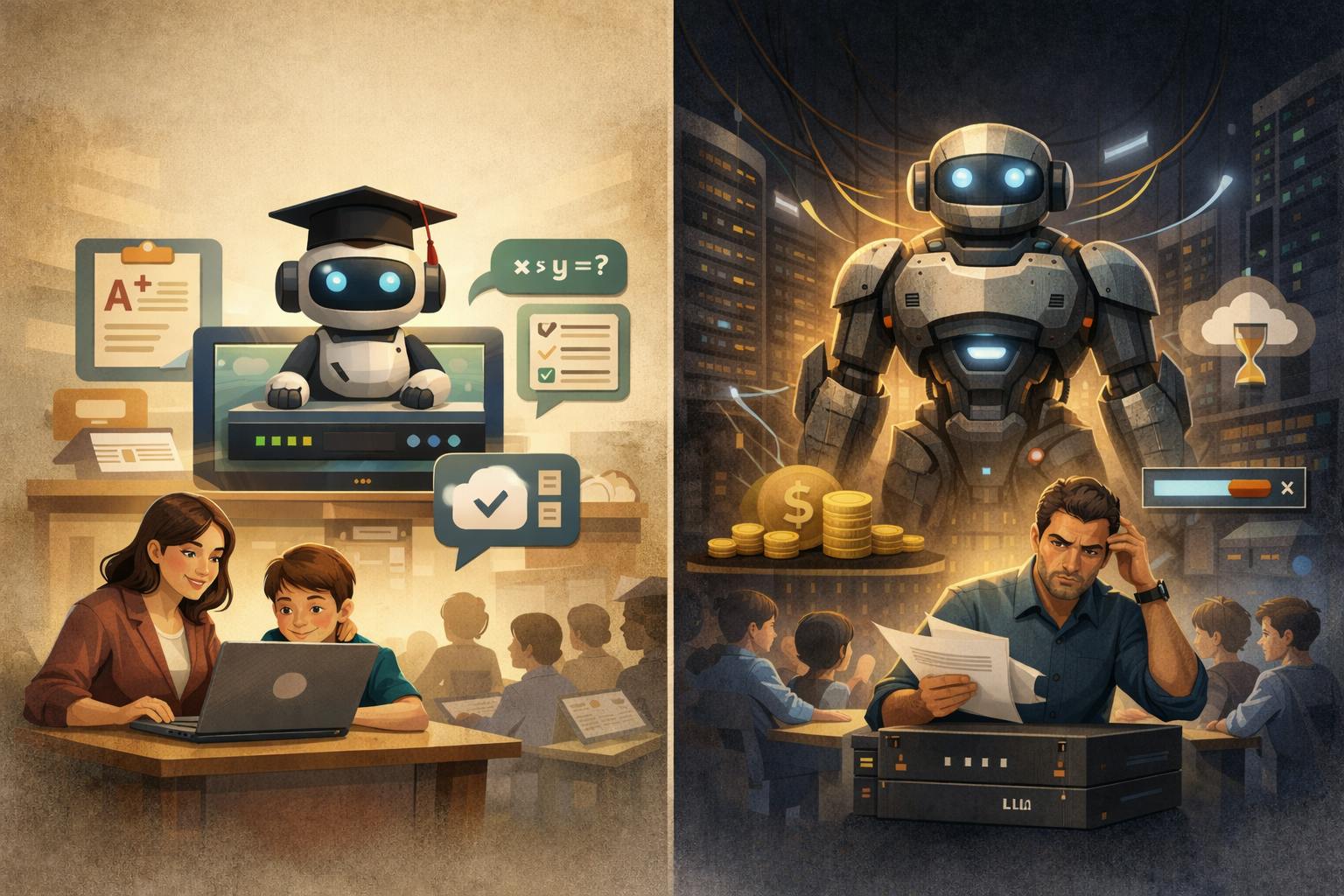

Massive LLMs are undeniably powerful, but their scale introduces trade-offs that make them less suitable for classroom environments. They are expensive: inference on models with 70B-400B parameters requires substantial hardware or costly API usage. For instance, GPT-4-class systems can cost 10-20x more per token than smaller open-source models (<13B parameters) running on modest, local hardware.

This is where the targeted nature of

Why Are SLMs Better Suited For Educational Tasks?

LLMs introduce latency at multiple stages of inference: model loading, token-by-token generation, and network round-trip time to remote servers. In practical terms, responses can take seconds rather than milliseconds, and delays compound sharply under batch usage, such as a teacher grading 30 essays simultaneously or a class of students submitting prompts at once. Even with aggressive optimization, frontier models require substantial GPU memory and bandwidth, making low-latency performance difficult to guarantee in real-world school environments.

SLMs avoid most of the latency issues that slow down large models. Because they contain far fewer parameters, they start up quickly and generate outputs much faster, even on basic CPUs or low-cost school servers. When an SLM runs locally, on a classroom laptop or an on-premise machine, there’s no need to send data over the internet, which removes network delays entirely. In practice, this means students and teachers get responses almost instantly, making SLMs far better suited for real-time tasks like grading, feedback, or writing support during a class session.
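One way to check these latency claims on a given machine is to time a batch of requests directly. The sketch below is a minimal harness, not a benchmark from this article: `grade_fn` is a hypothetical callable standing in for whichever model is under test (a local SLM or a remote LLM), and the stub grader and 30-essay batch are illustrative assumptions.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(grade_fn: Callable[[str], str], essays: List[str]) -> Dict[str, float]:
    """Time each call to grade_fn and summarize per-request latency in seconds."""
    latencies = []
    for essay in essays:
        start = time.perf_counter()
        grade_fn(essay)  # the model call under test
        latencies.append(time.perf_counter() - start)
    return {
        "count": len(latencies),
        "total_s": sum(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }


def stub_grader(essay: str) -> str:
    """Hypothetical stand-in for a real model; replace with an actual inference call."""
    return f"{len(essay.split())} words"


if __name__ == "__main__":
    batch = ["An example essay about photosynthesis."] * 30  # e.g. one class set
    print(measure_latency(stub_grader, batch))
```

Running the same harness against a local model and a remote API, with the same batch, gives a like-for-like comparison that includes network round-trip time for the remote case.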

In Which Specific Tasks Do SLMs Achieve The Same High Performance As LLMs?

SLMs demonstrate near-LLM performance in several well-defined educational tasks.

In essay scoring and rubric-based grading, SLMs fine-tuned on subject-specific criteria can deliver consistent, reproducible evaluations that closely track LLM-level scoring, but at 3–5× lower inference cost. Their ability to encode rubric logic directly into model behavior makes them particularly reliable for high-volume assessment workflows.
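To make the idea of rubric-driven scoring concrete, here is a minimal sketch of how per-criterion scores could be combined into a final grade outside the model. It assumes the fine-tuned model returns one integer score per rubric criterion; the `Criterion` class, the `weighted_score` function, and the example rubric are hypothetical and are not taken from any specific system.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float    # relative importance within the rubric
    max_points: int  # highest score the criterion allows


def weighted_score(rubric: List[Criterion], scores: Dict[str, int]) -> float:
    """Combine per-criterion scores into a 0-100 grade using rubric weights."""
    total_weight = sum(c.weight for c in rubric)
    if total_weight <= 0:
        raise ValueError("rubric weights must sum to a positive number")
    grade = 0.0
    for c in rubric:
        points = scores[c.name]
        if not 0 <= points <= c.max_points:
            raise ValueError(f"score for {c.name!r} is outside 0..{c.max_points}")
        grade += (points / c.max_points) * (c.weight / total_weight)
    return round(grade * 100, 1)


# Illustrative rubric; a real one would come from the course materials.
ESSAY_RUBRIC = [
    Criterion("thesis", weight=2.0, max_points=4),
    Criterion("evidence", weight=2.0, max_points=4),
    Criterion("grammar", weight=1.0, max_points=4),
]

# Scores as a fine-tuned model might return them (hypothetical output).
model_scores = {"thesis": 3, "evidence": 4, "grammar": 2}
print(weighted_score(ESSAY_RUBRIC, model_scores))  # 80.0
```

Keeping the aggregation in plain code, rather than asking the model for a single number, means the same criterion scores always yield the same grade and the weighting can be reviewed by a teacher.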

For structured feedback generation – including math explanations, lab-report commentary, and reading-comprehension guidance – SLMs excel at producing step-by-step, curriculum-aligned feedback. Their narrower scope reduces variability and hallucinations, offering educators more predictable outputs compared to general-purpose LLMs.

In academic writing support, domain-tuned SLMs handle paraphrasing, grammar correction, and localized revision suggestions with high precision, without the latency overhead or operational cost associated with large-scale models. Similarly, in multiple-choice and short-answer assessment, SLMs trained on existing datasets match LLM-level accuracy in answer selection and explanation generation – an essential requirement for scalable automated testing.

Engineering Perspective: Grading Accuracy And Reliability

From a technical perspective, SLMs are engineered to deliver consistent and reliable performance, which is essential for educational grading. By narrowing their scope to specific subjects and structured input formats, SLMs produce far less variation in their outputs – meaning similar assignments receive similar evaluations. This targeted design enables models that are both lightweight and dependable, without sacrificing the accuracy required for high-stakes assessments.
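One simple way to quantify output variation is to grade the same submission several times and measure the spread of the scores. The sketch below assumes a hypothetical `score_fn` that returns a numeric score for a piece of text; the stub scorer exists only so the example runs and does not represent a real model.

```python
import statistics
from typing import Callable, Dict


def score_spread(score_fn: Callable[[str], float], submission: str, runs: int = 5) -> Dict[str, float]:
    """Grade the same submission `runs` times and report how much the scores vary."""
    if runs < 2:
        raise ValueError("need at least two runs to measure variation")
    scores = [score_fn(submission) for _ in range(runs)]
    return {
        "mean": statistics.mean(scores),
        "stdev": statistics.pstdev(scores),  # population std. dev. of the repeated scores
        "range": max(scores) - min(scores),
    }


def deterministic_stub(submission: str) -> float:
    """Hypothetical scorer that always returns the same value for the same input."""
    return float(len(submission) % 10)


report = score_spread(deterministic_stub, "Sample lab report text.")
print(report)
```

A standard deviation and range of zero indicate fully reproducible grading for that input; larger values flag submissions where the grader is unstable and a human check may be warranted.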

Empirical tests

Thanks to this balance between efficiency and precision, SLMs are especially practical for educational institutions. They deliver real-time grading and feedback at 3–5× lower cost and latency compared with LLMs, making it feasible for schools to integrate AI into daily workflows without heavy infrastructure investments or prohibitive operational expenses.
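To see what a cost ratio in this range means for a single grading batch, the arithmetic can be sketched as follows. All numbers here are placeholders chosen only to illustrate the calculation: the per-token prices are hypothetical, not real quotes, and the 4x gap is simply one value inside the 3–5× range stated above.

```python
def grading_cost(num_essays: int, tokens_per_essay: int, price_per_1k_tokens: float) -> float:
    """Estimated cost of one grading batch, in the same currency as the price."""
    total_tokens = num_essays * tokens_per_essay
    return total_tokens / 1000 * price_per_1k_tokens


# Placeholder inputs; substitute measured token counts and actual prices.
ESSAYS = 30
TOKENS_PER_ESSAY = 2000   # prompt + rubric + generated feedback (assumed)
LLM_PRICE_PER_1K = 0.04   # hypothetical
SLM_PRICE_PER_1K = 0.01   # hypothetical, 4x cheaper

llm_cost = grading_cost(ESSAYS, TOKENS_PER_ESSAY, LLM_PRICE_PER_1K)
slm_cost = grading_cost(ESSAYS, TOKENS_PER_ESSAY, SLM_PRICE_PER_1K)
print(f"LLM: {llm_cost:.2f}  SLM: {slm_cost:.2f}  ratio: {llm_cost / slm_cost:.1f}x")
```

Multiplying the per-batch figure by the number of batches per term gives a rough budget estimate; for a locally hosted SLM, the per-token price would be replaced by an amortized hardware and electricity cost.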

Building Trust And Accessibility With SLMs

SLMs naturally enhance trust and accessibility in educational settings because they are far simpler to deploy and manage than massive LLMs. Their compact size eliminates the need for costly servers, high-end GPUs, or large cloud contracts, reducing infrastructure overhead and making AI-powered tools realistic even for schools with limited budgets.

SLMs also deliver substantially faster response times, improving the user experience for both teachers and students. While LLMs can take several seconds or longer to produce outputs, especially under heavy load, SLMs generate results almost instantly on modest hardware. This rapid feedback loop keeps classroom workflows fluid and makes the system feel more reliable and responsive, reinforcing confidence in daily use.

Transparency and auditability represent another key advantage. Smaller, task-focused models are easier to inspect, document, and validate than massive LLMs. Educators can trace how scores or feedback were generated, an essential requirement for trust in automated grading and academic support. By combining lower operational costs, faster performance, and greater interpretability, SLMs make AI tools both more accessible and more credible for real-world classroom environments.
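In practice, tracing how a score was produced usually starts with storing a small record next to each grade. The following is a minimal sketch of such a record under stated assumptions: the `GradeAuditRecord` fields, the model version string, and the rubric identifier are all hypothetical names introduced for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict


@dataclass(frozen=True)
class GradeAuditRecord:
    model_version: str        # identifier of the deployed model (hypothetical naming)
    rubric_id: str            # which rubric the model was asked to apply
    submission_sha256: str    # hash of the input, so the text itself need not be stored
    criterion_scores: Dict[str, int]
    graded_at: str            # UTC timestamp in ISO 8601 format


def make_audit_record(model_version: str, rubric_id: str, submission: str,
                      criterion_scores: Dict[str, int]) -> GradeAuditRecord:
    """Build a record that lets a reviewer check which model and rubric produced a grade."""
    digest = hashlib.sha256(submission.encode("utf-8")).hexdigest()
    return GradeAuditRecord(
        model_version=model_version,
        rubric_id=rubric_id,
        submission_sha256=digest,
        criterion_scores=dict(criterion_scores),
        graded_at=datetime.now(timezone.utc).isoformat(),
    )


record = make_audit_record("essay-slm-v1", "grade8-argument", "Student essay text.",
                           {"thesis": 3, "evidence": 4})
print(json.dumps(asdict(record), indent=2))  # one JSON object per graded submission
```

Hashing the submission instead of storing it keeps student text out of the log while still allowing a reviewer to confirm that a disputed grade corresponds to a specific input.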

What Are The Long-Term Implications Of This Trend?

The rise of SLMs suggests that in education, precision and task alignment may matter more than scale. Models tailored to specific subjects, rubrics, or classroom activities appear capable of reaching accuracy levels close to those of large LLMs, while remaining faster, more affordable, and easier to deploy. This trend may challenge the long-held assumption that “bigger is always better”, hinting instead that AI designed around real-world teaching needs could offer more practical value.

Looking ahead, it seems likely that educational AI will continue moving toward highly specialized, task-focused models. Future SLMs could become even more efficient – potentially supporting complex grading, feedback, and tutoring while staying lightweight and interpretable. If this trajectory continues, schools may increasingly choose such models for everyday instruction, fostering an ecosystem where speed, transparency, and accessibility take precedence over raw model size.